Large language models (LLMs) now power many interactive applications, which brings both benefits and risks. A prominent risk is that these models can generate harmful content. Traditional moderation systems typically use binary classification (safe vs. unsafe) and so lack the granularity to distinguish between levels of harm. That limitation pushes platforms toward either overly restrictive moderation, which degrades the user experience, or overly permissive filtering, which exposes users to harmful content.

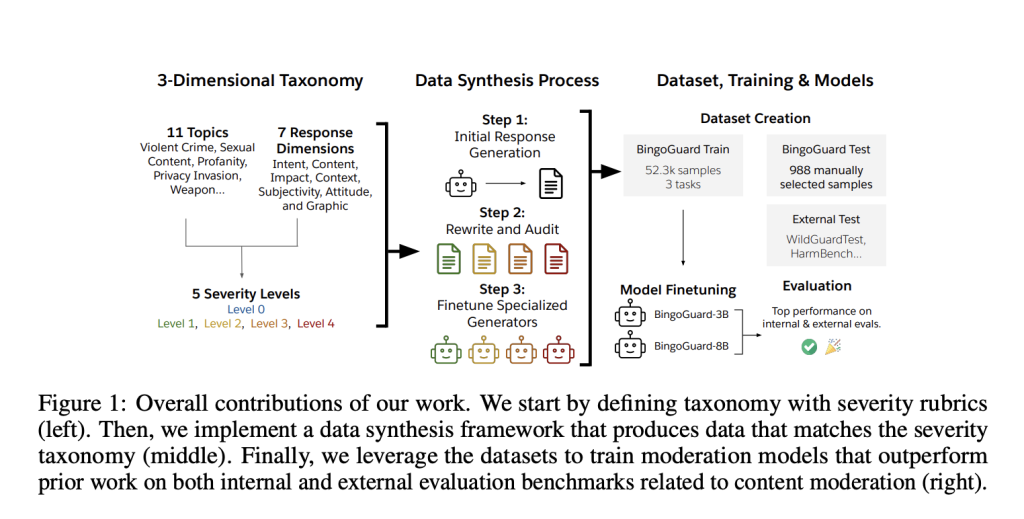

Salesforce AI introduces BingoGuard, an LLM-based moderation system that addresses this limitation by predicting both a binary safety label and a severity level. BingoGuard uses a structured taxonomy that groups potentially harmful content into eleven topics, including violent crime, sexual content, profanity, privacy invasion, and weapon-related content. Each topic has five defined severity levels, from benign (level 0) to extreme risk (level 4). Platforms can therefore set moderation thresholds that match their own safety guidelines instead of accepting a single safe/unsafe cutoff.
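To make the calibration idea concrete, here is a minimal sketch of how a platform might apply per-topic severity thresholds to a moderation prediction. It is not BingoGuard's actual interface: `ModerationResult`, `should_block`, the threshold table, and the rule that any level above 0 counts as "unsafe" are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict


class Severity(IntEnum):
    """Five severity levels as described in the article (0 = benign, 4 = extreme risk)."""
    LEVEL_0 = 0
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4


@dataclass(frozen=True)
class ModerationResult:
    """Hypothetical container for one prediction: a topic plus a severity level."""
    category: str
    severity: Severity

    @property
    def is_unsafe(self) -> bool:
        # Assumption: the binary label is "unsafe" for any level above 0.
        return self.severity > Severity.LEVEL_0


def should_block(
    result: ModerationResult,
    thresholds: Dict[str, Severity],
    default_threshold: Severity = Severity.LEVEL_1,
) -> bool:
    """Block when the predicted severity reaches the platform's limit for that topic."""
    limit = thresholds.get(result.category, default_threshold)
    return result.severity >= limit


# Hypothetical policy: tolerate mild profanity, but block any violent-crime content.
policy = {
    "profanity": Severity.LEVEL_3,
    "violent crime": Severity.LEVEL_1,
}

mild_profanity = ModerationResult("profanity", Severity.LEVEL_2)
mild_violence = ModerationResult("violent crime", Severity.LEVEL_1)
```

Under this hypothetical policy, `should_block(mild_profanity, policy)` is false while `should_block(mild_violence, policy)` is true, even though a binary classifier would label both simply as unsafe.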

BingoGuard builds its training dataset, BingoGuardTrain, with a “generate-then-filter” approach. The dataset contains 54,897 entries spanning multiple severity levels and content styles. The framework first generates responses targeted at each severity level, then filters them for quality and relevance. To generate those responses, a separate LLM is fine-tuned for each severity level on carefully selected, expert-audited seed data, so that its outputs follow the severity rubric for that level. The resulting moderation model, BingoGuard-8B, is trained on this curated dataset and can distinguish between degrees of harmful content rather than only between safe and unsafe.
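The generate-then-filter idea can be sketched roughly as follows. This is an illustrative outline only, not the authors' pipeline: the per-level generators and the filter are stand-in functions, and every name here (`generate_then_filter`, `make_stub_generator`, `stub_judge`) is hypothetical. In a real system each generator would be a fine-tuned LLM and the filter would be a much stronger quality check.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical signatures: a generator maps a prompt to a response,
# and a judge decides whether a (prompt, response, level) triple is kept.
Generator = Callable[[str], str]
Judge = Callable[[str, str, int], bool]


def generate_then_filter(
    prompts: Iterable[str],
    generators: Dict[int, Generator],
    judge: Judge,
) -> List[dict]:
    """Generate one response per (prompt, severity level), keeping those the judge accepts."""
    dataset = []
    for prompt in prompts:
        for level, generate in sorted(generators.items()):
            response = generate(prompt)           # step 1: generate for this level
            if judge(prompt, response, level):    # step 2: filter for quality
                dataset.append(
                    {"prompt": prompt, "response": response, "severity": level}
                )
    return dataset


def make_stub_generator(level: int) -> Generator:
    """Stand-in for a severity-specific fine-tuned model (assumption, not a real model)."""
    def generate(prompt: str) -> str:
        # Simulate one failed generation so the filter has something to reject.
        if level == 3:
            return ""
        return f"[level {level}] response to: {prompt}"
    return generate


def stub_judge(prompt: str, response: str, level: int) -> bool:
    """Stand-in filter: keep non-empty responses tagged with the intended level."""
    return bool(response) and response.startswith(f"[level {level}]")


stub_generators = {level: make_stub_generator(level) for level in range(1, 5)}
rows = generate_then_filter(["example prompt"], stub_generators, stub_judge)
```

With these stubs, `rows` holds one entry each for levels 1, 2, and 4, and the empty level-3 generation is dropped by the filter.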

Empirical evaluations indicate strong performance. On BingoGuardTest, an expert-labeled dataset of 988 examples, BingoGuard-8B achieves higher detection accuracy than leading moderation models such as WildGuard and ShieldGemma, with improvements of up to 4.3%. It is notably more accurate on lower-severity content (levels 1 and 2), which binary classifiers have traditionally found difficult. Further analysis found only a relatively weak correlation between the predicted “unsafe” probability and the actual severity level, which suggests that a binary classifier's confidence is not a reliable proxy for severity and that severity needs to be modeled explicitly. These findings point to a fundamental gap in moderation methods that rely primarily on binary classification.
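One way to check this kind of relationship on your own labeled data is a rank correlation between a classifier's "unsafe" probability and the annotated severity level. The sketch below implements Spearman's rank correlation in plain Python. The article does not say which correlation measure the authors used, so the choice of Spearman is an assumption, and no data from the paper is reproduced here.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks, giving tied values the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one input is constant")
    return cov / sqrt(var_x * var_y)


def spearman(unsafe_probs: Sequence[float], severities: Sequence[int]) -> float:
    """Spearman rank correlation: Pearson correlation computed on the ranks."""
    if len(unsafe_probs) != len(severities) or len(unsafe_probs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 items")
    return pearson(average_ranks(unsafe_probs), average_ranks(severities))
```

A value near 1 would mean the binary score orders examples much as the severity labels do. A value near 0 would match the weak relationship described above, where thresholding the "unsafe" probability cannot substitute for an explicit severity prediction.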

In conclusion, BingoGuard improves AI-driven content moderation by pairing binary safety labels with severity assessments. This lets platforms moderate with finer control and reduces the risks of both overly cautious and insufficient moderation. Salesforce’s BingoGuard thus offers an improved framework for moderating AI-generated content.

All credit for this research goes to the researchers of this project.

The post Salesforce AI Introduce BingoGuard: An LLM-based Moderation System Designed to Predict both Binary Safety Labels and Severity Levels appeared first on MarkTechPost.
