In today’s information-rich digital landscape, navigating extensive web content can be overwhelming. Whether you’re researching for a project, studying complex material, or trying to extract specific information from lengthy articles, the process can be time-consuming and inefficient. This is where an AI-powered Question-Answering (Q&A) bot becomes invaluable.

This tutorial will guide you through building a practical AI Q&A system that can analyze webpage content and answer specific questions about it. Instead of relying on paid API services, we’ll use open-source models from Hugging Face to create a solution that’s:

- Completely free to use

- Runs in Google Colab (no local setup required)

- Customizable to your specific needs

- Built on a pre-trained transformer model fine-tuned for question answering

By the end of this tutorial, you’ll have a functional web Q&A system that can help you extract insights from online content more efficiently.

What We’ll Build

We’ll create a system that:

- Takes a URL as input

- Extracts and processes the webpage content

- Accepts natural language questions about the content

- Returns answers extracted directly from the webpage text

Prerequisites

- A Google account to access Google Colab

- Basic understanding of Python

- No prior machine learning knowledge required

Step 1: Setting Up the Environment

First, open Google Colab (colab.research.google.com) and create a new notebook.

Let’s start by installing the necessary libraries:

```python
# Install required packages
!pip install transformers torch beautifulsoup4 requests
```

This installs:

- transformers: Hugging Face’s library for state-of-the-art NLP models

- torch: PyTorch deep learning framework

- beautifulsoup4: For parsing HTML and extracting web content

- requests: For making HTTP requests to webpages

Step 2: Import Libraries and Set Up Basic Functions

Now let’s import all the necessary libraries and define some helper functions:

```python
import re
import textwrap

import requests
import torch
from bs4 import BeautifulSoup
from transformers import AutoModelForQuestionAnswering, AutoTokenizer

# Check if a GPU is available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(f"Using device: {device}")

# Function to extract readable text from a webpage
def extract_text_from_url(url):
    try:
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
        }
        # A timeout keeps the request from hanging indefinitely
        response = requests.get(url, headers=headers, timeout=15)
        response.raise_for_status()

        soup = BeautifulSoup(response.text, 'html.parser')

        # Remove scripts, styles, and page chrome that rarely hold article text
        for tag in soup(['script', 'style', 'header', 'footer', 'nav']):
            tag.decompose()

        text = soup.get_text()

        # Strip each line, split on double spaces, and drop empty fragments
        lines = (line.strip() for line in text.splitlines())
        chunks = (phrase.strip() for line in lines for phrase in line.split("  "))
        text = '\n'.join(chunk for chunk in chunks if chunk)

        # Collapse all remaining whitespace (including newlines) into single spaces
        text = re.sub(r'\s+', ' ', text).strip()
        return text

    except Exception as e:
        print(f"Error extracting text from URL: {e}")
        return None
```

This code:

- Imports all necessary libraries

- Sets up our device (GPU if available, otherwise CPU)

- Creates a function to extract readable text content from a webpage URL
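To check the cleaning logic without fetching a live page, here is a dependency-free sketch of the same idea that works on an HTML string. It swaps BeautifulSoup for Python's built-in `html.parser` module. The names `html_to_text`, `_TextCollector`, and `SKIP_TAGS` are hypothetical and are not part of the tutorial's code.

```python
import re
from html.parser import HTMLParser

# Tags whose contents are dropped (the same tags removed in extract_text_from_url)
SKIP_TAGS = {"script", "style", "header", "footer", "nav"}


class _TextCollector(HTMLParser):
    """Collects text nodes, ignoring anything nested inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # greater than 0 while inside a skipped element
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def html_to_text(html):
    """Return the visible text of an HTML string as one whitespace-normalized line."""
    parser = _TextCollector()
    parser.feed(html)
    parser.close()
    # Text nodes are joined with a space, so a word split by an inline tag
    # (for example "Hel<b>lo</b>") comes out as two words
    text = " ".join(parser.parts)
    # Collapse every run of whitespace (spaces, tabs, newlines) into one space
    return re.sub(r"\s+", " ", text).strip()
```

For example, `html_to_text("<nav>Menu</nav><p>Hello\n   world</p>")` keeps only the paragraph text and normalizes its whitespace.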

Step 3: Load the Question-Answering Model

Now let’s load a pre-trained question-answering model from Hugging Face:

```python
# Load pre-trained model and tokenizer
model_name = "deepset/roberta-base-squad2"
print(f"Loading model: {model_name}")

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForQuestionAnswering.from_pretrained(model_name).to(device)

print("Model loaded successfully!")
```

We’re using deepset/roberta-base-squad2, which is:

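- Based on the RoBERTa architecture (a Robustly Optimized BERT Pretraining Approach)

- Fine-tuned on SQuAD 2.0 (Stanford Question Answering Dataset), which includes unanswerable questions, so the model can also signal when the text contains no answer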

- A good balance between accuracy and speed for our task
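Models fine-tuned on SQuAD 2.0 are commonly trained to point both the start and end predictions at the first special token (`<s>` for RoBERTa) when a question has no answer in the text. Step 4 picks the start and end positions independently with `argmax`. A common alternative is to score every valid span (end at or after start, limited length) and compare the best one against the "null" score at position 0. The sketch below assumes that convention. `best_span` and its parameters are hypothetical and operate on plain Python lists of logits rather than on a Transformers API.

```python
from typing import List, Optional, Tuple


def best_span(
    start_logits: List[float],
    end_logits: List[float],
    context_start: int,
    context_end: int,
    max_answer_len: int = 30,
) -> Optional[Tuple[int, int, float]]:
    """Return (start, end, score) of the best span, or None for "no answer".

    Assumes position 0 is the special start token used for "no answer" and
    that context tokens occupy positions context_start .. context_end - 1.
    """
    # Score of the "no answer" prediction: both pointers on position 0
    null_score = start_logits[0] + end_logits[0]

    best: Optional[Tuple[int, int, float]] = None
    for s in range(context_start, context_end):
        # Only consider ends at or after the start, within the length limit
        for e in range(s, min(context_end, s + max_answer_len)):
            score = start_logits[s] + end_logits[e]
            if best is None or score > best[2]:
                best = (s, e, score)

    # Prefer "no answer" only when it strictly beats every valid span
    if best is None or null_score > best[2]:
        return None
    return best
```

The length limit matters. With `max_answer_len=2`, a high end logit far from the best start can no longer stretch the answer across an entire passage.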

Step 4: Implement the Question-Answering Function

Now, let’s implement the core functionality – the ability to answer questions based on the extracted webpage content:

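```python
def answer_question(question, context, max_length=512):
    # Token budget per context chunk: the model's maximum input length minus
    # the question tokens and a small margin for special tokens
    max_chunk_size = max_length - len(tokenizer.encode(question)) - 5

    # Chunk by tokens, not characters, so each chunk matches the budget.
    # (Transformers may warn that this sequence exceeds the model maximum;
    # that is expected because it is split into chunks below.)
    context_ids = tokenizer.encode(context, add_special_tokens=False)

    all_answers = []

    for i in range(0, len(context_ids), max_chunk_size):
        chunk = tokenizer.decode(context_ids[i:i + max_chunk_size])

        inputs = tokenizer(
            question,
            chunk,
            add_special_tokens=True,
            return_tensors="pt",
            max_length=max_length,
            truncation="only_second",  # truncate the context, never the question
        ).to(device)

        with torch.no_grad():
            outputs = model(**inputs)

        # Most likely start and end positions of the answer span
        answer_start = torch.argmax(outputs.start_logits).item()
        answer_end = torch.argmax(outputs.end_logits).item()

        # Sum of the start and end logits serves as a rough confidence score
        score = (outputs.start_logits[0][answer_start] + outputs.end_logits[0][answer_end]).item()

        # Decode the span, dropping special tokens such as <s> and </s>
        input_ids = inputs["input_ids"][0].tolist()
        answer = tokenizer.decode(input_ids[answer_start:answer_end + 1], skip_special_tokens=True).strip()

        # Skip empty or very short spans (end before start, or "no answer")
        if answer and len(answer) > 2:
            all_answers.append((answer, score))

    if all_answers:
        # Return the answer with the highest score across all chunks
        all_answers.sort(key=lambda x: x[1], reverse=True)
        return all_answers[0][0]
    else:
        return "I couldn't find an answer in the provided content."
```

This function: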

- Takes a question and the webpage content as input

- Handles long content by processing it in chunks

- Uses the model to predict the answer span (start and end positions)

- Processes multiple chunks and returns the answer with the highest confidence score
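The chunks in `answer_question` do not overlap, so an answer that straddles a chunk boundary can be cut in half and missed. A common refinement is to slide a fixed-size window over the token ids so that consecutive windows share some tokens. Below is a minimal sketch on plain Python lists. `sliding_windows` and its `window`/`overlap` parameters are hypothetical names. In Transformers, the tokenizer can produce similar overlapping windows when called with `return_overflowing_tokens=True` and a `stride`.

```python
from typing import List, Tuple


def sliding_windows(token_ids: List[int], window: int, overlap: int) -> List[Tuple[int, List[int]]]:
    """Split token_ids into windows of at most `window` tokens.

    Consecutive windows share `overlap` tokens. Each item is (offset, tokens),
    where offset is the index of the window's first token in token_ids, which
    lets a span found in a window be mapped back to the full document.
    """
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")

    step = window - overlap
    windows = []
    for start in range(0, len(token_ids), step):
        windows.append((start, token_ids[start:start + window]))
        # Stop once a window reaches the end of the sequence
        if start + window >= len(token_ids):
            break
    return windows
```

With `overlap=0`, this reproduces the tutorial's non-overlapping chunks. A larger overlap costs more model calls but gives a boundary-straddling answer a chance to appear whole in at least one window.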

Step 5: Testing and Examples

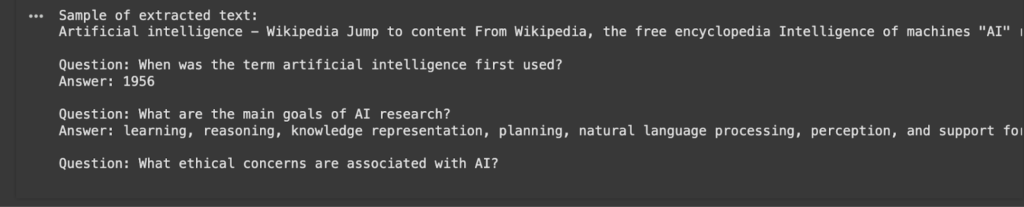

Let’s test the system on a Wikipedia article. Here’s the test code:

```python
url = "https://en.wikipedia.org/wiki/Artificial_intelligence"
webpage_text = extract_text_from_url(url)

# Only continue if the page was fetched and parsed successfully
if webpage_text:
    print("Sample of extracted text:")
    print(webpage_text[:500] + "...")

    questions = [
        "When was the term artificial intelligence first used?",
        "What are the main goals of AI research?",
        "What ethical concerns are associated with AI?"
    ]

    for question in questions:
        print(f"\nQuestion: {question}")
        answer = answer_question(question, webpage_text)
        print(f"Answer: {answer}")
```

This demonstrates how the system works on a real webpage.

Limitations and Future Improvements

Our current implementation has some limitations:

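- Very long webpages must be split into many independent chunks to fit the model’s context length, which slows processing and can cause an answer that crosses a chunk boundary to be missed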

- The model may not understand complex or ambiguous questions

- It works best with factual content rather than opinions or subjective material

Future improvements could include:

- Implementing semantic search to better handle long documents

- Adding document summarization capabilities

- Supporting multiple languages

- Implementing memory of previous questions and answers

- Fine-tuning the model on specific domains (e.g., medical, legal, technical)
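A cheap first step toward handling long documents is to rank chunks by how similar they are to the question and run the QA model only on the top few. The sketch below uses bag-of-words cosine similarity. This is a lexical technique, not semantic search: a semantic version would compare embedding vectors instead of word counts. `rank_chunks` and `top_k` are hypothetical names, and the lowercase word regex is a deliberately simple tokenizer.

```python
import math
import re
from collections import Counter
from typing import List


def _bag_of_words(text: str) -> Counter:
    # Lowercase word counts from a simple alphanumeric regex
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two word-count vectors (0.0 if either is empty)
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def rank_chunks(question: str, chunks: List[str], top_k: int = 3) -> List[str]:
    """Return up to top_k chunks, most similar to the question first."""
    q = _bag_of_words(question)
    scored = [(_cosine(q, _bag_of_words(chunk)), i) for i, chunk in enumerate(chunks)]
    # Sort by similarity (descending), breaking ties by original position
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [chunks[i] for _, i in scored[:top_k]]
```

In the tutorial’s flow, the chunks could be the decoded text chunks from `answer_question`, with the QA model then run only on the returned subset.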

Conclusion

You’ve now built an AI-powered Q&A system for webpages using open-source models. This tool can help you:

- Extract specific information from lengthy articles

- Research more efficiently

- Get quick answers from complex documents

By utilizing Hugging Face’s powerful models and the flexibility of Google Colab, you’ve created a practical application that demonstrates the capabilities of modern NLP. Feel free to customize and extend this project to meet your specific needs.

Useful Resources

- Hugging Face Transformers Documentation

- More about Question Answering Models

- SQuAD Dataset Information

- BeautifulSoup Documentation

Here is the Colab Notebook.

The post Building Your AI Q&A Bot for Webpages Using Open Source AI Models appeared first on MarkTechPost.


[Register Now] miniCON Virtual Conference on OPEN SOURCE AI: FREE REGISTRATION + Certificate of Attendance + 3 Hour Short Event (April 12, 9 am- 12 pm PST) + Hands on Workshop [Sponsored]
