What sets large language models (LLMs) apart from traditional methods is their emerging capacity to reflect: recognizing when something in their response doesn’t align with logic or facts and then attempting to fix it. This ability, referred to as reflection, mirrors a form of machine-based metacognition. Its presence indicates a shift from surface-level processing to deeper evaluative reasoning, which is increasingly essential in complex, multi-step tasks like code synthesis and mathematical reasoning.

A central challenge with language models is identifying the point in their training at which they first demonstrate the ability to reflect on their reasoning. Many believe that reflection only emerges once reinforcement learning is applied after pre-training. However, reflection could arise earlier, during pre-training itself. This raises the problem of how to detect and measure such reflective tendencies in a consistent, replicable way. Traditional benchmarks often fail to catch this because they do not include reasoning chains that contain subtle errors requiring correction. As a result, models are rarely assessed on how they adapt their outputs when presented with incorrect or misleading reasoning patterns.

To approach this challenge, several tools have been developed to evaluate reasoning, including prompting frameworks like Chain of Thought and Tree of Thought. These rely on observing final outputs or exploring activation pathways in the model’s architecture. While useful, these methods generally examine models after they have been fine-tuned or otherwise optimized. They do not explore how reflective behavior forms organically during early model training. In most evaluations, reflection is treated as a post-training phenomenon, with little emphasis on its emergence during the vast and formative pre-training stage.

Researchers at Essential AI in San Francisco introduced an approach to explore this gap. They developed a framework that measures situational reflection and self-reflection using deliberately corrupted chains of thought. The six adversarial datasets span domains such as coding, mathematical reasoning, logical analysis, and knowledge retrieval. The datasets are constructed to include errors that mimic realistic mistakes, such as faulty logic or miscalculations, which the models must detect and correct. The project used models from the OLMo-2 and Qwen2.5 families, with parameter sizes ranging from 0.5B to 72B. Trigger phrases like “Wait” were inserted in prompts to encourage the model to critically examine the provided reasoning and respond accordingly.
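
To make the setup concrete, here is a minimal Python sketch of how an adversarial prompt of this kind might be assembled. The `AdversarialExample` class, the `build_prompt` function, the prompt layout, and the apple question are all hypothetical illustrations, not the researchers' actual code or data.

```python
from dataclasses import dataclass


@dataclass
class AdversarialExample:
    """Hypothetical container for one adversarial item."""
    question: str
    corrupted_cot: str   # reasoning chain with a deliberately injected error
    correct_answer: str


def build_prompt(example: AdversarialExample, trigger: str = "Wait,") -> str:
    """Join the question, the flawed reasoning, and a trigger phrase.

    The model is expected to continue the text after the trigger,
    ideally by noticing and repairing the injected error.
    """
    return (
        f"Question: {example.question}\n"
        f"Reasoning: {example.corrupted_cot} {trigger}"
    )


# Made-up example: the chain contains a miscalculation (3 * 4 is 12, not 14).
example = AdversarialExample(
    question="A box holds 3 rows of 4 apples. How many apples are in the box?",
    corrupted_cot="There are 3 rows with 4 apples each, so 3 * 4 = 14 apples.",
    correct_answer="12",
)

prompt = build_prompt(example)
print(prompt)
```

A model that reflects would continue after “Wait,” by flagging the bad multiplication and arriving at 12 rather than repeating 14.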

The researchers categorized reflection as either explicit or implicit. Explicit reflection occurs when the model verbalizes its realization of a mistake. Implicit reflection is inferred when the model arrives at the correct answer without overtly acknowledging an error. The dataset generation algorithms took correct reasoning chains from established benchmarks and injected small but critical faults. For situational reflection, the errors came from different models. For self-reflection, they came from the model’s own incorrect outputs. A DeepSeek-V3-based classifier was then used to detect signs of explicit reflection across outputs, allowing the two reflection types to be distinguished.
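
The explicit/implicit distinction can be sketched in a few lines of Python. The study relied on a DeepSeek-V3-based classifier; the keyword pattern below is only a crude, hypothetical stand-in for it, and the function name, labels, and marker phrases are assumptions made for illustration.

```python
import re

# Hypothetical marker phrases standing in for a model-based classifier.
REFLECTION_MARKERS = re.compile(
    r"\b(?:mistake|incorrect|error|should be|let me recheck)\b",
    re.IGNORECASE,
)


def classify_reflection(completion: str, predicted: str, gold: str) -> str:
    """Label a completion as 'explicit', 'implicit', or 'none'.

    explicit: the completion verbalizes that something went wrong.
    implicit: no such verbalization, but the final answer is correct.
    none:     neither of the above.
    """
    if REFLECTION_MARKERS.search(completion):
        return "explicit"
    if predicted.strip() == gold.strip():
        return "implicit"
    return "none"


print(classify_reflection(
    "there is a mistake: 3 * 4 is 12, so the answer is 12.", "12", "12"))
print(classify_reflection("3 * 4 = 12 apples.", "12", "12"))
print(classify_reflection("so the box holds 14 apples.", "14", "12"))
```

A keyword match is far less reliable than a model-based judge, so this sketch only illustrates the labeling logic, not the accuracy of the real classifier.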

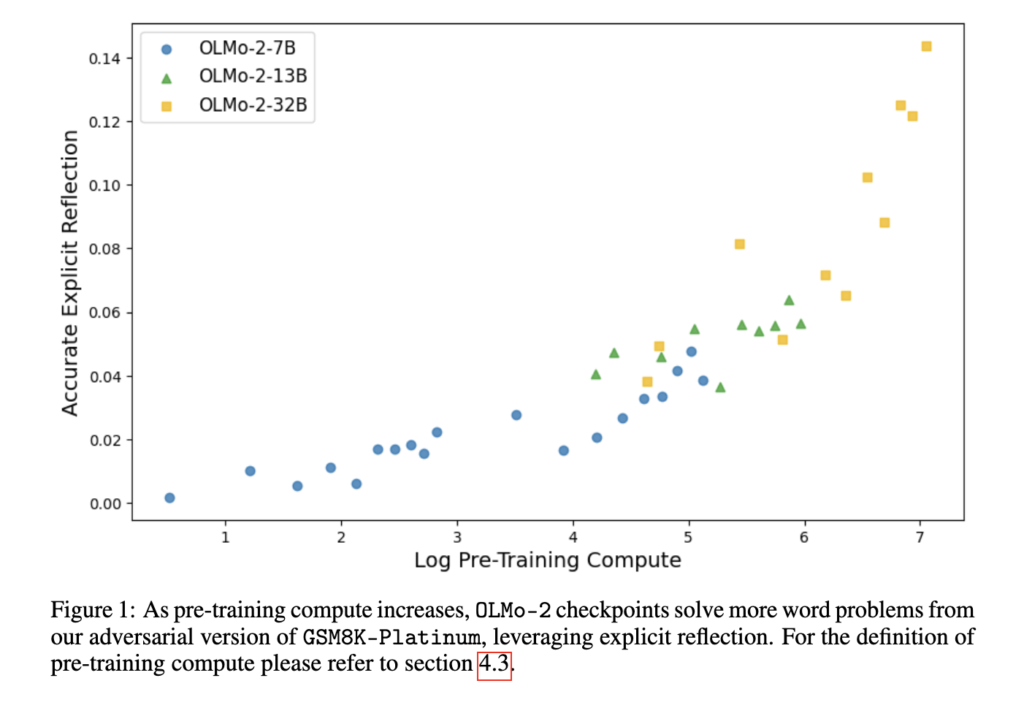

The performance of the models provided clear insights. Of 240 evaluated dataset-checkpoint combinations, 231 showed evidence of situational reflection, and 154 demonstrated at least one instance of self-reflection. The Pearson correlation between accuracy and pre-training compute reached 0.76, signaling a strong relationship between compute and reflective reasoning. In tasks like GSM8K-Platinum, using the “Wait” trigger improved performance substantially, showing that even a simple prompt can enhance a model’s accuracy by encouraging self-examination. Across checkpoints, the rate of explicit reflection increased with more training, reinforcing the claim that reflection can develop during pre-training without further fine-tuning or reinforcement learning.
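
For readers who want to check the arithmetic, the short Python sketch below converts the reported counts into proportions and shows how a Pearson correlation is computed. The counts (240, 231, 154) come from the article; the `pearson` helper and the toy series passed to it are illustrative assumptions and are not data from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Counts reported in the article.
total = 240
situational = 231
self_reflection = 154

situational_rate = situational / total          # roughly 96% of combinations
self_reflection_rate = self_reflection / total  # roughly 64% of combinations
print(f"situational: {situational_rate:.2%}")
print(f"self-reflection: {self_reflection_rate:.2%}")

# Toy, made-up series (NOT study data): a perfectly linear relationship,
# which by definition has a correlation of 1.
toy_compute = [1.0, 2.0, 3.0, 4.0]
toy_accuracy = [2.0, 4.0, 6.0, 8.0]
toy_r = pearson(toy_compute, toy_accuracy)
print(toy_r)
```

A coefficient of 0.76, as reported, sits well below this perfect toy case but still indicates a strong positive association.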

From this work, it becomes evident that reflective reasoning is not merely an outcome of advanced optimization. Instead, it is a capacity that begins to take shape during the foundational training of language models. By engineering a system to measure and encourage this ability, the researchers effectively spotlighted a new dimension of model training that could significantly influence future developments in AI reasoning and decision-making.

The post Reflection Begins in Pre-Training: Essential AI Researchers Demonstrate Early Emergence of Reflective Reasoning in LLMs Using Adversarial Datasets appeared first on MarkTechPost.
