Reasoning capabilities have become central to progress in large language models and are a core feature of the leading AI systems developed by major research labs. Despite a surge in research on understanding and enhancing LLM reasoning, significant methodological challenges persist in evaluating these capabilities accurately. Concerns about evaluation rigor are growing, because non-reproducible or inconclusive assessments risk distorting scientific understanding, misguiding adoption decisions, and skewing future research priorities. In the rapidly evolving landscape of LLM reasoning, where quick publication cycles and benchmarking competitions are commonplace, methodological shortcuts can silently undermine genuine progress. Reproducibility issues in LLM evaluations have been documented before, but their continued presence, particularly in reasoning tasks, demands heightened scrutiny and more stringent evaluation standards so that reported advances reflect genuine capabilities rather than artifacts of flawed assessment methodologies.

Numerous approaches have emerged to enhance reasoning capabilities in language models, with supervised fine-tuning (SFT) and reinforcement learning (RL) being the primary methods of interest. Recent work has built on the DeepSeek-R1 recipe with new RL algorithms such as LCPO, REINFORCE++, DAPO, and VinePPO. Researchers have also conducted empirical studies exploring RL design spaces, data scaling trends, curricula, and reward mechanisms. Despite these advancements, the field faces significant evaluation challenges. Machine learning progress is often not assessed rigorously, and many reported gains fail to hold up when tested against well-tuned baselines. RL algorithms are particularly sensitive to implementation details, including the choice of random seed, which raises concerns about the reliability of benchmarking practices.

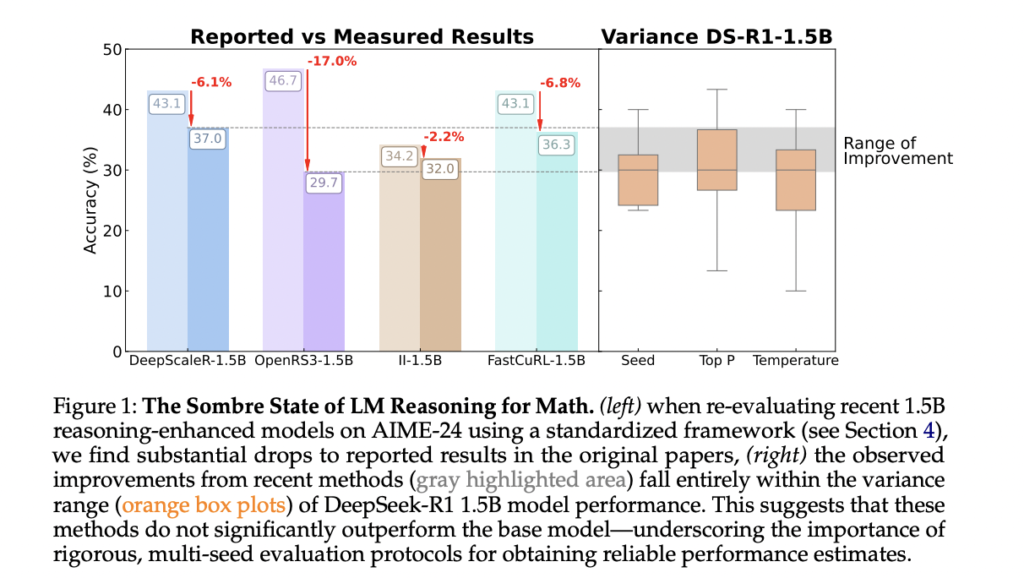

Motivated by inconsistent claims in reasoning research, this study by researchers from the Tübingen AI Center, the University of Tübingen, and the University of Cambridge conducts a rigorous investigation into mathematical reasoning benchmarks, revealing that many recent empirical conclusions fail under careful re-evaluation. The analysis identifies surprising sensitivity in LLM reasoning pipelines to minor design choices, including decoding parameters, prompt formatting, random seeds, and hardware configurations. Small benchmark sizes contribute significantly to this instability: on datasets like AIME’24 and AMC’23, a single question can shift Pass@1 scores by roughly 2.5 to 3.3 percentage points. Together, these factors lead to double-digit performance variations across seeds, undermining published results. The study systematically analyzes these sources of instability and proposes best practices for improving reproducibility and rigor in reasoning evaluations, providing a standardized framework for re-evaluating recent techniques under more controlled conditions.

The study explores design factors affecting reasoning performance in language models through a standardized experimental framework. Nine widely used models across the 1.5B and 7B parameter classes were evaluated, including DeepSeek-R1-Distill variants, DeepScaleR-1.5B, II-1.5B-Preview, OpenRS models, S1.1-7B, and OpenThinker-7B. Using consistent hardware (A100 GPU, AMD CPU) and software configurations, the models were benchmarked on the AIME’24, AMC’23, and MATH500 datasets using the Pass@1 metric. The analysis revealed significant performance variance across random seeds, with standard deviations ranging from 5 to 15 percentage points. This instability is particularly pronounced on the smaller datasets, where a single question can shift performance by 2.5 to 3.3 percentage points, making single-seed evaluations unreliable.
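The arithmetic behind these numbers can be sketched in a few lines. The snippet below is an illustration, not the study's code: the question counts (30, 40, and 500) are assumptions chosen to match the 2.5 to 3.3 point range quoted above, and the binomial model treats every question as an independent trial with the same success probability, which is a deliberate simplification.

```python
import math

# Assumed benchmark sizes (not stated in the article); 30 and 40 questions
# reproduce the 3.3 and 2.5 point per-question shifts quoted above.
BENCHMARK_SIZES = {"AIME'24": 30, "AMC'23": 40, "MATH500": 500}


def points_per_question(n_questions: int) -> float:
    """Change in Pass@1, in percentage points, when one answer flips."""
    return 100.0 / n_questions


def binomial_std_points(accuracy: float, n_questions: int) -> float:
    """Standard deviation of Pass@1, in percentage points, under the
    simplifying assumption that each question is an independent trial
    that succeeds with probability `accuracy`."""
    return 100.0 * math.sqrt(accuracy * (1.0 - accuracy) / n_questions)


for name, n in BENCHMARK_SIZES.items():
    print(
        f"{name}: one question = {points_per_question(n):.2f} points, "
        f"std at 30% accuracy = {binomial_std_points(0.30, n):.2f} points"
    )
```

Under these assumptions, a 30-question benchmark has a per-run spread of several percentage points from sampling noise alone, while a 500-question benchmark is far more stable. That is consistent with the article's point that small benchmarks amplify seed-to-seed variation.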

Based on rigorous standardized evaluations, the study reports several key findings about current reasoning methodologies in language models. Most RL-trained variants of the DeepSeek-R1-Distill model fail to deliver meaningful performance improvements, with only DeepScaleR demonstrating robust, significant gains across benchmarks. While RL training can substantially improve base model performance when applied to models like Qwen2.5, instruction tuning generally remains superior, with Open-Reasoner-Zero-7B being the notable exception. In contrast, SFT consistently outperforms instruction-tuned baselines across all benchmarks and generalizes well to new datasets like AIME’25, highlighting its robustness as a training paradigm. RL-trained models show pronounced performance drops between AIME’24 and the more challenging AIME’25, indicating problematic overfitting to training distributions. The study also investigates the relationship between response length and accuracy, finding that longer responses consistently show higher error rates across all model types.

This comprehensive analysis reveals that apparent progress in LLM-based reasoning has been built on unstable foundations, with performance metrics susceptible to minor variations in evaluation protocols. The investigation demonstrates that reinforcement learning approaches yield modest improvements at best and frequently exhibit overfitting to specific benchmarks, while supervised fine-tuning consistently delivers robust, generalizable performance gains. To establish more reliable assessment standards, standardized evaluation frameworks with Dockerized environments, seed-averaged metrics, and transparent protocols are essential. These findings highlight the critical need for methodological rigor over leaderboard competition to ensure that claimed advances in reasoning capabilities reflect genuine progress rather than artifacts of inconsistent evaluation practices.
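As a minimal sketch of what seed-averaged reporting could look like, the snippet below computes Pass@1 for each seed and then reports the mean and sample standard deviation across seeds. The function names and the toy results are hypothetical; they are made up for illustration and are not taken from the study or its evaluation framework.

```python
import statistics


def pass_at_1(correct_flags):
    """Pass@1 in percent for one run: share of questions answered correctly."""
    return 100.0 * sum(correct_flags) / len(correct_flags)


def seed_averaged_report(runs):
    """Summarize Pass@1 across seeds.

    `runs` maps a seed to a list of per-question booleans (True = correct).
    """
    scores = [pass_at_1(flags) for flags in runs.values()]
    return {
        "n_seeds": len(scores),
        "mean": statistics.mean(scores),
        # Sample standard deviation needs at least two seeds.
        "std": statistics.stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }


# Hypothetical toy data: 3 seeds, 4 questions each (not real results).
toy_runs = {
    0: [True, False, False, True],
    1: [True, True, False, True],
    2: [False, False, False, True],
}

report = seed_averaged_report(toy_runs)
print(f"Pass@1 = {report['mean']:.1f} +/- {report['std']:.1f} "
      f"over {report['n_seeds']} seeds "
      f"(range {report['min']:.1f} to {report['max']:.1f})")
```

Reporting the spread alongside the mean makes it visible when a claimed improvement is smaller than the variation between seeds.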


The post LLM Reasoning Benchmarks are Statistically Fragile: New Study Shows Reinforcement Learning RL Gains often Fall within Random Variance appeared first on MarkTechPost.
