The integration of Large Language Models (LLMs) with external tools, applications, and data sources is increasingly vital. Two significant methods for achieving seamless interaction between models and external systems are Model Context Protocol (MCP) and Function Calling. Although both approaches aim to expand the practical capabilities of AI models, they differ fundamentally in their architectural design, implementation strategies, intended use cases, and overall flexibility.

Model Context Protocol (MCP)

Anthropic introduced the Model Context Protocol (MCP) as an open standard for structured interactions between AI models and external systems. MCP emerged in response to the growing complexity of integrating AI capabilities into diverse software environments. Instead of building a bespoke connector for every pairing of AI application and external system, developers implement the protocol once on each side, and any MCP-compatible client can then work with any MCP-compatible server. This common, interoperable framework promotes efficiency and consistency.

MCP was motivated by the limitations encountered when integrating AI into large enterprises and software development environments, and it aims to provide scalability, interoperability, and stronger security. Its design reflects practical challenges in those settings, particularly managing sensitive data and ensuring reliable communication between components.

Detailed Architectural Breakdown

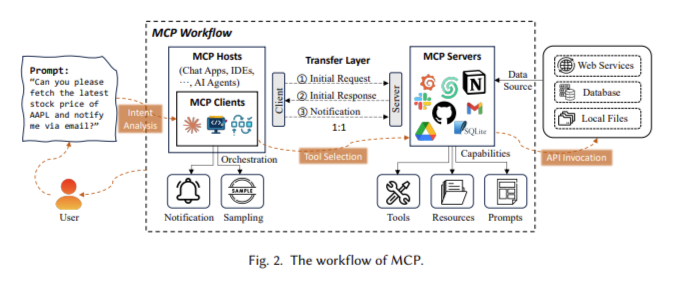

At its core, MCP uses a client-server architecture with three components:

- Host Process: This is the initiating entity, typically an AI assistant or an AI-powered application such as a desktop chat client or an IDE. It creates and manages MCP clients, orchestrates the flow of requests, and enforces security policies such as user consent.

- MCP Clients: These intermediaries manage requests and responses. Each client maintains a dedicated one-to-one connection with a single server and handles message encoding and decoding, sending requests, processing responses, and managing errors.

- MCP Servers: These wrap external systems or data sources and expose their data and functionality through standardized primitives (tools, resources, and prompts) described by schemas. They handle incoming requests from clients, perform the requested operations, and return structured responses.
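To make the division of roles concrete, here is a minimal in-process sketch in Python. The classes `Host`, `Client`, and `Server` and the `shout` tool are hypothetical; `tools/list` and `tools/call` are real MCP method names, but a real deployment would run each server as a separate process and exchange JSON-RPC messages over a transport, which this sketch replaces with direct method calls.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Server:
    """Hypothetical MCP-style server exposing named tools backed by Python functions."""
    name: str
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)

    def handle(self, method: str, params: dict[str, Any]) -> dict[str, Any]:
        # Simplified dispatch for two MCP method names; real tool listings
        # also carry a description and an input schema for each tool.
        if method == "tools/list":
            return {"tools": [{"name": name} for name in self.tools]}
        if method == "tools/call":
            fn = self.tools[params["name"]]
            output = fn(**params["arguments"])
            return {"content": [{"type": "text", "text": str(output)}]}
        raise ValueError(f"unknown method: {method}")


@dataclass
class Client:
    """One client per server (a 1:1 connection); relays requests and catches errors."""
    server: Server

    def request(self, method: str, params: dict[str, Any] | None = None) -> dict[str, Any]:
        try:
            return self.server.handle(method, params or {})
        except Exception as exc:  # surface server failures as an error result
            return {"error": str(exc)}


@dataclass
class Host:
    """The AI application: creates clients and controls which servers are reachable."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)

    def call_tool(self, server_name: str, tool: str, **arguments: Any) -> dict[str, Any]:
        if server_name not in self.clients:
            raise PermissionError(f"host has no connection to {server_name!r}")
        params = {"name": tool, "arguments": arguments}
        return self.clients[server_name].request("tools/call", params)


host = Host()
host.connect(Server("text-tools", {"shout": lambda text: text.upper()}))
print(host.call_tool("text-tools", "shout", text="hello"))
```

Keeping the client-per-server bookkeeping in the host means the host alone decides which servers a model can reach, which mirrors the host-mediated control described above.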

Communication uses JSON-RPC 2.0, a lightweight remote procedure call format in which every message is a JSON object. Its simplicity keeps integrations quick to build and messages compact. MCP supports multiple transports: standard input/output (stdio) for servers running as local subprocesses, and HTTP for remote servers, with Server-Sent Events (SSE) used to stream messages asynchronously from server to client.
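The following Python sketch builds JSON-RPC 2.0 messages of the kind MCP exchanges and frames them the way the stdio transport does, one JSON object per newline-terminated line. `tools/call` is a real MCP method name; the `get_weather` tool and its arguments are hypothetical.

```python
import json
from typing import Any


def make_request(request_id: int, method: str, params: dict[str, Any]) -> dict[str, Any]:
    """JSON-RPC 2.0 request; the id lets the sender match the eventual response."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


def make_result(request_id: int, result: dict[str, Any]) -> dict[str, Any]:
    """JSON-RPC 2.0 success response that echoes the request id."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def frame(message: dict[str, Any]) -> str:
    """stdio framing: compact JSON (json.dumps never emits raw newlines) plus one newline."""
    return json.dumps(message, separators=(",", ":")) + "\n"


def unframe(stream: str) -> list[dict[str, Any]]:
    """Split a newline-delimited stream back into individual messages."""
    return [json.loads(line) for line in stream.splitlines() if line.strip()]


request = make_request(1, "tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})
response = make_result(1, {"content": [{"type": "text", "text": "18 C, cloudy"}], "isError": False})

wire = frame(request) + frame(response)
for message in unframe(wire):
    print(message)
```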

Security Model

Security is central to MCP's design, which takes a host-mediated approach: the host decides which servers to connect to and what each may access. This model incorporates:

- Process Isolation: MCP servers typically run as separate processes, which hosts can further sandbox to limit unauthorized access and contain vulnerabilities.

- Path Restrictions: Access policies limit a server to predetermined file paths or system resources, reducing the potential attack surface.

- Encrypted Transport: Remote connections can be secured with standard encryption such as TLS, protecting data confidentiality, integrity, and authenticity in transit.
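Path restrictions like those described above are commonly enforced by resolving a requested path and checking that it stays inside an allowed root. The sketch below is an illustrative Python helper, not part of any MCP SDK; `ALLOWED_ROOTS` and `is_path_allowed` are hypothetical names, and `Path.is_relative_to` requires Python 3.9 or later.

```python
from pathlib import Path

# Hypothetical allowlist configured by the host for a file-system server.
ALLOWED_ROOTS = [Path("/srv/mcp-data").resolve()]


def is_path_allowed(requested: str) -> bool:
    """Resolve '..' segments and symlinks first, so traversal tricks cannot escape a root."""
    target = Path(requested).resolve()
    return any(target.is_relative_to(root) for root in ALLOWED_ROOTS)


print(is_path_allowed("/srv/mcp-data/reports/q1.csv"))
print(is_path_allowed("/srv/mcp-data/../../etc/passwd"))
```

Resolving before comparing matters: a naive string prefix check would accept the second path because it begins with `/srv/mcp-data`.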

Scalability and Performance

MCP is designed to handle complex, large-scale deployments. Because its messaging is asynchronous and event-driven, a client can keep several requests in flight at once and match each response to its request by the JSON-RPC id, which supports parallel operations and keeps latency low. These properties make MCP well suited to large enterprises that need high-performance AI integration with mission-critical systems.
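The following asyncio sketch illustrates why request ids matter for concurrency: three requests are sent at once, the simulated server answers them out of order, and each response is routed back to the right caller by its JSON-RPC id. `FakeTransport`, the `slow_lookup` tool, and its delays are hypothetical stand-ins for a real MCP connection.

```python
import asyncio
import time
from typing import Any


class FakeTransport:
    """Hypothetical stand-in for an MCP connection whose server replies after a delay."""

    def __init__(self) -> None:
        self.pending: dict[int, asyncio.Future] = {}
        self.tasks: set[asyncio.Task] = set()  # keep references so tasks are not garbage-collected
        self.next_id = 0

    async def _simulated_server(self, request: dict[str, Any]) -> None:
        seconds = request["params"]["arguments"]["seconds"]
        await asyncio.sleep(seconds)  # slower calls finish later, so replies arrive out of order
        self._on_message({"jsonrpc": "2.0", "id": request["id"], "result": seconds})

    def _on_message(self, response: dict[str, Any]) -> None:
        # Route each incoming response to the future registered under its id.
        self.pending.pop(response["id"]).set_result(response["result"])

    async def request(self, method: str, params: dict[str, Any]) -> Any:
        self.next_id += 1
        request = {"jsonrpc": "2.0", "id": self.next_id, "method": method, "params": params}
        future = asyncio.get_running_loop().create_future()
        self.pending[request["id"]] = future
        task = asyncio.create_task(self._simulated_server(request))
        self.tasks.add(task)
        task.add_done_callback(self.tasks.discard)
        return await future


async def main() -> tuple[list[Any], float]:
    transport = FakeTransport()
    start = time.perf_counter()
    # Three requests in flight at once; gather returns results in call order.
    results = await asyncio.gather(*(
        transport.request("tools/call", {"name": "slow_lookup", "arguments": {"seconds": s}})
        for s in (0.3, 0.1, 0.2)
    ))
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")  # total time tracks the slowest call, not the sum of all three
```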

Application Domains

The adaptability of MCP has led to widespread adoption across multiple sectors. In software development, MCP has been integrated into various platforms and Integrated Development Environments (IDEs), enabling real-time, context-aware coding assistance. MCP-enabled assistants can offer immediate suggestions, code completion, and intelligent error detection, helping developers identify and resolve issues quickly, streamline their work, and maintain high code quality.

MCP is also deployed in enterprise solutions where internal AI assistants securely interact with proprietary databases and enterprise systems. These assistants support decision-making by providing instant access to critical information, facilitating data analysis, and streamlining workflows, which together improve operational effectiveness and strategic agility.

Function Calling

Function Calling is a streamlined yet powerful approach that extends LLMs by letting them request the execution of external functions in response to user input or contextual cues. Traditional model interactions are limited to generating text from training data; with Function Calling, a model can trigger actions in real time. When a prompt implies or explicitly requests a specific task, such as checking the weather, querying a database, or calling an API, the model identifies the intent, selects the appropriate function from a predefined set, and produces the required arguments. The application then executes the function and returns the result to the model. This linkage between natural language understanding and programmable actions bridges the gap between conversational AI and software automation. As a result, Function Calling turns LLMs from static knowledge providers into interactive agents that can engage with external systems, retrieve fresh data, carry out live tasks, and deliver timely, contextually relevant results.

Detailed Mechanism

Function Calling proceeds in several stages:

- Function Definition: Developers explicitly define the available functions, typically giving each a name, a natural-language description, and a schema (commonly JSON Schema) for its parameters that states which are required and what types they expect. This clear structure is essential for accurate, reliable function selection and execution.

- Natural Language Parsing: On receiving user input, the model interprets the prompt to decide whether a function is needed, which one to call, and what argument values to supply.

After these stages, the model emits a structured output, commonly JSON, that names the function and its arguments. The application, not the model, executes the call and feeds the result back to the model, which can then make further calls or compose a response for the user.
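The stages above can be sketched in Python without tying the example to any particular vendor's API. The tool definition follows the common JSON Schema style, but the `get_weather` function, the shape of `model_output`, and the message format are hypothetical; in practice the model's reply would come from an LLM API rather than being hard-coded.

```python
import json
from typing import Any, Callable

# Function definition: name, description, and JSON Schema for the parameters.
# This list is what would be sent to the model alongside the user's prompt.
TOOLS = [{
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]


def get_weather(city: str) -> dict[str, Any]:
    """Hypothetical implementation; a real one would call a weather service."""
    return {"city": city, "forecast": "sunny", "temp_c": 22}


REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}

# Structured output: a hard-coded stand-in for what a model might emit after
# reading "What's the weather in Rome?" (arguments arrive as a JSON string).
model_output = {"tool_call": {"name": "get_weather", "arguments": '{"city": "Rome"}'}}


def execute_tool_call(call: dict[str, Any]) -> str:
    """External execution: the application, not the model, runs the function."""
    fn = REGISTRY[call["name"]]
    args = json.loads(call["arguments"])
    return json.dumps(fn(**args))


# Feed the result back so the model can compose its final answer.
tool_result = execute_tool_call(model_output["tool_call"])
messages = [
    {"role": "user", "content": "What's the weather in Rome?"},
    {"role": "tool", "name": "get_weather", "content": tool_result},
]
print(messages[-1]["content"])
```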

Security and Access Management

Function Calling relies primarily on external security management practices, specifically API security and controlled execution environments. Key measures include:

- API Security: Implementation of robust authentication, authorization, and secure API key management systems to prevent unauthorized access and ensure secure interactions.

- Execution Control: Stringent management of function permissions and execution rights, safeguarding against potential misuse or malicious actions.
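Execution control can be enforced in the application layer before any model-requested call runs. This Python sketch checks a hypothetical per-role allowlist and confirms that required arguments are present; `PERMISSIONS`, `REQUIRED_ARGS`, `ToolCallRejected`, and the tool names are illustrative assumptions, and a production system would add full JSON Schema validation and real authentication.

```python
from typing import Any

# Hypothetical policy: which roles may invoke which functions.
PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"get_weather"},
    "admin": {"get_weather", "delete_record"},
}

# Required argument names for each function, mirroring its definition.
REQUIRED_ARGS: dict[str, set[str]] = {
    "get_weather": {"city"},
    "delete_record": {"record_id"},
}


class ToolCallRejected(Exception):
    """Raised when a model-requested call fails an authorization or validation check."""


def authorize(role: str, name: str, arguments: dict[str, Any]) -> None:
    """Check a requested call before executing it; raise instead of running anything unsafe."""
    if name not in REQUIRED_ARGS:
        raise ToolCallRejected(f"unknown function {name!r}")
    if name not in PERMISSIONS.get(role, set()):
        raise ToolCallRejected(f"role {role!r} may not call {name!r}")
    missing = REQUIRED_ARGS[name] - set(arguments)
    if missing:
        raise ToolCallRejected(f"missing arguments for {name!r}: {sorted(missing)}")


authorize("admin", "delete_record", {"record_id": 42})  # passes silently
try:
    authorize("viewer", "delete_record", {"record_id": 42})
except ToolCallRejected as exc:
    print("rejected:", exc)
```

Because the check runs outside the model, a prompt that talks the model into requesting a privileged function still cannot execute it.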

Flexibility and Extensibility

One of the major strengths of Function Calling is its inherent flexibility and modularity. Functions are individually managed and can be easily developed, tested, and updated independently of one another. This modularity enables organizations to quickly adapt to evolving requirements, adding or refining functions without significant disruption.
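One common way to get this modularity in Python is a registry that each function joins on its own, so adding a function never requires editing a central dispatcher. The `tool` decorator and `REGISTRY` below are hypothetical helpers, and treating every parameter as a string is a deliberate simplification of writing a full schema by hand.

```python
import inspect
from typing import Any, Callable

REGISTRY: dict[str, dict[str, Any]] = {}


def tool(description: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Register a function together with a minimal definition derived from its signature."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        params = inspect.signature(fn).parameters
        REGISTRY[fn.__name__] = {
            "fn": fn,
            "definition": {
                "name": fn.__name__,
                "description": description,
                "parameters": {
                    "type": "object",
                    # Simplification: every parameter is described as a string.
                    "properties": {p: {"type": "string"} for p in params},
                    # Parameters without defaults are required.
                    "required": [p for p, v in params.items() if v.default is inspect.Parameter.empty],
                },
            },
        }
        return fn
    return decorator


@tool("Convert text to upper case.")
def shout(text: str) -> str:
    return text.upper()


@tool("Greet someone, optionally with a custom greeting.")
def greet(name: str, greeting: str = "Hello") -> str:
    return f"{greeting}, {name}!"


definitions = [entry["definition"] for entry in REGISTRY.values()]
print([d["name"] for d in definitions])
print(REGISTRY["greet"]["fn"](name="Ada"))
```

Each function can be added, tested, or removed in isolation; the list of definitions sent to the model is rebuilt from the registry.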

Practical Use Cases

Function Calling finds extensive use across a range of dynamic, task-oriented applications, most notably in the domains of conversational AI and automated workflows. In the context of conversational AI, Function Calling enables chatbots and virtual assistants to move beyond static, text-based interactions and instead perform meaningful actions in real time. These AI agents can dynamically schedule appointments, retrieve up-to-date weather or financial information, access personalized user data, or even interact with external databases to answer specific queries. This elevates their role from passive responders to active participants capable of handling complex user requests.

In automated workflows, Function Calling contributes to operational efficiency by enabling systems to perform tasks sequentially or in parallel based on predefined conditions or user prompts. For example, an AI system equipped with Function Calling capabilities could initiate a multi-step process such as invoice generation, email dispatch, and calendar updates, all triggered by a single user request. This level of automation is particularly beneficial in customer service, business operations, and IT support, where repetitive tasks can be offloaded to AI systems, allowing human resources to focus on strategic functions. Overall, the flexibility and actionability enabled by Function Calling make it a powerful tool in building intelligent, responsive AI-powered systems.
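The invoice example can be sketched as a short Python pipeline in which a single request yields several calls executed in order, each able to use earlier results. The three functions, the shared `context` dictionary, and the hard-coded `plan` are hypothetical; they stand in for the tool calls a model might emit and for whatever billing, email, and calendar systems an organization actually uses.

```python
from typing import Any, Callable

Context = dict[str, Any]


def create_invoice(ctx: Context) -> Context:
    # Hypothetical billing step: derives an invoice id from the customer name.
    return {"invoice_id": f"INV-{ctx['customer']}-001"}


def send_email(ctx: Context) -> Context:
    # Hypothetical email step: attaches the invoice created earlier.
    return {"email_sent_to": ctx["customer"], "attachment": ctx["invoice_id"]}


def add_calendar_reminder(ctx: Context) -> Context:
    # Hypothetical calendar step: schedules a follow-up for the same invoice.
    return {"reminder": f"Follow up on {ctx['invoice_id']} in 30 days"}


WORKFLOW_FUNCTIONS: dict[str, Callable[[Context], Context]] = {
    "create_invoice": create_invoice,
    "send_email": send_email,
    "add_calendar_reminder": add_calendar_reminder,
}


def run_workflow(plan: list[str], context: Context) -> Context:
    """Execute the planned calls in order, merging each result into a shared context."""
    for name in plan:
        result = WORKFLOW_FUNCTIONS[name](context)
        print(f"{name} -> {result}")
        context = {**context, **result}
    return context


# Stand-in for the sequence of calls a model might emit for "Bill Acme and remind me to follow up".
plan = ["create_invoice", "send_email", "add_calendar_reminder"]
final = run_workflow(plan, {"customer": "acme", "amount": 1200})
```

Steps with no dependency on one another could also run concurrently; this sketch keeps them sequential because each later step needs the invoice id.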

Comparative Analysis

MCP offers a comprehensive protocol suitable for extensive and complex integrations, particularly valuable in enterprise environments that require broad interoperability, robust security, and a scalable architecture. In contrast, Function Calling offers a simpler and more direct interaction method, suitable for applications that require rapid responses, task-specific operations, and straightforward implementations.

While MCP’s architecture involves higher initial setup complexity, including extensive infrastructure management, it ultimately provides greater security and scalability benefits. Conversely, Function Calling’s simplicity allows for faster integration, making it ideal for applications with limited scope or specific, task-oriented functionalities. From a security standpoint, MCP inherently incorporates stringent protections suitable for high-risk environments. Function Calling, though simpler, necessitates careful external management of security measures. Regarding scalability, MCP’s sophisticated asynchronous mechanisms efficiently handle large-scale, concurrent interactions, making it optimal for expansive, enterprise-grade solutions. Function Calling is effective in scalable contexts but requires careful management to avoid complexity as the number of functions increases.
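At the level of a single tool description, the two approaches look similar: an MCP server advertises each tool in its `tools/list` result with `name`, `description`, and `inputSchema`, while function-calling APIs generally expect a name, a description, and a parameter schema. This Python sketch maps one onto the other, as a host bridging the two might do; the `query_orders` tool is hypothetical, and the target field name `parameters` is an assumption, since each model provider uses its own variant.

```python
from typing import Any

# A tools/list result as an MCP server might return it (the tool itself is hypothetical).
mcp_tools_list_result = {
    "tools": [{
        "name": "query_orders",
        "description": "Look up orders for a customer.",
        "inputSchema": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
    }]
}


def to_function_definitions(result: dict[str, Any]) -> list[dict[str, Any]]:
    """Map MCP tool descriptors onto a generic function-calling definition format."""
    return [
        {
            "name": t["name"],
            "description": t.get("description", ""),
            "parameters": t["inputSchema"],
        }
        for t in result["tools"]
    ]


definitions = to_function_definitions(mcp_tools_list_result)
print(definitions[0]["name"], definitions[0]["parameters"]["required"])
```

Because the mapping is nearly one-to-one, the practical differences lie less in how a single tool is described than in the surrounding infrastructure: discovery, transport, and security.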

| Criterion | Model Context Protocol (MCP) | Function Calling |
| --- | --- | --- |
| Architecture | Complex client-server model | Simple direct function invocation |
| Implementation | Requires extensive setup and infrastructure | Quick and straightforward implementation |
| Security | Inherent, robust security measures | Relies on external security management |
| Scalability | Highly scalable, suited for extensive interactions | Scalable but complex with many functions |
| Flexibility | Broad interoperability for complex systems | Highly flexible for modular task execution |
| Use Case Suitability | Large-scale enterprise environments | Task-specific, dynamic interaction scenarios |

In conclusion, both MCP and Function Calling serve critical roles in enhancing LLM capabilities by providing structured pathways for external interactions. Organizations must evaluate their specific needs, considering factors such as complexity, security requirements, scalability needs, and resource availability, to determine the appropriate integration strategy. MCP is best suited to robust, complex applications within secure enterprise environments, whereas Function Calling excels in straightforward, dynamic task execution scenarios. Ultimately, the thoughtful alignment of these methodologies with organizational objectives ensures optimal utilization of AI resources, promoting efficiency and innovation.



The post Model Context Protocol (MCP) vs Function Calling: A Deep Dive into AI Integration Architectures appeared first on MarkTechPost.


[Register Now] miniCON Virtual Conference on AGENTIC AI: FREE REGISTRATION + Certificate of Attendance + 4 Hour Short Event (May 21, 9 am- 1 pm PST) + Hands on Workshop
