The domain of LLMs has rapidly evolved to include tools that let these models integrate external knowledge into their reasoning processes. A significant advancement in this direction is Retrieval-Augmented Generation (RAG), which allows models to query databases and search engines for up-to-date or niche information not captured during training. RAG improves performance in knowledge-intensive scenarios by combining LLM generation with real-time information retrieval. Yet, as tasks become more complex, especially those needing multi-step reasoning or highly specific knowledge, it becomes critical that LLMs interact intelligently with these retrieval systems, because that interaction determines whether they can handle ambiguous, evolving, or complex information needs.

A challenge in LLM-based systems that rely on retrieval mechanisms is the sensitivity to query quality. When an LLM generates an initial search query that fails to retrieve useful information, the system often lacks a robust strategy to recover from this failure. This leads to situations where the model either hallucinates an answer or terminates prematurely, yielding incorrect results. Current methods largely assume that a single good query will suffice, neglecting the scenario where persistence and retries are essential for uncovering the correct information. This limitation reduces the robustness of LLMs in complex tasks where understanding improves incrementally through trial, error, and refinement.

Various tools have been developed to enhance the interaction between LLMs and external retrieval systems. Techniques such as Process Reward Models (PRMs) and Process Explanation Models (PEMs) reward intermediate reasoning improvements, whereas DeepRetrieval employs reinforcement learning (RL) to optimize query formulation. These methods reward either the quality of reasoning or the final retrieval result. Iterative techniques, such as Self-Ask and IRCoT, enable multi-step reasoning by decomposing questions and retrieving information in an iterative manner. However, they lack mechanisms to reward models for persistence after a failed attempt. These systems generally do not encourage retrying or reformulating a failed query, which can be crucial for navigating ambiguous information landscapes.

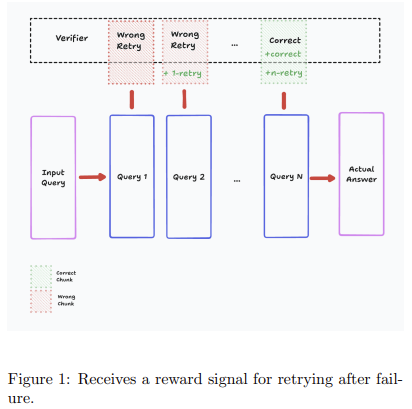

Researchers at Menlo Research introduced a new framework called ReZero (Retry-Zero). This method is designed specifically to teach large language models to persist in their information search by explicitly rewarding the act of retrying a query. Rather than only valuing the final answer, ReZero builds a learning environment where the model receives positive feedback when it recognizes a failed search and attempts again with a revised query. The reinforcement signal is applied during interactions with a search system, meaning that the model is rewarded not only for reaching the correct conclusion but also for demonstrating persistence along the way. The idea mirrors human behavior: when an initial search or strategy fails, a rational approach is to reformulate the plan and try again. ReZero operationalizes this idea by using a reward mechanism that reflects the value of retrying after encountering difficulty in information retrieval.
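To make the retry idea concrete, here is a minimal sketch of the search loop that the retry reward is meant to encourage. It is not ReZero's code: `search` is a hypothetical toy keyword retriever over an in-memory list, and the fixed list of queries stands in for the reformulations a trained model would write after seeing a failed search. All names and passages are illustrative assumptions.

```python
from typing import List, Optional, Tuple


def search(query: str, corpus: List[str]) -> List[str]:
    """Hypothetical toy retriever: passages containing every query term as a substring."""
    terms = query.lower().split()
    return [p for p in corpus if all(t in p.lower() for t in terms)]


def search_with_retries(
    queries: List[str], corpus: List[str], max_attempts: int = 3
) -> Tuple[Optional[str], int]:
    """Issue queries in order until one retrieves something.

    `queries` stands in for the reformulations a trained model would write
    after each failed search. Returns (first hit or None, searches issued).
    """
    attempts = 0
    for query in queries[:max_attempts]:
        attempts += 1
        hits = search(query, corpus)
        if hits:
            return hits[0], attempts  # success: stop retrying
    return None, attempts  # gave up after max_attempts searches


corpus = [
    "The capsule landed in the Pacific Ocean.",
    "The booster separated after two minutes.",
]
# The first query finds nothing; the reformulated second query succeeds.
print(search_with_retries(["splashdown site", "capsule landed ocean"], corpus))
# → ('The capsule landed in the Pacific Ocean.', 2)
```

In ReZero the point is that the model learns to write the second query itself after reading the failed result; the loop here only illustrates the control flow that such behavior produces.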

The team released two versions of their ReZero-trained model on Hugging Face: Menlo/ReZero-v0.1-llama-3.2-3b-it-grpo-250404 and its GGUF variant. Both are based on Llama-3.2-3B-Instruct, fine-tuned with GRPO to reinforce retry behavior in search tasks. Trained for about 1,000 steps on Apollo mission data using a single H200 GPU, the model reached a peak accuracy of 46.88% at step 250, which the team presents as evidence for the impact of the retry reward. The GGUF version is quantized for efficient deployment, making ReZero easier to use in both research and real-world search applications.

ReZero uses a reinforcement learning method known as Group Relative Policy Optimization (GRPO) to train the model. GRPO samples a group of responses for each prompt and scores each one relative to the rest of its group, so it does not need a separate critic (value) model, which streamlines training. The model is trained with a suite of reward functions: correctness of the final answer, adherence to the required format, retrieval of relevant content, and, crucially, the presence of a retry when needed. These rewards work in combination. For instance, the retry reward only applies if a valid final answer is eventually produced, so the model cannot collect reward by retrying indefinitely without resolving the question. In addition, a search diversity reward encourages semantically varied queries, while a search strategy reward assesses how effectively the model conducts sequential searches. Training is further hardened by injecting noise into the search results, forcing the model to adapt to less-than-ideal conditions; this is intended to improve generalization and to simulate real-world retrieval imperfections.
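The reward design and the group-relative step can be sketched as follows. This is an illustrative approximation under stated assumptions, not the authors' implementation: correctness is an exact string match, the retry reward is a binary bonus gated on a final answer being present, the semantic diversity reward is replaced by a lexical Jaccard-distance proxy, and all weights are equal. The relevant-chunk and search-strategy rewards and the noise injection are omitted. `group_relative_advantages` shows one common variant of the GRPO normalization (population standard deviation): each sampled completion's reward is compared with the mean and spread of its own group, which is what removes the need for a critic. All function names are hypothetical.

```python
import itertools
import statistics
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Episode:
    answer: Optional[str]  # final answer, or None if the model never produced one
    gold: str              # reference answer
    well_formatted: bool   # did the output follow the required format?
    queries: List[str]     # search queries issued, in order


def correctness_reward(ep: Episode) -> float:
    # Assumption: exact match after normalizing case and surrounding whitespace.
    if ep.answer is None:
        return 0.0
    return 1.0 if ep.answer.strip().lower() == ep.gold.strip().lower() else 0.0


def format_reward(ep: Episode) -> float:
    return 1.0 if ep.well_formatted else 0.0


def retry_reward(ep: Episode) -> float:
    # Gated: pays nothing unless the episode ends with a final answer,
    # so searching forever without resolving the question earns no bonus.
    if ep.answer is None:
        return 0.0
    return 1.0 if len(ep.queries) > 1 else 0.0


def _jaccard(a: str, b: str) -> float:
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def diversity_reward(ep: Episode) -> float:
    # Lexical stand-in for semantic diversity: mean pairwise (1 - Jaccard).
    pairs = list(itertools.combinations(ep.queries, 2))
    if not pairs:
        return 0.0
    return sum(1.0 - _jaccard(a, b) for a, b in pairs) / len(pairs)


def total_reward(ep: Episode) -> float:
    # Equal weights are an illustrative assumption.
    return (correctness_reward(ep) + format_reward(ep)
            + retry_reward(ep) + diversity_reward(ep))


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    # Normalize each reward against its own group of sampled completions.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# A hypothetical group of three sampled episodes for the same question.
group = [
    Episode("Pacific Ocean", "Pacific Ocean", True, ["splashdown site", "capsule landed ocean"]),
    Episode(None, "Pacific Ocean", True, ["landing site", "landing ocean", "capsule"]),
    Episode("Atlantic", "Pacific Ocean", False, ["splashdown site"]),
]
rewards = [total_reward(ep) for ep in group]
print(rewards, group_relative_advantages(rewards))
```

The second episode retries and issues varied queries but never answers, so its retry bonus stays at zero; only the first episode, which retried and then answered correctly, receives it.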

The research team implemented ReZero on the Llama-3.2-3B-Instruct model and evaluated it on the Apollo 3 mission dataset. This dataset was split into 341 data chunks, with 32 reserved for testing. Training lasted approximately 1,000 steps (equivalent to three epochs) on a single NVIDIA H200 GPU. Two configurations were compared: a baseline with three reward functions (correctness, format, and exact-match chunk retrieval, "em chunk") and ReZero, which added a reward for retrying. The performance gap was substantial: ReZero reached a peak accuracy of 46.88% at step 250, whereas the baseline peaked at only 25.00% at step 350. ReZero also learned faster in the early stages of training. However, both models then declined sharply, reaching 0% accuracy by step 450 (ReZero) and step 700 (baseline). This collapse suggests overfitting or instability in extended RL runs and points to the need for refined training schedules or better-balanced rewards.
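The two peak accuracies are easier to interpret as counts. Assuming accuracy is measured over the 32 held-out items (the article reports the split but not the exact scoring protocol), 46.88% and 25.00% correspond to 15/32 and 8/32 correct, a difference of seven questions, and each question moves accuracy by 3.125 percentage points. The sketch below checks that arithmetic; `implied_correct` is a hypothetical helper, not part of ReZero.

```python
TEST_ITEMS = 32  # held-out items reported for the Apollo 3 split


def implied_correct(accuracy_pct: float, total: int = TEST_ITEMS) -> int:
    """Return the integer k such that 100 * k / total reproduces a reported accuracy."""
    k = round(accuracy_pct / 100 * total)
    # Figures are reported to two decimals, so 100 * k / total must fall within
    # half a unit of the last digit (plus a tiny allowance for float error).
    if abs(100 * k / total - accuracy_pct) > 0.005 + 1e-9:
        raise ValueError(f"{accuracy_pct}% is not k/{total} for any integer k")
    return k


rezero = implied_correct(46.88)    # 15 of 32 (100 * 15 / 32 = 46.875)
baseline = implied_correct(25.00)  # 8 of 32
print(rezero, baseline, rezero - baseline)  # → 15 8 7
print(100 / TEST_ITEMS)  # → 3.125 percentage points per question
```

With a test set this small, a single run's peak can shift by several points from one or two questions, which is worth keeping in mind when comparing the two configurations.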

Several Key Takeaways from the ReZero Framework:

- Designed to enhance LLM search capabilities by rewarding retry behavior after a failed information retrieval attempt.

- Based on reinforcement learning using Group Relative Policy Optimization (GRPO).

- Includes rewards for correctness, format, retry actions, relevant information match, search strategy, and query diversity.

- The retry reward is only granted if the retries ultimately produce a valid final answer, which discourages excessive, unproductive querying.

- ReZero utilized the Apollo 3 dataset, which consisted of 341 chunks; 32 were reserved for evaluation.

- It achieved a peak accuracy of 46.88% with a retry reward, compared to 25.00% without it.

- Training ran for about 1,000 steps on a single NVIDIA H200 GPU with the Llama-3.2-3B-Instruct model.

- Both models experienced an accuracy collapse after reaching their respective peaks, indicating concerns about the stability of RL.

- Introduced the idea of persistence as a trainable behavior in RAG systems, distinct from simply refining single queries.

The post LLMs Can Now Learn to Try Again: Researchers from Menlo Introduce ReZero, a Reinforcement Learning Framework That Rewards Query Retrying to Improve Search-Based Reasoning in RAG Systems appeared first on MarkTechPost.

[Register Now] miniCON Virtual Conference on AGENTIC AI: FREE REGISTRATION + Certificate of Attendance + 4 Hour Short Event (May 21, 9 am- 1 pm PST) + Hands on Workshop
