Evaluating how well LLMs handle long contexts is essential, especially their ability to retrieve specific, relevant information embedded in lengthy inputs. Many recent LLMs, such as Gemini-1.5, GPT-4, Claude-3.5, and Qwen-2.5, have pushed the boundaries of context length while striving to maintain strong reasoning abilities. To assess such capabilities, benchmarks like ∞Bench, LongBench, and L-Eval have been developed. However, these often overlook the “Needle-in-a-Haystack” (NIAH) task, which challenges models to retrieve a few critical pieces of information from predominantly irrelevant content. Earlier NIAH benchmarks, such as RULER and Counting-Stars, offered synthetic and simplistic setups that use items like passwords or symbols as needles. NeedleBench improved on this by including more realistic, semantically meaningful needles and logical reasoning questions. Yet it still lacks tasks that involve retrieving sequential information, such as timestamps or procedural steps, and putting it in the correct order.

Efforts to enhance LLMs’ long-context capabilities have employed methods like RoPE, ALiBi, and memory-based techniques, along with architectural changes seen in models like Mamba and FLASHBUTTERFLY. Modern LLMs now support extensive contexts: Gemini-1.5 and Kimi can process as many as 1 to 2 million tokens. NIAH benchmarks assess how effectively models can extract relevant data from vast amounts of text, and NeedleBench further incorporates logical relationships to simulate real-world scenarios. On the evaluation side, natural language generation (NLG) output is typically assessed using LLM-derived metrics, prompt-based evaluation, fine-tuned evaluator models, or human-LLM collaboration. While prompting alone often underperforms, fine-tuning and human-in-the-loop methods can greatly improve evaluation accuracy and reliability.

Researchers from Tencent YouTu Lab have introduced Sequential-NIAH, a benchmark designed to assess how well LLMs retrieve sequential information items, referred to as needles, from long texts. The benchmark includes synthetic, real, and open-domain QA needles embedded in contexts ranging from 8K to 128K tokens, totaling 14,000 samples. An evaluation model trained on synthetic data achieved 99.49% accuracy in judging the correctness and order of responses. However, in tests on six popular LLMs the best model reached only 63.15% accuracy, highlighting the difficulty of the task and the need for further advances in long-context comprehension.

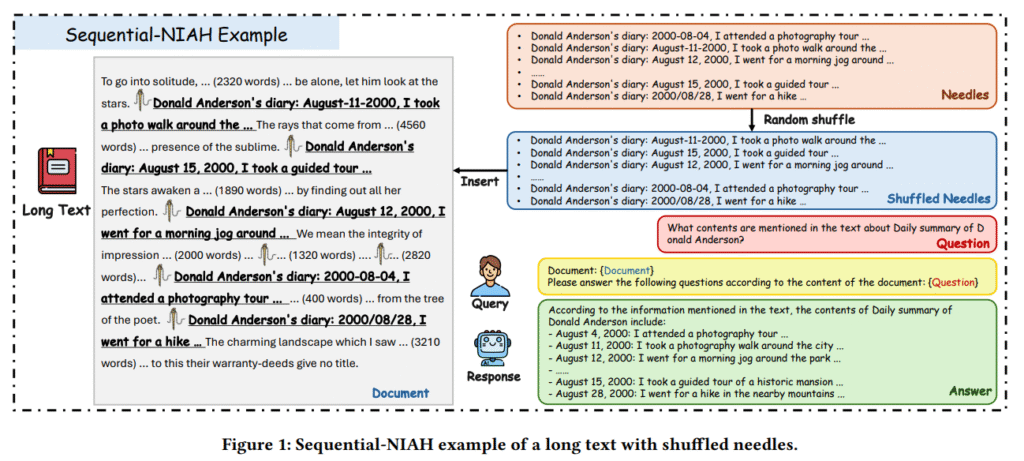

The Sequential-NIAH benchmark is designed to evaluate models on retrieving sequentially ordered information (needles) from long texts (haystacks). It uses three types of QA synthesis pipelines: synthetic (generated events in order), real (extracted from temporal knowledge graphs), and open-domain QA (logically ordered answers). These QA pairs are inserted into diverse, long texts sourced from the LongData Corpus, covering various domains. To construct samples, the long text is segmented, needles are randomly shuffled and embedded, and the task is framed using prompt templates. The final dataset comprises 14,000 samples, split across training, development, and test sets, in both English and Chinese.
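The construction steps described above (segment the long text, shuffle the needles, embed them, wrap the result in a prompt) can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the function name `build_sample`, the sentence-boundary segmentation, the prompt wording, and the returned dictionary keys are all assumptions made for illustration.

```python
import random
import re


def build_sample(haystack: str, needles: list[str], question: str, seed: int = 0) -> dict:
    """Embed shuffled needles between segments of a long text (illustrative only)."""
    rng = random.Random(seed)

    # Assumption: segment the haystack at sentence boundaries.
    segments = [s for s in re.split(r"(?<=\.)\s+", haystack.strip()) if s]
    if len(needles) > len(segments) + 1:
        raise ValueError("not enough insertion slots for the needles")

    # Shuffle a copy so the order in the text differs from the true order.
    shuffled = needles[:]
    rng.shuffle(shuffled)

    # Choose distinct gaps between segments (including both ends) for the needles.
    slots = rng.sample(range(len(segments) + 1), k=len(shuffled))
    needle_at = dict(zip(slots, shuffled))

    pieces = []
    for i in range(len(segments) + 1):
        if i in needle_at:
            pieces.append(needle_at[i])
        if i < len(segments):
            pieces.append(segments[i])
    context = " ".join(pieces)

    # Hypothetical prompt template; the benchmark's real templates are not shown here.
    prompt = f"{context}\n\nQuestion: {question}\nAnswer with the items in their correct order."
    return {"context": context, "prompt": prompt, "answer": list(needles)}
```

The reference answer keeps the needles in their true order even though they appear shuffled in the context, which is what makes the task a test of ordering as well as retrieval.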

The evaluation model was compared with Claude-3.5, GPT-4o, and other LLMs used as judges on 1,960 samples, where it achieved 99.49% accuracy. This outperforms GPT-4o (96.07%) and Claude-3.5 (87.09%) by significant margins. In subsequent benchmark tests on 2,000 samples, Gemini-1.5 outperformed the other models with an accuracy of 63.15%, while GPT-4o-mini and GPT-4o performed poorly. Performance varied with text length, number of needles, QA synthesis pipeline, and language, with Gemini-1.5 maintaining stable results. A noise analysis revealed that minor perturbations had a negligible impact on accuracy, but larger shifts in needle positions reduced model consistency, particularly for Qwen-2.5 and LLaMA-3.3.
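To make concrete what "correct" means when both content and order count, here is a deliberately simplified Python sketch. The benchmark's actual judge is a trained evaluation model, not a string comparison; the exact-match rule, the `is_correct` and `accuracy` helpers, and the normalization step below are hypothetical stand-ins chosen only to show the scoring idea.

```python
def _normalize(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting differences are ignored.
    return " ".join(text.lower().split())


def is_correct(predicted: list[str], reference: list[str]) -> bool:
    """A response counts only if every needle is present and in the reference order."""
    return [_normalize(p) for p in predicted] == [_normalize(r) for r in reference]


def accuracy(predictions: list[list[str]], references: list[list[str]]) -> float:
    """Fraction of samples judged correct under the rule above."""
    if not references:
        return 0.0
    hits = sum(is_correct(p, r) for p, r in zip(predictions, references))
    return hits / len(references)
```

Under this rule, a response that retrieves every needle but lists them in the wrong order scores the same as one that misses a needle.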

In conclusion, the Sequential-NIAH benchmark assesses LLMs on their ability to extract sequential information from lengthy texts (up to 128K tokens). It includes synthetic, real, and open-domain question-answering pipelines, with 14,000 samples for training, development, and testing. None of the popular models tested, including Claude, GPT-4o, Gemini, LLaMA, and Qwen, achieved high accuracy; the best reached 63.15%. An evaluation model trained on synthetic data achieved 99.49% accuracy on the test data. The benchmark also highlights the challenges posed by longer contexts and larger needle counts, and it is validated through noise robustness tests, making it valuable for advancing LLM research.


The post Sequential-NIAH: A Benchmark for Evaluating LLMs in Extracting Sequential Information from Long Texts appeared first on MarkTechPost.


