Transformers have revolutionized sequence modeling by introducing an architecture that handles long-range dependencies efficiently without relying on recurrence. Their ability to process input tokens simultaneously, while utilizing self-attention mechanisms, enables them to achieve impressive performance in natural language tasks. However, despite their dominance, some of the essential features found in recurrent neural networks, particularly the ability to forget irrelevant past information, are not natively present in standard Transformer models. This has led researchers to explore hybrid approaches that combine the best aspects of both architectures. The growing body of work on linear attention and gated recurrent designs has prompted interest in how such mechanisms can be meaningfully integrated into the Transformer paradigm to enhance its adaptability and precision in processing context-sensitive sequences.

A key challenge in sequential modeling is dynamically controlling memory. Standard attention-based models, such as the Transformer, process and store all input information uniformly, regardless of its relevance over time. This approach can be suboptimal when recent inputs carry more significance for a task, or when older inputs introduce noise. Traditional recurrent models address this with mechanisms such as forget gates, which allow them to modulate memory retention. However, these models struggle to maintain performance over extended sequences because of their fixed-size hidden states. The Transformer, while powerful, lacks a native method for discarding less useful past information in a context-sensitive manner. As a result, tasks that demand selective memory can suffer, especially when input lengths grow substantially and noise accumulates.

To address memory challenges, some strategies have introduced static positional biases into attention mechanisms. For instance, ALiBi adds predefined slopes to attention logits to simulate a form of recency weighting. However, such methods lack adaptability, as they do not consider the content of the input when deciding what to retain. Other efforts, such as Mamba-2 and GLA, implement gating within linear attention frameworks but often sacrifice normalization, a key aspect of Transformer accuracy. Also, these models tend to deviate significantly from the Transformer structure, making them less compatible with Transformer-based optimizations and pretraining paradigms. Thus, a gap remains for an approach that can dynamically forget in a learnable and efficient manner while preserving the Transformer’s computational strengths.

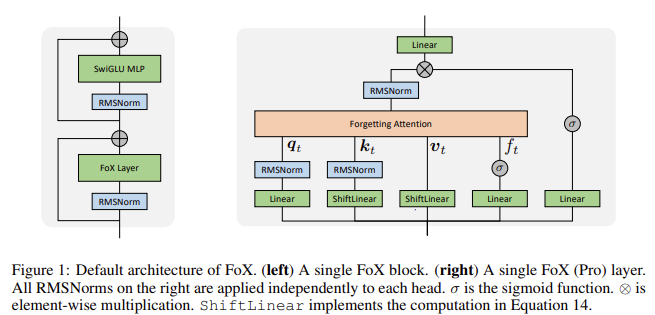

Researchers from Mila & Universite de Montreal and MakerMaker AI proposed a novel architecture called the Forgetting Transformer (FoX). This model introduces a mechanism known as Forgetting Attention, which inserts a scalar forget gate into the softmax attention process. Unlike the gating used in recurrent models, this modification is fully compatible with parallel computation, and it avoids the need for positional embeddings. The forget gate adjusts the raw attention scores based on the data itself, allowing FoX to down-weight less relevant past inputs. Importantly, the model remains compatible with the efficient FlashAttention algorithm, which keeps deployment overhead minimal. Two architectural variants were tested: FoX, based on LLaMA, and FoX (Pro), which incorporates normalization techniques and token-shifting mechanisms derived from recent recurrent models.

Technically, the model computes a forget gate value for each timestep by applying a sigmoid activation to a learned linear transformation of the input. These scalar gate values, each between 0 and 1, are then used to bias the attention logits: the logarithms of the gate values lying between a key position and a query position are summed, and that sum is added to the corresponding logit before the softmax. Because the sum can be obtained as a difference of cumulative sums of the log forget values, the attention weights can be adjusted in a hardware-efficient manner without instantiating large matrices. Multi-head attention support is retained, with each head maintaining independent forget gate parameters. The Pro variant introduces output normalization and output gates, along with a key-value shift mechanism that mixes current and previous tokens in a learnable manner. These adjustments further refine context sensitivity and model flexibility without significantly increasing the number of parameters.
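To make the gating step concrete, here is a minimal single-head sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the names `forgetting_attention`, `w_f`, and `b_f` are hypothetical, the bias is assumed to be the sum of log gate values between key and query, and the loop computes each row directly instead of fusing the bias into a FlashAttention-style kernel.

```python
import math

def log_sigmoid(z):
    # Numerically stable log(sigmoid(z)).
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def forgetting_attention(q, k, v, x, w_f, b_f):
    """Causal single-head attention with a scalar forget gate per timestep.

    q, k, v: lists of T vectors (queries, keys, values).
    x:       list of T input vectors used to compute the gate.
    w_f, b_f: hypothetical gate weight vector and bias (assumed names).
    """
    T, d = len(q), len(q[0])

    # log f_t, where f_t = sigmoid(w_f . x_t + b_f) lies in (0, 1).
    log_f = [log_sigmoid(sum(w * xi for w, xi in zip(w_f, x_t)) + b_f)
             for x_t in x]

    # Cumulative sum c_t = log f_1 + ... + log f_t.
    c, running = [], 0.0
    for lf in log_f:
        running += lf
        c.append(running)

    out = []
    for i in range(T):
        # Logit for key j <= i: scaled dot product plus the decay bias
        # c[i] - c[j], i.e. the sum of log f over positions j+1 .. i.
        logits = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  + (c[i] - c[j]) for j in range(i + 1)]
        m = max(logits)                       # subtract max for stability
        w = [math.exp(l - m) for l in logits]
        z = sum(w)
        out.append([sum(w[j] / z * v[j][n] for j in range(i + 1))
                    for n in range(len(v[0]))])
    return out
```

With a large positive gate bias every gate is close to 1, the decay bias vanishes, and the function behaves like ordinary causal softmax attention. With a strongly negative bias each position attends almost entirely to itself, which is the "forgetting" extreme.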

In a long-context language modeling task using the LongCrawl64 dataset (a 48-billion-token subset of RedPajama-v2), FoX consistently surpassed both standard Transformer baselines and leading recurrent models. Per-token loss metrics showed a sharper decline for FoX across token positions, indicating better context utilization. At position 64,000, FoX (Pro) achieved significantly lower loss values than Transformer (Pro) and LLaMA variants. In addition, perplexity evaluations showed that FoX maintains robust accuracy across increasing validation context lengths, with performance degrading less sharply beyond the training limit of 16,384 tokens. Competing models, such as Mamba-2 and DeltaNet, showed earlier plateaus, highlighting FoX’s superior extrapolation capabilities. The models had 760 million parameters and were trained using the TikToken tokenizer for GPT-2, with extensive tuning of learning rates and head dimensions. FoX preferred higher learning rates and smaller head dimensions, which the article reads as a sign of architectural resilience and adaptability.
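The two metrics in this paragraph measure different things, and a small sketch may help separate them. The code below assumes the usual definitions: per-token loss L(i) is the negative log-likelihood at position i averaged over validation sequences, and perplexity P(l) is the exponential of the mean of L over the first l positions. The function names are hypothetical and the numbers in the usage note are made up for illustration only.

```python
import math

def per_token_loss(losses):
    """losses[n][i] is the loss of token i in sequence n.

    Returns L, where L[i] is the loss at position i averaged over sequences.
    """
    n_seq = len(losses)
    length = len(losses[0])
    return [sum(seq[i] for seq in losses) / n_seq for i in range(length)]

def perplexity_over_context(L):
    """Returns P, where P[l-1] = exp(mean of L[0..l-1]) for l = 1..len(L)."""
    out, running = [], 0.0
    for l, loss in enumerate(L, start=1):
        running += loss
        out.append(math.exp(running / l))
    return out
```

For example, `per_token_loss([[2.0, 1.0], [4.0, 1.0]])` averages the two sequences position by position. A curve of L(i) that keeps falling as i grows indicates that later tokens benefit from the longer context, while P(l) summarizes quality over an entire context of length l.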

The researchers emphasized that Forgetting Attention retains the core benefits of the Transformer while overcoming its limitations regarding selective memory. They demonstrated that the forget gate introduces a data-driven recency bias that strengthens performance in both short and long sequences. Additionally, the implementation incurs minimal computational cost and requires no additional memory overhead, thanks to its compatibility with FlashAttention. Notably, Forgetting Attention also generalizes static biases, such as ALiBi, by introducing learnable gates, providing evidence that dynamic biasing is significantly more effective. FoX models also matched or exceeded standard Transformer performance on downstream tasks, with the Pro variant showing consistent superiority, especially in tasks that reward adaptability across contexts.
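The claim that Forgetting Attention generalizes ALiBi can be checked with a few lines of arithmetic. The sketch below assumes the decay bias between query i and key j is the sum of log gate values over positions j+1 to i. If every gate takes the same constant value, that sum is linear in the distance i - j, which is exactly the shape of an ALiBi bias with slope equal to minus the log of the gate. The function names are hypothetical.

```python
import math

def forget_bias(log_f):
    """Causal bias matrix D[i][j] = sum of log_f over positions j+1 .. i."""
    T = len(log_f)
    c, running = [], 0.0
    for lf in log_f:
        running += lf
        c.append(running)
    # Future positions (j > i) are masked with -inf.
    return [[c[i] - c[j] if j <= i else -math.inf for j in range(T)]
            for i in range(T)]

def alibi_bias(T, slope):
    """ALiBi-style causal bias: -slope * (i - j) for j <= i."""
    return [[-slope * (i - j) if j <= i else -math.inf for j in range(T)]
            for i in range(T)]

# A constant gate f = exp(-0.25) at every timestep gives log f = -0.25,
# which reproduces an ALiBi bias with slope 0.25.
constant_gate = forget_bias([-0.25] * 5)
static_bias = alibi_bias(5, 0.25)
```

When the gate values vary with the input, the rows of `forget_bias` are no longer linear in distance, which is the data-dependent behavior that a fixed slope cannot express.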

This work demonstrates that the effective integration of dynamic memory mechanisms into Transformer architectures is not only feasible but also beneficial across a wide range of benchmarks. The introduction of a forget gate within the attention computation allows models to discard irrelevant information in a learned manner, substantially improving focus and generalization. The compatibility with high-performance implementations, such as FlashAttention, ensures that such improvements come without trade-offs in efficiency.

Key takeaways from the research on FoX include:

- FoX introduces Forgetting Attention, enhancing standard softmax attention with learnable forget gates.

- Two architectural variants were tested: FoX (LLaMA) and FoX (Pro), with the latter incorporating additional normalization and gating layers.

- FoX models trained on 48B tokens with 760M parameters significantly outperformed Transformers in long-context modeling.

- Per-token loss L(i) and perplexity P(l) showed that FoX kept loss low out to token position 64,000 and degraded less sharply than competing models beyond the 16,384-token training length.

- Forgetting Attention is a generalization of ALiBi, offering dynamic, data-dependent gating over fixed biases.

- The Pro architecture further improved results with minimal overhead by using output normalization and token shift mechanisms.

- Hardware compatibility was preserved through modifications to FlashAttention, enabling practical deployment at scale.


The post Mila & Universite de Montreal Researchers Introduce the Forgetting Transformer (FoX) to Boost Long-Context Language Modeling without Sacrificing Efficiency appeared first on MarkTechPost.
