Large language models (LLMs) have gained significant traction in reasoning tasks, including mathematics, logic, planning, and coding. However, a critical challenge emerges when applying these models to real-world scenarios. Current implementations typically assume that all necessary information is provided upfront in well-specified tasks, whereas reality often presents incomplete or ambiguous situations. Users frequently omit crucial details when formulating math problems, and autonomous systems like robots must function in environments with partial observability. This mismatch between idealised complete-information settings and incomplete real-world problems requires LLMs to develop proactive information-gathering capabilities. Recognising information gaps and generating relevant clarifying questions is an essential but underdeveloped capability that LLMs need in order to navigate ambiguous scenarios and provide accurate solutions in practical applications.

Various approaches have attempted to address the challenge of information gathering in ambiguous scenarios. Active learning strategies acquire sequential data through methods like Bayesian optimisation, reinforcement learning, and robot planning with partially observable states. Research on ambiguity in natural language has explored semantic uncertainties, factual question-answering, task-oriented dialogues, and personalised preferences. Question-asking methods for LLMs include direct prompting techniques, information gain computation, and multi-stage clarification frameworks. However, most existing benchmarks focus on subjective tasks where multiple valid clarifying questions exist, making objective evaluation difficult. These approaches address ambiguous or knowledge-based tasks rather than underspecified reasoning problems, where an objectively correct question is determinable.

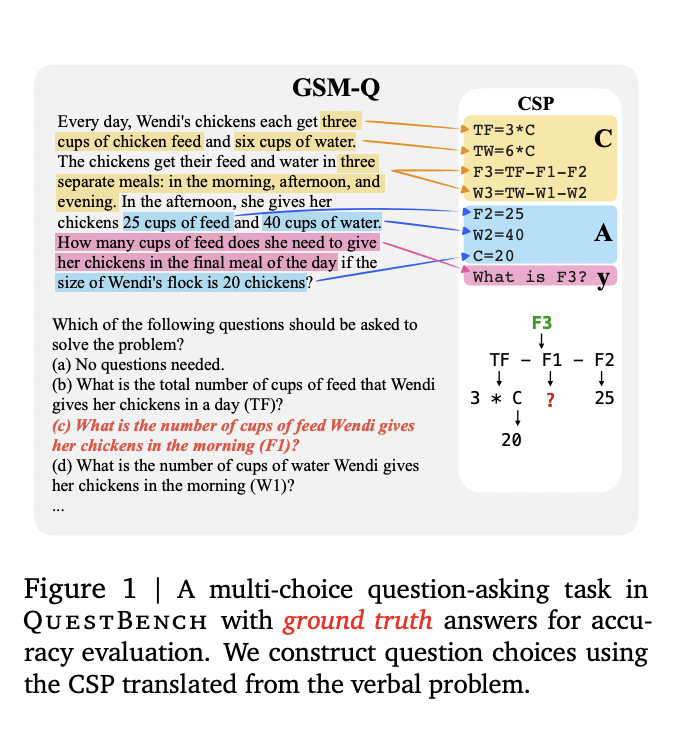

QuestBench presents a robust approach to evaluating LLMs’ ability to identify and acquire missing information in reasoning tasks. The methodology formalises underspecified problems as Constraint Satisfaction Problems (CSPs) where a target variable cannot be determined without additional information. Unlike semantic ambiguity, where multiple interpretations exist but each yields a solvable answer, underspecification renders problems unsolvable without supplementary data. QuestBench specifically focuses on “1-sufficient CSPs” – problems requiring knowledge of just one unknown variable’s value to solve for the target variable. The benchmark comprises three distinct domains: Logic-Q (logical reasoning tasks), Planning-Q (blocks world planning problems with partially observed initial states), and GSM-Q/GSME-Q (grade-school math problems in verbal and equation forms). The framework strategically categorises problems along four axes of difficulty: number of variables, number of constraints, search depth required, and expected guesses needed by brute-force search. This classification offers insights into LLMs’ reasoning strategies and performance limitations.

QuestBench employs a formal Constraint Satisfaction Problem framework to precisely identify and evaluate information gaps in reasoning tasks. A CSP is defined as a tuple ⟨X, D, C, A, y⟩ where X represents variables, D denotes their domains, C encompasses constraints, A consists of variable assignments, and y is the target variable to solve for. The framework introduces the “Known” predicate, which indicates when a variable’s value is determinable either through direct assignment or through derivation from existing constraints. A CSP is classified as underspecified when the target variable y cannot be determined from the available information. The methodology focuses specifically on “1-sufficient CSPs”, where knowing the value of just one additional variable is sufficient to solve for the target.
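To make the “Known” predicate and the 1-sufficient condition concrete, here is a minimal Python sketch. It is not the benchmark's implementation. It assumes, as a simplification, that each constraint is a one-directional derivation rule (the output becomes known once all inputs are known) and it ignores the domains D. The names `Constraint`, `known_closure`, and `sufficient_variables` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Constraint:
    """Hypothetical rule: `output` is derivable once every variable in `inputs` is known."""
    inputs: frozenset
    output: str


def known_closure(assigned, constraints):
    """Return all variables that are Known: assigned directly or derivable from constraints."""
    known = set(assigned)
    changed = True
    while changed:  # repeat until no rule adds a new variable
        changed = False
        for c in constraints:
            if c.output not in known and c.inputs <= known:
                known.add(c.output)
                changed = True
    return known


def sufficient_variables(variables, assigned, constraints, target):
    """Return the unassigned variables whose value alone would make `target` Known."""
    if target in known_closure(assigned, constraints):
        return set()  # the problem is already well-specified
    return {
        x
        for x in variables
        if x != target
        and x not in assigned
        and target in known_closure(set(assigned) | {x}, constraints)
    }


# Toy problem: c is derived from (a, b), and the target y is derived from c.
rules = [
    Constraint(frozenset({"a", "b"}), "c"),
    Constraint(frozenset({"c"}), "y"),
]
candidates = sufficient_variables({"a", "b", "c", "y"}, {"a"}, rules, "y")
print(candidates)
```

In this toy problem only `a` is assigned, so `y` is not Known and the problem is underspecified. Learning either `b` or `c` would make `y` derivable, so under this sketch's definition the problem is 1-sufficient.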

The benchmark measures model performance along four difficulty axes that correspond to algorithmic complexity: total number of variables (|X|), total number of constraints (|C|), depth of backwards search tree (d), and expected number of random guesses needed (𝔼BF). These metrics provide quantitative measures of problem complexity and help differentiate between semantic ambiguity (multiple valid interpretations) and underspecification (missing information). For each task, models must identify the single sufficient variable that, when known, enables solving for the target variable, requiring both recognition of information gaps and strategic reasoning about constraint relationships.
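Two of these axes can be illustrated with a short Python sketch. The paper's exact definitions of d and 𝔼BF may differ. This version assumes that brute-force search guesses candidate variables uniformly at random without replacement, and that depth counts constraint hops from the target back to the nearest unassigned variable with no dependencies. The function names and the `parents` mapping are hypothetical.

```python
from collections import deque
from fractions import Fraction


def expected_random_guesses(num_candidates, num_sufficient):
    """Expected number of draws, without replacement, until the first sufficient variable.

    Uses the standard result (n + 1) / (k + 1) for n candidates of which k are sufficient.
    """
    if not 0 < num_sufficient <= num_candidates:
        raise ValueError("need 0 < num_sufficient <= num_candidates")
    return Fraction(num_candidates + 1, num_sufficient + 1)


def backward_depth(target, parents, assigned):
    """Breadth-first search backward from `target`.

    `parents` maps each variable to the variables it is derived from.
    Returns the number of hops to the nearest unassigned variable that has
    no parents, or None if every such variable is already assigned.
    """
    queue = deque([(target, 0)])
    seen = {target}
    while queue:
        var, depth = queue.popleft()
        deps = parents.get(var, [])
        if not deps and var not in assigned:
            return depth  # nearest missing input found
        for dep in deps:
            if dep not in seen:
                seen.add(dep)
                queue.append((dep, depth + 1))
    return None


# y depends on c, and c depends on a and b; only a has been assigned.
print(expected_random_guesses(3, 1))
print(backward_depth("y", {"y": ["c"], "c": ["a", "b"]}, {"a"}))
```

With three candidate variables and one sufficient variable, the expected number of random guesses under these assumptions is 2, and the missing input `b` sits two hops behind the target.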

Experimental evaluation of QuestBench reveals varying capabilities among leading large language models in information-gathering tasks. GPT-4o, GPT-4-o1 Preview, Claude 3.5 Sonnet, Gemini 1.5 Pro/Flash, Gemini 2.0 Flash Thinking Experimental, and open-sourced Gemma models were tested across zero-shot, chain-of-thought, and four-shot settings. Tests were conducted on representative subsets of 288 GSM-Q and 151 GSME-Q tasks between June 2024 and March 2025. Performance analysis along the difficulty axes demonstrates that models struggle most with problems featuring high search depths and complex constraint relationships. Chain-of-thought prompting generally improved performance across all models, suggesting that explicit reasoning pathways help identify information gaps. Among the evaluated models, Gemini 2.0 Flash Thinking Experimental achieved the highest accuracy, particularly on planning tasks, while open-source models showed competitive performance on logical reasoning tasks but struggled with complex math problems requiring deeper search.

QuestBench provides a framework for evaluating LLMs’ ability to identify underspecified information and generate appropriate clarifying questions in reasoning tasks. Current state-of-the-art models demonstrate reasonable performance on simple algebra problems but struggle significantly with complex logic and planning tasks. Performance deteriorates as problem complexity increases along key dimensions like search depth and expected number of brute-force guesses. These findings highlight that while reasoning ability is necessary for effective question-asking, it alone may not be sufficient. Significant opportunities remain for developing LLMs that can better recognise information gaps and request clarification when operating under uncertainty.


The post Google DeepMind Research Introduces QuestBench: Evaluating LLMs’ Ability to Identify Missing Information in Reasoning Tasks appeared first on MarkTechPost.


