We are excited to announce the availability of new features in AWS Database Migration Service (AWS DMS) replication engine version 3.5.4. This release includes two major enhancements: data masking for enhanced security and improved data validation performance.

In this post, we take a deep dive into these two features. Refer to the release notes for a list of all the new features available in this version.

Data masking for enhanced security

Our customers asked for a data masking capability to improve data security during migrations. It lets you transform sensitive data at the column level during migration and helps you comply with data protection regulations such as GDPR. With AWS DMS, you can now create copies of data that redact, at the column level, the information you need to protect.

One of the biggest concerns for our customers during database migrations is the secure handling of sensitive information, such as account numbers, phone numbers, and email addresses. With AWS DMS 3.5.4, we have implemented three flexible data transformation rules:

- Digits Mask

- Digits Randomize

- Hashing Mask
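To make the three rules concrete, the following is a minimal Python sketch of what each one does to a single column value. It is a local illustration only, not the AWS DMS implementation: the function names are hypothetical, and the choice of SHA-256 for the hash is an assumption based on our reading of the AWS DMS documentation.

```python
import hashlib
import random
import string
from typing import Optional


def digits_mask(value: str, mask_char: str = "#") -> str:
    """Digits Mask: replace every ASCII digit with mask_char, keep other characters."""
    return "".join(mask_char if ch in string.digits else ch for ch in value)


def digits_randomize(value: str, rng: Optional[random.Random] = None) -> str:
    """Digits Randomize: replace every ASCII digit with a random digit."""
    rng = rng or random.Random()
    return "".join(
        rng.choice(string.digits) if ch in string.digits else ch for ch in value
    )


def hash_mask(value: str) -> str:
    """Hashing Mask: replace the value with a hex digest (SHA-256 assumed here)."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    print(digits_mask("ACC-1234-5678"))       # ACC-####-####
    print(digits_randomize("555-0100"))       # same shape, different digits
    print(hash_mask("jane.doe@example.com"))  # 64 hexadecimal characters
```

Note that a hex-encoded SHA-256 digest is always 64 characters long, so under this assumption the target column must be wide enough to hold it.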

To illustrate these transformation rules, we migrate a table called “EMPLOYEES” from an Amazon RDS for Oracle instance to an Amazon RDS for PostgreSQL instance. Complete the following steps:

- Use the following table DDL on your source (Oracle) instance to create the EMPLOYEES table:

- Insert a few records into the EMPLOYEES table.

- Create an AWS DMS task using the option “Migrate” or “Migrate and replicate”.

- Set up the AWS DMS task with the following table mapping rule JSON. We mask the column ACCOUNT_NUMBER with the character #, the column PHONE_NUMBER with random numbers, and the column EMAIL with a hash. We also use optional transformation rules to convert everything to lowercase.
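As a hedged sketch of what such a table mapping could look like, the following Python snippet builds a mapping document with one selection rule and three masking rules, then prints it as JSON. The schema name ADMIN, the rule IDs, and the rule names are hypothetical, and the rule-action values (data-masking-digits-mask, data-masking-digits-randomize, data-masking-hash-mask) follow our reading of the AWS DMS data masking documentation, so verify them before use. The optional lowercase conversion rules are omitted for brevity.

```python
import json
from typing import Optional

SCHEMA = "ADMIN"      # assumption: replace with your source schema name
TABLE = "EMPLOYEES"


def column_rule(rule_id: int, column: str, action: str,
                value: Optional[str] = None) -> dict:
    """Build one column-level transformation rule for the EMPLOYEES table."""
    rule = {
        "rule-type": "transformation",
        "rule-id": str(rule_id),
        "rule-name": f"{action}-{column.lower()}",  # hypothetical naming scheme
        "rule-target": "column",
        "object-locator": {
            "schema-name": SCHEMA,
            "table-name": TABLE,
            "column-name": column,
        },
        "rule-action": action,
    }
    if value is not None:
        rule["value"] = value  # only the digits mask takes a mask character
    return rule


table_mappings = {
    "rules": [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-employees",
            "object-locator": {"schema-name": SCHEMA, "table-name": TABLE},
            "rule-action": "include",
        },
        column_rule(2, "ACCOUNT_NUMBER", "data-masking-digits-mask", "#"),
        column_rule(3, "PHONE_NUMBER", "data-masking-digits-randomize"),
        column_rule(4, "EMAIL", "data-masking-hash-mask"),
    ]
}

print(json.dumps(table_mappings, indent=2))
```

You can paste the printed JSON into the table mappings editor on the AWS DMS console, or pass it as the TableMappings string when you create the task through the API.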

The following sample output shows the masked data on the PostgreSQL instance:

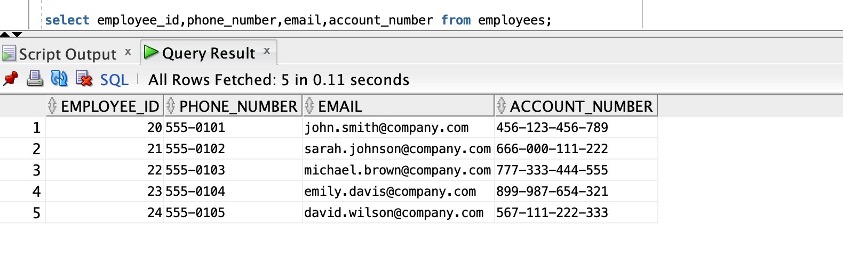

The following image shows the sample output on Oracle for comparison.

In the preceding example, we have shown how you can use the data masking capability to hide sensitive information. Refer to Using data masking to hide sensitive information for further information.

Enhanced data validation performance

Maintaining data integrity is crucial during any database migration, but it can often be a time-consuming and resource-intensive process. AWS DMS 3.5.4 addresses this challenge with the enhanced data validation feature, which uses innovative techniques like fast partition validation to streamline the validation process.

Some of the key benefits of enhanced data validation are:

- Redistribution of resource usage from the replication instance to the AWS DMS source and target endpoints

- Potential decrease of network usage

- Efficient for wide tables without LOB data types

The enhanced data validation feature is now available for specific AWS DMS migration paths, including Oracle to PostgreSQL, SQL Server to PostgreSQL, Oracle to Oracle, and SQL Server to SQL Server. To use this feature, make sure your environment meets the prerequisites.

You can confirm AWS DMS is using enhanced data validation by reviewing the Amazon CloudWatch logs, which will show messages like the following:

To quantify the performance improvements, we conducted benchmarking using HammerDB with the settings as shown in the following screenshot.

As a baseline, we created a full load and change data capture (CDC) task with validation disabled to migrate approximately 93 million records (15 GB) across nine tables from an Amazon Relational Database Service (Amazon RDS) for SQL Server instance to Amazon Aurora PostgreSQL-Compatible Edition.

We then ran two validation-only tasks: one on AWS DMS 3.5.3 and one on AWS DMS 3.5.4 using r6i.xlarge instances. To speed up validation, we increased PartitionSize to 100,000 and ThreadCount to 15:
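The following Python sketch shows how the validation portion of the task settings might look with these values. It is an illustration under assumptions, not the exact settings document used in the benchmark: only the ValidationSettings section is shown, and all other task settings are omitted.

```python
import json

# Validation-related task settings only; the rest of the task settings
# document is intentionally left out of this sketch.
validation_only_settings = {
    "ValidationSettings": {
        "EnableValidation": True,
        "ValidationOnly": True,     # run validation without migrating data
        "PartitionSize": 100000,    # value quoted in the benchmark description
        "ThreadCount": 15,          # value quoted in the benchmark description
    }
}

print(json.dumps(validation_only_settings, indent=2))
```

The printed JSON can be merged into the task settings on the AWS DMS console or passed as the ReplicationTaskSettings string when you create the task through the API.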

The following screenshots show resource consumption on the AWS DMS replication instance running on engine version 3.5.4.

The following screenshots show resource consumption on the AWS DMS replication instance running on engine version 3.5.3.

We can see a 91% reduction in TaskMemoryUsage for the validation-only task on AWS DMS 3.5.4 compared to AWS DMS 3.5.3, and a 95% reduction in CPU utilization of the underlying AWS DMS replication instance. If you want to run a separate validation-only task, you can use this feature to make more efficient use of the compute and memory of the AWS DMS replication instance.

Conclusion

In this post, we discussed the transformation rules for data masking and the enhanced data validation feature in AWS DMS 3.5.4. With data masking, you can help keep sensitive information protected throughout your database migration. With the enhanced data validation feature, you get the benefits of running validation with less resource consumption on the AWS DMS replication instance. Try these features and let us know in the comments section how they helped your use case.

About the authors

Suchindranath Hegde is a Senior Data Migration Specialist Solutions Architect at Amazon Web Services. He works with our customers to provide guidance and technical assistance on data migration to the AWS Cloud using AWS DMS.

Mahesh Kansara is a database engineering manager at Amazon Web Services. He works closely with development and engineering teams to improve the migration and replication service. He also works with our customers to provide guidance and technical assistance on various database and analytical projects, helping them improve the value of their solutions when using AWS.

Leonid Slepukhin is a Senior Database Engineer with the Database Migration Service (DMS) team at Amazon Web Services. He works on developing core features for AWS DMS and specializes in helping both internal and external customers resolve complex database migration and replication challenges. His focus areas include enhancing DMS capabilities and providing technical expertise to ensure successful database migrations to the AWS Cloud.

Sridhar Ramasubramanian is a database engineer with the AWS Database Migration Service team. He works on improving the DMS service to better suit the needs of AWS customers.
