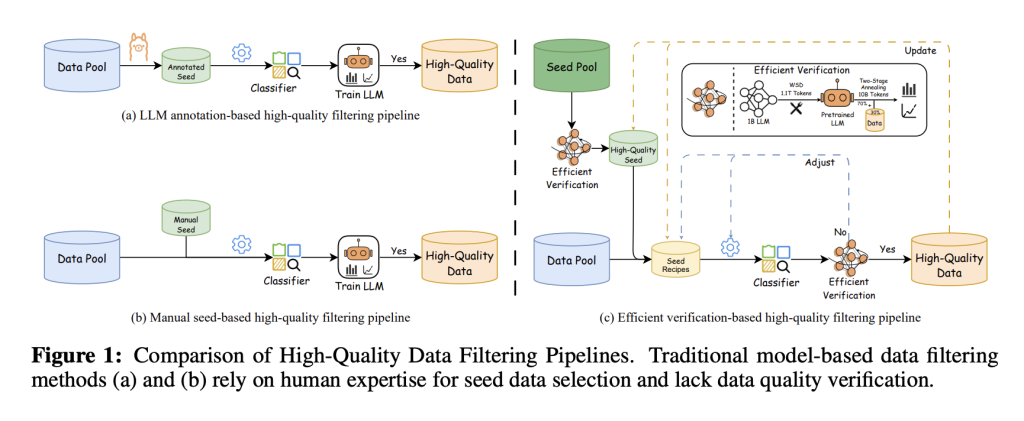

The quality of the data used to pretrain LLMs has become increasingly critical to their success. To build information-rich corpora, researchers have moved from heuristic filtering, such as rule-based noise removal and deduplication, to model-driven filtering, which uses neural classifiers to identify high-quality samples. Despite its benefits, model-driven filtering still faces two key issues: it lacks an efficient way to validate data quality promptly, and it often relies on manually curated seed datasets that introduce subjectivity. While early datasets like C4 and the Pile laid the groundwork for model development, more recent efforts such as RefinedWeb, Dolma, and DCLM have scaled to trillions of tokens. Model-driven filtering has gained traction in these newer corpora because it can refine massive datasets and improve LLM performance across downstream tasks.
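To make the heuristic stage concrete, here is a minimal sketch of rule-based noise removal followed by exact deduplication. The thresholds and the helper names (`passes_rules`, `normalize`, `heuristic_filter`) are hypothetical illustrations, not rules taken from C4, RefinedWeb, Dolma, or DCLM. Large-scale pipelines often use fuzzy near-duplicate detection such as MinHash; exact hashing of normalized text is used here only to keep the sketch short.

```python
import hashlib
import re

# Hypothetical thresholds, chosen only for illustration.
MIN_WORDS = 5
MAX_SYMBOL_RATIO = 0.3


def passes_rules(text: str) -> bool:
    """Rule-based noise removal: reject very short or symbol-heavy documents."""
    if len(text.split()) < MIN_WORDS:
        return False
    # Count characters that are neither alphanumeric nor whitespace.
    symbols = sum(1 for ch in text if not ch.isalnum() and not ch.isspace())
    return symbols / max(len(text), 1) <= MAX_SYMBOL_RATIO


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies hash equally."""
    return re.sub(r"\s+", " ", text.strip().lower())


def heuristic_filter(docs):
    """Apply the rules, then drop exact duplicates by SHA-256 of the normalized text."""
    seen = set()
    kept = []
    for doc in docs:
        if not passes_rules(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # an equivalent document was already kept
        seen.add(digest)
        kept.append(doc)
    return kept
```

For example, `heuristic_filter(["The cat sat on the mat today.", "the cat  sat on the mat TODAY.", "Short text"])` keeps only the first document: the second normalizes to the same text, and the third is too short. Model-driven filtering replaces hand-written rules like these with a learned quality score.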

Nevertheless, the effectiveness of model-driven filtering is limited by the high cost and inefficiency of current validation methods and by the absence of clear standards for selecting seed data. Recent datasets, such as FineWeb-edu and Ultra-FineWeb, have shown improved model performance by using multiple classifiers to cross-verify data quality. These datasets outperform earlier versions on benchmarks like MMLU, ARC, and C-Eval, indicating that refined filtering can improve both English and Chinese understanding. To further optimize this process, some studies propose prompting LLMs to evaluate data along multiple dimensions or using token-level perplexity scores. These approaches aim to lower computational overhead while improving data quality, ultimately enabling effective training with fewer tokens.
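Perplexity-based filtering scores each document by how predictable a reference language model finds it: perplexity is the exponential of the average negative log-probability per token. The sketch below assumes the per-token natural-log probabilities have already been computed by some reference model (that step is out of scope), and the `max_ppl` cutoff and function names are hypothetical. Keeping only low-perplexity documents is one simple policy; the studies mentioned above may use other rules.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(-(1/N) * sum of natural-log token probabilities)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)


def filter_by_perplexity(docs_with_logprobs, max_ppl):
    """Keep documents whose perplexity under the reference model is at most max_ppl.

    docs_with_logprobs is an iterable of (document, list_of_token_logprobs) pairs.
    """
    return [doc for doc, logprobs in docs_with_logprobs if perplexity(logprobs) <= max_ppl]
```

A document whose every token has probability 0.5 has perplexity 2, while one whose tokens each have probability 0.01 has perplexity 100, so a cutoff of 10 would keep the first and drop the second.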

Researchers from ModelBest Inc., Tsinghua University, and Soochow University developed an efficient data filtering pipeline to improve LLM training. They introduced a verification strategy that uses a nearly trained LLM to evaluate candidate data by measuring performance gains during the final training steps, which reduces computational cost compared with training a separate model from scratch for each candidate. A lightweight fastText-based classifier further improves filtering speed and accuracy. Applied to the FineWeb and Chinese FineWeb datasets, this method produced the Ultra-FineWeb dataset, containing about 1 trillion English tokens and 120 billion Chinese tokens. LLMs trained on Ultra-FineWeb showed notable performance gains, supporting the pipeline's effectiveness in improving data quality and training efficiency.
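The verification idea can be sketched as two short final-stage runs that start from the same nearly trained checkpoint: a baseline run on the usual data, and a run in which part of that data is replaced by the candidate data. Candidate data is accepted if benchmark scores improve. The article gives no mixing ratio, benchmark list, or acceptance threshold, so `candidate_ratio`, `min_gain`, and both helper names below are hypothetical. The training runs themselves are out of scope; the functions only build the data mix and compare the resulting scores.

```python
from statistics import mean


def build_final_stage_mix(base_docs, candidate_docs, candidate_ratio):
    """Replace a fraction of the final-stage data with candidate documents.

    candidate_ratio is a hypothetical knob; the total number of documents is preserved.
    """
    if not 0.0 <= candidate_ratio <= 1.0:
        raise ValueError("candidate_ratio must be in [0, 1]")
    total = len(base_docs)
    n_candidate = min(round(total * candidate_ratio), len(candidate_docs))
    return base_docs[: total - n_candidate] + candidate_docs[:n_candidate]


def verify_candidate(baseline_scores, candidate_scores, min_gain=0.0):
    """Accept candidate data if the mean benchmark score improves by more than min_gain.

    Both arguments map benchmark name -> score, measured after the baseline run and
    after the candidate-mixed run, respectively. Returns (accepted, gain).
    """
    shared = baseline_scores.keys() & candidate_scores.keys()
    if not shared:
        raise ValueError("no shared benchmarks to compare")
    gain = mean(candidate_scores[b] for b in shared) - mean(baseline_scores[b] for b in shared)
    return gain > min_gain, gain
```

The point of starting from a nearly trained checkpoint is that each comparison only costs the final few training steps rather than a full pretraining run, which is what makes evaluating many candidate seed pools affordable.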

The study outlines an efficient, high-quality data filtering pipeline that reduces computational cost while maintaining data integrity. It first applies the low-cost verification strategy to select reliable seed samples from a candidate pool, then uses those seeds to train a data classifier. Positive seeds are drawn from LLM annotations, curated datasets, textbooks, and synthesized content, while negatives come from diverse corpora. Rather than iterating repeatedly on classifier training, the approach concentrates on selecting high-quality seeds. A fastText-based classifier handles filtering at scale, offering competitive performance at significantly lower inference cost than LLM-based methods, and preprocessing steps keep the classifier's training input clean and balanced.
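fastText's supervised mode reads one example per line, with the label written as a `__label__` prefix before the text. The sketch below prepares such lines from positive and negative seed documents. The article does not describe the exact preprocessing, so the lowercasing and whitespace collapsing, the `pos`/`neg` label names, and balancing by random downsampling of the larger class are all assumptions.

```python
import random
import re


def preprocess(text: str) -> str:
    """Hypothetical cleanup: collapse all whitespace (including newlines) and lowercase.

    Newlines must go because fastText treats each line as one training example.
    """
    return re.sub(r"\s+", " ", text).strip().lower()


def build_fasttext_lines(positives, negatives, seed=0):
    """Balance the two classes by downsampling the larger one, then emit labeled lines."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    n = min(len(positives), len(negatives))
    pos = rng.sample(positives, n)
    neg = rng.sample(negatives, n)
    lines = [f"__label__pos {preprocess(t)}" for t in pos]
    lines += [f"__label__neg {preprocess(t)}" for t in neg]
    rng.shuffle(lines)  # avoid long runs of a single label in the training file
    return lines
```

Written to a file with one entry per line, this output matches the input format expected by `fasttext.train_supervised(input=path)` in the `fasttext` Python package; that training call is not exercised here. Because a fastText model is a shallow linear model over word and n-gram embeddings, scoring billions of documents with it is far cheaper than prompting an LLM per document, which is the trade-off the paragraph above describes.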

The models were trained with Megatron-LM using the MiniCPM-1.2B architecture on 100B tokens, and evaluated with Lighteval on English and Chinese benchmarks. Models trained on Ultra-FineWeb consistently outperformed those trained on FineWeb and FineWeb-edu, both individually and in mixed-language settings. Ultra-FineWeb-en achieved the highest average English score, while Ultra-FineWeb-zh improved performance on Chinese tasks. Ablation studies showed that Ultra-FineWeb maintains balanced token lengths and benefits from its efficient filtering strategies, highlighting its quality and its effectiveness in improving model performance.

In conclusion, the study presents Ultra-FineWeb, a high-quality bilingual dataset comprising about 1 trillion English tokens and 120 billion Chinese tokens. Built on FineWeb and Chinese FineWeb, it relies on a new, efficient data filtering pipeline featuring a lightweight fastText-based classifier and a low-cost verification strategy. The pipeline improves filtering accuracy, reduces reliance on manual seed selection, and delivers robust performance with minimal computational overhead. Experimental results show that models trained on Ultra-FineWeb consistently outperform those trained on earlier datasets across benchmarks. The methodology supports reproducibility and offers useful insights for optimizing data quality in future LLM training.


The post Researchers from Tsinghua and ModelBest Release Ultra-FineWeb: A Trillion-Token Dataset Enhancing LLM Accuracy Across Benchmarks appeared first on MarkTechPost.
