Reasoning tasks are a fundamental aspect of artificial intelligence, encompassing areas like commonsense understanding, mathematical problem-solving, and symbolic reasoning. These tasks often involve multiple steps of logical inference, which large language models (LLMs) attempt to mimic through structured approaches such as chain-of-thought (CoT) prompting. However, as LLMs grow in size and complexity, they tend to produce longer outputs across all tasks, regardless of difficulty, leading to significant inefficiencies. The field has been striving to balance the depth of reasoning with computational cost while also ensuring that models can adapt their reasoning strategies to meet the unique needs of each problem.

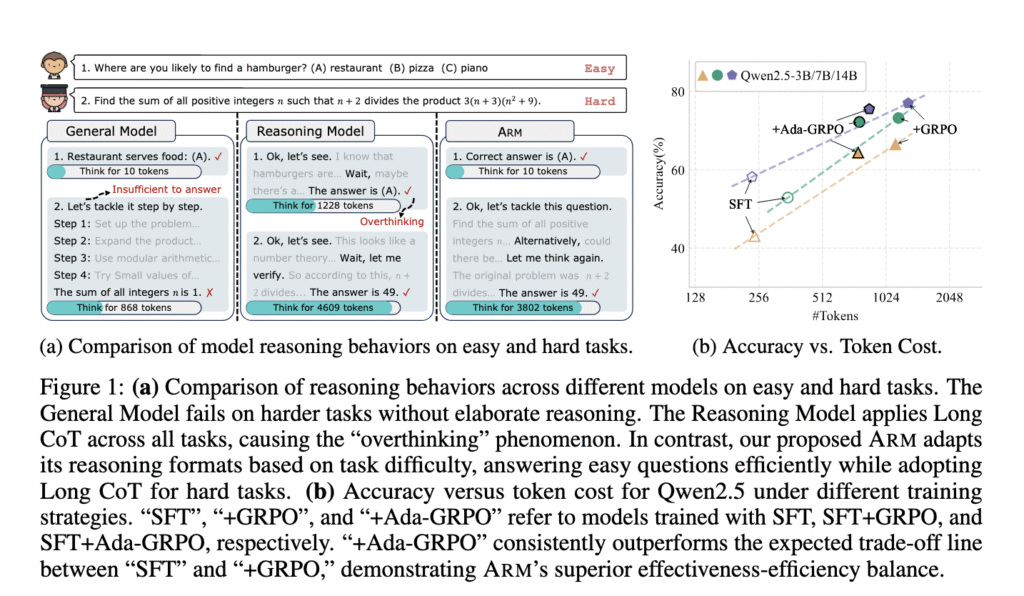

A key issue with current reasoning models is their inability to tailor the reasoning process to task complexity. Most models, including well-known ones like OpenAI’s o1 and DeepSeek-R1, apply a uniform strategy, typically relying on Long CoT for every task. This causes the “overthinking” problem, where models generate unnecessarily verbose reasoning for simple tasks. Beyond wasting compute, this can also hurt accuracy, because excessive reasoning may introduce irrelevant information. Approaches such as prompt-guided generation and token budget estimation have tried to mitigate the issue, but they depend on predefined assumptions that do not always hold across diverse tasks.

Attempts to address these issues include GRPO (Group Relative Policy Optimization), length-penalty mechanisms, and rule-based prompt controls. GRPO lets a model learn different reasoning strategies by rewarding correct answers, but because Long CoT tends to earn that reward most often, training drifts toward it. The result is “format collapse,” in which Long CoT crowds out more efficient formats such as Short CoT or Direct Answer. Length-penalty techniques, such as THINKPRUNE, constrain output length during training or inference, but often at the cost of accuracy, especially on complex problems. None of these solutions achieves a consistent trade-off between reasoning effectiveness and efficiency, which motivates an adaptive approach.
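To see why correctness-only rewards favor Long CoT, here is a minimal sketch of GRPO's group-relative advantage. It assumes the common formulation that normalizes each rollout's reward by the group mean and (population) standard deviation; the function name `grpo_advantages` and the toy group of rollouts are hypothetical illustrations, not code from the paper.

```python
from statistics import mean, pstdev
from typing import List


def grpo_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Group-relative advantage: (reward - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std over the sampled group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group of 4 rollouts for one question: the Direct Answer is wrong,
# the three Long CoT answers are right (reward = 1 if correct, else 0).
formats = ["direct", "long_cot", "long_cot", "long_cot"]
rewards = [0.0, 1.0, 1.0, 1.0]

for fmt, adv in zip(formats, grpo_advantages(rewards)):
    print(f"{fmt:>9}: {adv:+.3f}")
```

Here the Direct Answer rollout receives a negative advantage and every Long CoT rollout a positive one. If all four rollouts were correct, every advantage would be zero, so a correct short answer earns no extra credit for being short. Under this reward alone, nothing pushes the policy back toward the cheaper formats.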

A team of researchers from Fudan University and Ohio State University introduced the Adaptive Reasoning Model (ARM), which dynamically adjusts reasoning formats based on task difficulty. ARM supports four distinct reasoning styles: Direct Answer for simple tasks, Short CoT for concise reasoning, Code for structured problem-solving, and Long CoT for deep multi-step reasoning. It operates in an Adaptive Mode by default, automatically selecting the appropriate format, and also provides Instruction-Guided and Consensus-Guided Modes for explicit control or aggregation across formats. The key innovation lies in its training process, which utilizes Ada-GRPO, an extension of GRPO that introduces a format diversity reward mechanism. This prevents the dominance of Long CoT and ensures that ARM continues to explore and use simpler reasoning formats when appropriate.
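The Instruction-Guided and Consensus-Guided Modes can be pictured as thin wrappers around generation. The sketch below assumes a hypothetical `generate(question, fmt)` callable that returns the final answer produced in the requested format. In Instruction-Guided Mode the caller simply picks `fmt`. For Consensus-Guided Mode it assumes the model queries the three efficient formats, accepts the answer when all three agree, and otherwise falls back to Long CoT. The format names and the agreement rule are assumptions for illustration, not ARM's published interface.

```python
from typing import Callable, Tuple

# Hypothetical identifiers for ARM's four reasoning formats.
EFFICIENT_FORMATS = ("direct", "short_cot", "code")
FALLBACK_FORMAT = "long_cot"

Generate = Callable[[str, str], str]  # (question, fmt) -> final answer


def instruction_guided(question: str, fmt: str, generate: Generate) -> str:
    """Instruction-Guided Mode: the caller chooses the reasoning format explicitly."""
    return generate(question, fmt)


def consensus_guided(question: str, generate: Generate) -> Tuple[str, str]:
    """Consensus-Guided Mode (assumed rule): accept an answer only if all
    efficient formats agree; otherwise fall back to Long CoT.

    Returns (answer, format_used).
    """
    answers = {fmt: generate(question, fmt) for fmt in EFFICIENT_FORMATS}
    if len(set(answers.values())) == 1:
        # All cheap formats produced the same answer: trust it.
        return answers["direct"], "consensus"
    # Disagreement suggests a harder question: spend the extra tokens.
    return generate(question, FALLBACK_FORMAT), FALLBACK_FORMAT


# Toy stand-in for a model: only the hard question makes cheap formats disagree.
def fake_generate(question: str, fmt: str) -> str:
    if question == "easy":
        return "42"
    return {"direct": "7", "short_cot": "9", "code": "9", "long_cot": "8"}[fmt]


print(consensus_guided("easy", fake_generate))  # ('42', 'consensus')
print(consensus_guided("hard", fake_generate))  # ('8', 'long_cot')
```

The design choice this illustrates is that agreement among cheap formats acts as a confidence signal. Long CoT's token cost is paid only when that signal is absent.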

The ARM methodology uses a two-stage framework. First, the model undergoes Supervised Fine-Tuning (SFT) on 10.8K questions, each paired with solutions in all four reasoning formats. The questions are drawn from datasets such as AQuA-Rat, with formats generated using models such as GPT-4o and DeepSeek-R1. This stage teaches the model the structure of each format but does not make it adaptive. The second stage applies Ada-GRPO, in which a response's reward is scaled up when its format is rare within the group of sampled rollouts, so less frequently used formats such as Direct Answer or Short CoT are not crowded out. A decay factor shrinks this scaling as training progresses, so the objective gradually reverts to plain accuracy and the model is not permanently biased toward exploring formats for their own sake. Together, these stages let ARM avoid format collapse and match its reasoning strategy to task difficulty, balancing efficiency and performance.
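The format-diversity reward can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes each rollout's reward is multiplied by a factor that starts at G / F, where G is the group size and F is the number of rollouts in the group sharing that rollout's format. That factor is assumed to decay toward 1 on a cosine schedule over training, after which GRPO's usual group normalization is applied. The function names and the cosine schedule are assumptions to check against the paper.

```python
import math
from collections import Counter
from statistics import mean, pstdev
from typing import List


def ada_grpo_scaled_rewards(
    formats: List[str], rewards: List[float], step: int, total_steps: int
) -> List[float]:
    """Scale each reward up when its format is rare in the group (assumed scheme)."""
    group_size = len(formats)
    counts = Counter(formats)
    progress = min(step / total_steps, 1.0)
    # Cosine decay weight: 1.0 at the start of training, 0.0 at the end.
    decay = 0.5 * (1.0 + math.cos(math.pi * progress))
    scaled = []
    for fmt, r in zip(formats, rewards):
        boost = group_size / counts[fmt]  # rarer format -> larger boost
        alpha = 1.0 + (boost - 1.0) * decay  # shrinks from boost down to 1.0
        scaled.append(alpha * r)  # incorrect answers (r = 0) stay at 0
    return scaled


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style normalization within the group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group in which every rollout is correct.
formats = ["direct", "long_cot", "long_cot", "long_cot"]
rewards = [1.0, 1.0, 1.0, 1.0]

early = ada_grpo_scaled_rewards(formats, rewards, step=0, total_steps=1000)
late = ada_grpo_scaled_rewards(formats, rewards, step=1000, total_steps=1000)
print(early)  # [4.0, 1.3333333333333333, 1.3333333333333333, 1.3333333333333333]
print(group_advantages(early))  # only the rare Direct Answer gets a positive advantage
print(late)  # [1.0, 1.0, 1.0, 1.0]: plain accuracy reward again
```

In plain GRPO this all-correct group would give every rollout a zero advantage. The early-training scaling is what creates a signal in favor of the cheaper format, and the decay removes that bias once the formats have been explored.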

ARM performed strongly across commonsense, mathematical, and symbolic reasoning benchmarks. Compared with models that rely solely on Long CoT, it reduced token usage by 30% on average, and by as much as 70% on simpler tasks. ARM also achieved a 2x training speedup over GRPO-based models while maintaining comparable accuracy. For example, ARM-7B achieved 75.9% accuracy on the challenging AIME’25 benchmark while using 32.5% fewer tokens. ARM-14B achieved 85.6% accuracy on OpenBookQA and 86.4% on MATH, using over 30% fewer tokens than Qwen2.5-SFT+GRPO models. These results show that ARM maintains competitive performance while delivering substantial efficiency gains.

Overall, the Adaptive Reasoning Model addresses the persistent inefficiency of reasoning models by selecting a reasoning format adaptively based on task difficulty. Ada-GRPO and the multi-format training framework substantially reduce the compute wasted on overthinking. ARM thus offers a flexible and practical way to balance accuracy and computational cost in reasoning tasks, making it a promising approach for scalable, efficient large language models.


The post This AI Paper Introduces ARM and Ada-GRPO: Adaptive Reasoning Models for Efficient and Scalable Problem-Solving appeared first on MarkTechPost.
