The Shift in Agentic AI System Needs

LLMs are widely admired for their human-like capabilities and conversational skills. However, with the rapid growth of agentic AI systems, they are increasingly being used for repetitive, specialized tasks. This shift is gaining momentum: over half of major IT companies now use AI agents, backed by significant funding and projected market growth. These agents rely on LLMs for decision-making, planning, and task execution, typically through centralized cloud APIs. Massive investments in LLM infrastructure reflect confidence that this model will remain foundational to AI’s future.

SLMs: Efficiency, Suitability, and the Case Against Over-Reliance on LLMs

Researchers from NVIDIA and Georgia Tech argue that small language models (SLMs) are not only powerful enough for many agent tasks but also more efficient and cost-effective than large models. In their view, SLMs are better suited to the repetitive and simple nature of most agentic operations. Large models remain essential for more general, conversational needs, so the authors propose using a mix of models depending on task complexity. They challenge the current reliance on LLMs in agentic systems, offer a framework for transitioning from LLMs to SLMs, and invite open discussion to encourage more resource-conscious AI deployment.

Why SLMs are Sufficient for Agentic Operations

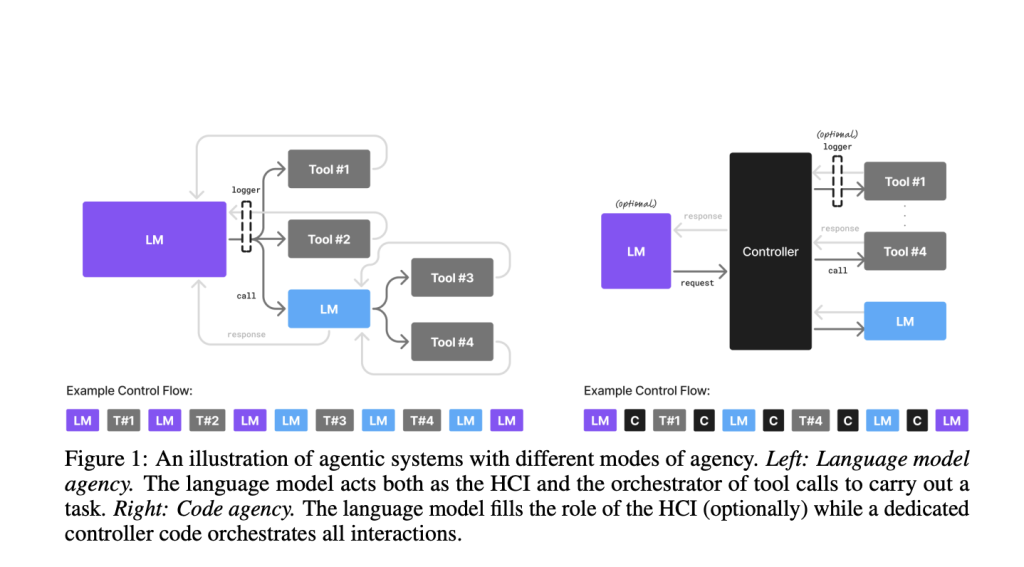

The researchers argue that SLMs are not only capable of handling most tasks within AI agents but are also more practical and cost-effective than LLMs. They define SLMs as models that can run efficiently on consumer devices, and they highlight three strengths: lower latency, reduced energy consumption, and easier customization. Since many agent tasks are repetitive and focused, SLMs are often sufficient and even preferable. The paper suggests a shift toward modular agentic systems that use SLMs by default and LLMs only when necessary, promoting a more sustainable, flexible, and inclusive approach to building intelligent systems.
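To make the "SLMs by default, LLMs only when necessary" idea concrete, here is a minimal routing sketch. It is an illustration under stated assumptions, not the paper's implementation: `small_model`, `large_model`, and the task names in `LLM_ONLY_TASKS` are hypothetical placeholders for whatever model clients and task taxonomy a real agent would use.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical stand-ins for real model clients (assumptions, not from the paper).
def small_model(prompt: str) -> str:
    return f"[slm] {prompt}"


def large_model(prompt: str) -> str:
    return f"[llm] {prompt}"


@dataclass
class Route:
    name: str
    handler: Callable[[str], str]


# Task types assumed, for illustration only, to need a general-purpose LLM.
LLM_ONLY_TASKS = {"open_ended_chat", "multi_step_planning"}


def route(task_type: str) -> Route:
    """Use the SLM by default; escalate only task types flagged as LLM-only."""
    if task_type in LLM_ONLY_TASKS:
        return Route("llm", large_model)
    return Route("slm", small_model)


def run(task_type: str, prompt: str) -> str:
    """Dispatch a prompt to whichever model the router selects."""
    return route(task_type).handler(prompt)
```

In this sketch the escalation list is static. A production system would more likely decide per request, for example by falling back to the LLM when the SLM's output fails validation.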

Arguments for LLM Dominance

Some argue that LLMs will always outperform small models (SLMs) in general language tasks due to superior scaling and semantic abilities. Others claim centralized LLM inference is more cost-efficient due to economies of scale. There is also a belief that LLMs dominate simply because they had an early start, drawing the majority of the industry’s attention. However, the study counters that SLMs are highly adaptable, cheaper to run, and can handle well-defined subtasks in agent systems effectively. Still, the broader adoption of SLMs faces hurdles, including existing infrastructure investments, evaluation bias toward LLM benchmarks, and lower public awareness.

Framework for Transitioning from LLMs to SLMs

To shift smoothly from LLMs to smaller, specialized models (SLMs) in agent-based systems, the process starts by securely collecting usage data while preserving privacy. Next, the data is cleaned and filtered to remove sensitive details. Clustering then groups common tasks to identify where SLMs can take over. Based on task needs, suitable SLMs are chosen and fine-tuned with tailored datasets, often using efficient techniques such as LoRA. In some cases, LLM outputs guide SLM training. This is not a one-time process: models should be regularly updated and refined to stay aligned with evolving user interactions and tasks.
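The cleaning and clustering steps can be sketched in a few lines of standard-library Python. This is a rough illustration, not the authors' pipeline: the email-only scrubber, the greedy token-overlap grouping (a stand-in for a proper clustering method), and the names `scrub`, `cluster`, and `slm_candidates` are all assumptions made for this example.

```python
import re

# Assumption: only email addresses are masked here; a real pipeline
# would need to cover many more kinds of sensitive data.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def scrub(text: str) -> str:
    """Replace email addresses with a placeholder token."""
    return EMAIL.sub("<EMAIL>", text)


def tokens(text: str) -> frozenset[str]:
    """Lowercase alphabetic words, so IDs and numbers do not affect similarity."""
    return frozenset(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster(prompts: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy single pass: join the first cluster whose seed prompt is similar enough."""
    clusters: list[tuple[frozenset[str], list[str]]] = []
    for prompt in prompts:
        clean = scrub(prompt)
        toks = tokens(clean)
        for seed, members in clusters:
            if jaccard(seed, toks) >= threshold:
                members.append(clean)
                break
        else:
            # No existing cluster matched, so this prompt seeds a new one.
            clusters.append((toks, [clean]))
    return [members for _, members in clusters]


def slm_candidates(prompts: list[str], min_size: int = 2,
                   threshold: float = 0.5) -> list[list[str]]:
    """Clusters with enough repeated traffic are candidates for a fine-tuned SLM."""
    return [c for c in cluster(prompts, threshold) if len(c) >= min_size]
```

Each cluster returned by `slm_candidates` would then supply the tailored dataset for fine-tuning one specialized model, while rare or one-off requests stay with the LLM.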

Conclusion: Toward Sustainable and Resource-Efficient Agentic AI

In conclusion, the researchers believe that shifting from LLMs to SLMs could significantly improve the efficiency and sustainability of agentic AI systems, especially for tasks that are repetitive and narrowly focused. They argue that SLMs are often powerful enough, more cost-effective, and better suited to such roles than general-purpose LLMs. In cases requiring broader conversational abilities, they recommend using a mix of models. To encourage progress and open dialogue, they invite feedback on and contributions to their position, and they commit to sharing responses publicly. The goal is to inspire more thoughtful and resource-efficient use of AI technologies in the future.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Why Small Language Models (SLMs) Are Poised to Redefine Agentic AI: Efficiency, Cost, and Practical Deployment appeared first on MarkTechPost.
