In this post, we explore how generative AI can revolutionize threat modeling practices by automating vulnerability identification, generating comprehensive attack scenarios, and providing contextual mitigation strategies. Unlike previous automation attempts that struggled with the creative and contextual aspects of threat analysis, generative AI overcomes these limitations through its ability to understand complex system relationships, reason about novel attack vectors, and adapt to unique architectural patterns. Where traditional automation tools relied on rigid rule sets and predefined templates, AI models can now interpret nuanced system designs, infer security implications across components, and generate threat scenarios that human analysts might overlook, making effective automated threat modeling a practical reality.

Threat modeling and why it matters

Threat modeling is a structured approach to identifying, quantifying, and addressing security risks associated with an application or system. It involves analyzing the architecture from an attacker’s perspective to discover potential vulnerabilities, determine their impact, and implement appropriate mitigations. Effective threat modeling examines data flows, trust boundaries, and potential attack vectors to create a comprehensive security strategy tailored to the specific system.

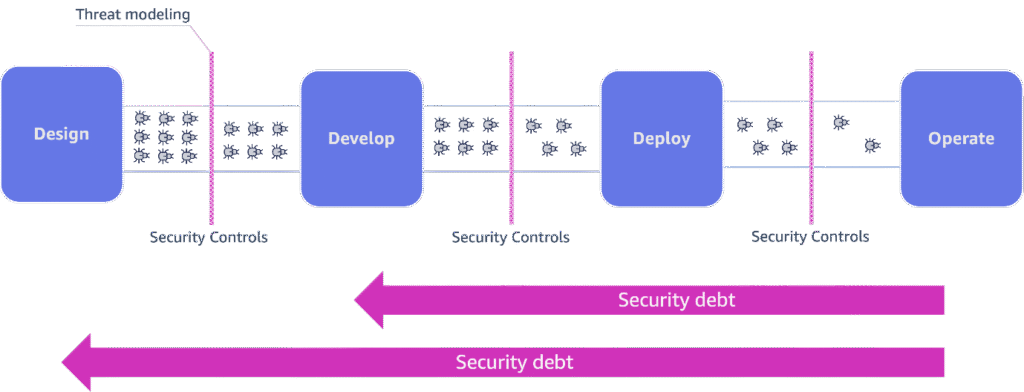

In a shift-left approach to security, threat modeling serves as a critical early intervention. By implementing threat modeling during the design phase—before a single line of code is written—organizations can identify and address potential vulnerabilities at their inception point. The following diagram illustrates this workflow.

This proactive strategy significantly reduces the accumulation of security debt and transforms security from a bottleneck into an enabler of innovation. When security considerations are integrated from the beginning, teams can implement appropriate controls throughout the development lifecycle, resulting in more resilient systems built from the ground up.

Despite these clear benefits, threat modeling remains underutilized in the software development industry. This limited adoption stems from several significant challenges inherent to traditional threat modeling approaches:

- Time requirements – The process takes 1–8 days to complete, with multiple iterations needed for full coverage. This conflicts with tight development timelines in modern software environments.

- Inconsistent assessment – Threat modeling suffers from subjectivity. Security experts often vary in their threat identification and risk level assignments, creating inconsistencies across projects and teams.

- Scaling limitations – Manual threat modeling can’t effectively address modern system complexity. The growth of microservices, cloud deployments, and system dependencies outpaces security teams’ capacity to identify vulnerabilities.

How generative AI can help

Generative AI can automate analytical tasks in threat modeling that traditionally required human judgment, reasoning, and expertise. It combines natural language processing with visual analysis to evaluate system architectures, diagrams, and documentation together. Drawing on security knowledge bases such as MITRE ATT&CK and OWASP, these models can quickly identify potential vulnerabilities across complex systems. Processing both text and visuals while referencing established security frameworks enables faster, more thorough threat assessments than traditional manual methods.

Our solution, Threat Designer, uses enterprise-grade foundation models (FMs) available in Amazon Bedrock to transform threat modeling. Using the advanced multimodal capabilities of Anthropic’s Claude Sonnet 3.7, we create comprehensive threat assessments at scale. You can also use other available models from the model catalog or use your own fine-tuned model, so you can choose between pre-trained expertise and custom-tailored capabilities specific to your security domain and organizational requirements. This adaptability helps make sure your threat modeling solution delivers insights aligned with your unique security posture.
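
As a rough illustration of what a multimodal request to a model in Amazon Bedrock can look like, the following sketch assembles the arguments for the Converse API, pairing a text prompt with an architecture diagram image. It is not taken from the Threat Designer code base: the function name, the prompt wording, the token limit, and the `example-model-id` placeholder are all assumptions made for this example.

```python
from typing import Any


def build_converse_request(
    model_id: str, diagram_png: bytes, description: str
) -> dict[str, Any]:
    """Assemble keyword arguments for the Bedrock Runtime converse() call.

    The prompt text and the maxTokens value are illustrative assumptions.
    """
    prompt = (
        "Identify potential threats in this architecture.\n\n"
        f"Description: {description}"
    )
    return {
        "modelId": model_id,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"text": prompt},
                    # Image content block: raw bytes plus the image format.
                    {"image": {"format": "png", "source": {"bytes": diagram_png}}},
                ],
            }
        ],
        "inferenceConfig": {"maxTokens": 2048},
    }


# Hypothetical usage (requires boto3 and AWS credentials, so it is not run here):
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   response = client.converse(**build_converse_request(
#       "example-model-id", open("diagram.png", "rb").read(), "Three-tier web app"))
```

Keeping request construction separate from the network call makes the payload easy to inspect and test without AWS access.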

Solution overview

Threat Designer is a user-friendly web application that makes advanced threat modeling accessible to development and security teams. Threat Designer uses large language models (LLMs) to streamline the threat modeling process and identify vulnerabilities with minimal human effort.

Key features include:

- Architecture diagram analysis – Users can submit system architecture diagrams, which the application processes using multimodal AI capabilities to understand system components and relationships

- Interactive threat catalog – The system generates a comprehensive catalog of potential threats that users can explore, filter, and refine through an intuitive interface

- Iterative refinement – With the replay functionality, teams can rerun the threat modeling process with design improvements or modifications, and see how changes impact the system’s security posture

- Standardized exports – Results can be exported in PDF or DOCX formats, facilitating integration with existing security documentation and compliance processes

- Serverless architecture – The solution runs on a cloud-based serverless infrastructure, alleviating the need for dedicated servers and providing automatic scaling based on demand

The following diagram illustrates the Threat Designer architecture.

The solution is built on a serverless stack, using AWS managed services for automatic scaling, high availability, and cost-efficiency. The solution is composed of the following core components:

- Frontend – AWS Amplify hosts a ReactJS application built with the Cloudscape design system, providing the UI

- Authentication – Amazon Cognito manages the user pool, handling authentication flows and securing access to application resources

- API layer – Amazon API Gateway serves as the communication hub, providing proxy integration between frontend and backend services with request routing and authorization

- Data storage – We use the following services for storage:

  - Two Amazon DynamoDB tables:

    - The agent execution state table maintains processing state

    - The threat catalog table stores identified threats and vulnerabilities

  - An Amazon Simple Storage Service (Amazon S3) architecture bucket stores system diagrams and artifacts

- Generative AI – Amazon Bedrock provides the FM for threat modeling, analyzing architecture diagrams and identifying potential vulnerabilities

- Backend service – An AWS Lambda function contains the REST interface business logic, built using Powertools for AWS Lambda (Python)

- Agent service – Hosted on a Lambda function, the agent service works asynchronously to manage threat analysis workflows, processing diagrams and maintaining execution state in DynamoDB

Agent service workflow

The agent service is built on LangGraph by LangChain, with which we can orchestrate complex workflows through a graph-based structure. This approach incorporates two key design patterns:

- Separation of concerns – The threat modeling process is decomposed into discrete, specialized steps that can be executed independently and iteratively. Each node in the graph represents a specific function, such as image processing, asset identification, data flow analysis, or threat enumeration.

- Structured output – Each component in the workflow produces standardized, well-defined outputs that serve as inputs to subsequent steps, which keeps the representation consistent and simplifies downstream integrations.
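
To make the structured output pattern more concrete, here is a minimal sketch of how a step's output could be given a fixed shape and checked before the next step consumes it. The `Threat` fields and the `parse_threats` helper are hypothetical and chosen only for illustration; the actual schema used by Threat Designer may differ.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Threat:
    """Hypothetical schema for one entry in the threat catalog."""
    title: str
    target: str
    category: str
    mitigations: list[str] = field(default_factory=list)


def parse_threats(raw_json: str) -> list[Threat]:
    """Turn a JSON array produced by the model into typed Threat records.

    Constructing the dataclass raises TypeError when a required field is
    missing or an unexpected field appears, so malformed output is caught
    before it reaches downstream steps.
    """
    return [Threat(**item) for item in json.loads(raw_json)]
```

With a fixed schema like this, the same records can feed the next workflow step, the stored catalog, or an export routine without per-consumer parsing.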

The agent workflow follows a directed graph where processing begins at the Start node and proceeds through several specialized stages, as illustrated in the following diagram.

The workflow includes the following nodes:

- Image processing – The Image processing node processes the architecture diagram image and converts it into the appropriate format for the LLM to consume

- Assets – This information, along with textual descriptions, feeds into the Assets node, which identifies and catalogs system components

- Flows – The workflow then progresses to the Flows node, mapping data movements and trust boundaries between components

- Threats – Lastly, the Threats node uses this information to identify potential vulnerabilities and attack vectors
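
The node sequence above can be sketched in plain Python as functions that each read a shared state and return the keys they add. This sketch deliberately does not use LangGraph, and every node body is a stand-in: the base64 encoding, the hard-coded asset list, and the generated threat strings are assumptions that replace the real image handling and LLM calls.

```python
import base64
from typing import TypedDict


class State(TypedDict, total=False):
    image_bytes: bytes
    description: str
    image_b64: str
    assets: list[str]
    flows: list[tuple[str, str]]
    threats: list[str]


def image_processing(state: State) -> State:
    # Assumption: the model-ready format is a base64 string.
    return {"image_b64": base64.b64encode(state["image_bytes"]).decode("ascii")}


def assets(state: State) -> State:
    # Stand-in for an LLM call that catalogs components from the diagram.
    return {"assets": ["web client", "api", "database"]}


def flows(state: State) -> State:
    # Stand-in: treat consecutive assets as connected by a data flow.
    names = state["assets"]
    return {"flows": list(zip(names, names[1:]))}


def threats(state: State) -> State:
    # Stand-in for an LLM call that enumerates threats per data flow.
    return {"threats": [f"Tampering with data sent from {s} to {t}"
                        for s, t in state["flows"]]}


NODES = [image_processing, assets, flows, threats]


def run(state: State) -> State:
    """Run each node in order, merging its partial output into the state."""
    for node in NODES:
        state = {**state, **node(state)}
    return state
```

Because each node only returns the keys it owns, a node can be replaced or rerun without touching the others.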

A critical innovation in our agent architecture is the adaptive iteration mechanism implemented through conditional edges in the graph. This feature addresses one of the fundamental challenges in LLM-based threat modeling: controlling the comprehensiveness and depth of the analysis.

The conditional edge after the Threats node enables two powerful operational modes:

- User-controlled iteration – In this mode, the user specifies the number of iterations the agent should perform. With each pass through the loop, the agent enriches the threat catalog by analyzing edge cases that might have been overlooked in previous iterations. This approach gives security professionals direct control over the thoroughness of the analysis.

- Autonomous gap analysis – In fully agentic mode, a specialized gap analysis component evaluates the current threat catalog. This component identifies potential blind spots or underdeveloped areas in the threat model and triggers additional iterations until it determines the threat catalog is sufficiently comprehensive. The agent essentially performs its own quality assurance, continuously refining its output until it meets predefined completeness criteria.
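
The decision made at the conditional edge can be sketched as a small routing function that returns the name of the next step. This is an illustrative sketch only: the `RunState` fields, the `"continue"` and `"finish"` labels, and the safety cap applied in auto mode are assumptions, not details confirmed by the Threat Designer source.

```python
from dataclasses import dataclass, field


@dataclass
class RunState:
    mode: str                 # "manual" or "auto"
    max_iterations: int       # user-chosen count, or a safety cap in auto mode
    iteration: int = 0        # completed passes through the Threats node
    gaps: list[str] = field(default_factory=list)  # output of gap analysis


def route_after_threats(state: RunState) -> str:
    """Decide whether to loop back for another pass or finish."""
    if state.iteration >= state.max_iterations:
        return "finish"
    if state.mode == "manual":
        # User-controlled: keep looping until the requested count is reached.
        return "continue"
    # Autonomous: loop only while the gap analysis still reports blind spots.
    return "continue" if state.gaps else "finish"
```

In a graph framework, a function like this would typically be attached as the condition on the edge leaving the Threats node, with each returned label mapped to a target node.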

Prerequisites

Before you deploy Threat Designer, make sure you have the required prerequisites in place. For more information, refer to the GitHub repo.

Get started with Threat Designer

To start using Threat Designer, follow the step-by-step deployment instructions from the project’s README available in GitHub. After you deploy the solution, you’re ready to create your first threat model. Log in and complete the following steps:

- Choose Submit threat model to initiate a new threat model.

- Complete the submission form with your system details:

  - Required fields: Provide a title and architecture diagram image.

  - Recommended fields: Provide a solution description and assumptions (these significantly improve the quality of the threat model).

- Configure analysis parameters:

  - Choose your iteration mode:

    - Auto (default): The agent intelligently determines when the threat catalog is comprehensive.

    - Manual: Specify up to 15 iterations for more control.

  - Configure your reasoning boost to specify how much time the model spends on analysis (available when using Anthropic’s Claude Sonnet 3.7).

- Choose Start threat modeling to launch the analysis.
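
The form rules in these steps can be summarized as a small validation sketch. The `Submission` class, its field names, and the lower bound of one iteration are hypothetical; only the required fields, the two iteration modes, and the 15-iteration maximum come from the steps above.

```python
from dataclasses import dataclass
from typing import Optional

MAX_MANUAL_ITERATIONS = 15  # upper limit stated for manual mode


@dataclass
class Submission:
    title: str
    diagram: bytes
    description: str = ""          # recommended, not required
    assumptions: str = ""          # recommended, not required
    iteration_mode: str = "auto"   # "auto" (default) or "manual"
    iterations: Optional[int] = None


def validate(sub: Submission) -> list[str]:
    """Return a list of problems; an empty list means the form is valid."""
    errors = []
    if not sub.title.strip():
        errors.append("title is required")
    if not sub.diagram:
        errors.append("architecture diagram image is required")
    if sub.iteration_mode == "manual":
        # Assumption: at least one iteration must be requested.
        if sub.iterations is None or not 1 <= sub.iterations <= MAX_MANUAL_ITERATIONS:
            errors.append(f"iterations must be between 1 and {MAX_MANUAL_ITERATIONS}")
    elif sub.iteration_mode != "auto":
        errors.append("iteration_mode must be 'auto' or 'manual'")
    return errors
```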

You can monitor progress through the intuitive interface, which displays each execution step in real time. The complete analysis typically takes 5–15 minutes, depending on system complexity and selected parameters.

When the analysis is complete, you will have access to a comprehensive threat model that you can explore, refine, and export.

Clean up

To avoid incurring future charges, delete the solution by running the ./destroy.sh script. Refer to the README for more details.

Conclusion

In this post, we demonstrated how generative AI transforms threat modeling from an exclusive, expert-driven process into an accessible security practice for all development teams. By using FMs through our Threat Designer solution, we’ve democratized sophisticated security analysis, enabling organizations to identify vulnerabilities earlier and more consistently. This AI-powered approach removes the traditional barriers of time, expertise, and scalability, making shift-left security a practical reality rather than just an aspiration—ultimately building more resilient systems without sacrificing development velocity.

Deploy Threat Designer following the README instructions, upload your architecture diagram, and quickly receive AI-generated security insights. This streamlined approach helps you integrate proactive security measures into your development process without compromising speed or innovation—making comprehensive threat modeling accessible to teams of different sizes.

About the Authors

Edvin Hallvaxhiu is a senior security architect at Amazon Web Services, specialized in cybersecurity and automation. He helps customers design secure, compliant cloud solutions.

Sindi Cali is a consultant with AWS Professional Services. She supports customers in building data-driven applications in AWS.

Aditi Gupta is a Senior Global Engagement Manager at AWS ProServe. She specializes in delivering impactful Big Data and AI/ML solutions that enable AWS customers to maximize their business value through data utilization.

Rahul Shaurya is a Principal Data Architect at Amazon Web Services. He helps and works closely with customers building data platforms and analytical applications on AWS.
