Amazon SageMaker now offers fully managed support for MLflow 3.0 that streamlines AI experimentation and accelerates your generative AI journey from idea to production. This release transforms managed MLflow from experiment tracking to providing end-to-end observability, reducing time-to-market for generative AI development.

As customers across industries accelerate their generative AI development, they require capabilities to track experiments, observe behavior, and evaluate performance of models and AI applications. Data scientists and developers struggle to effectively analyze the performance of their models and AI applications from experimentation to production, making it hard to find root causes and resolve issues. Teams spend more time integrating tools than improving the quality of their models or generative AI applications.

With the launch of fully managed MLflow 3.0 on Amazon SageMaker AI, you can accelerate generative AI development by making it easier to track experiments and observe behavior of models and AI applications using a single tool. Tracing capabilities in fully managed MLflow 3.0 provide customers the ability to record the inputs, outputs, and metadata at every step of a generative AI application, so developers can quickly identify the source of bugs or unexpected behaviors. By maintaining records of each model and application version, fully managed MLflow 3.0 offers traceability to connect AI responses to their source components, which means developers can quickly trace an issue directly to the specific code, data, or parameters that generated it. With these capabilities, customers using Amazon SageMaker HyperPod to train and deploy foundation models (FMs) can now use managed MLflow to track experiments, monitor training progress, gain deeper insights into the behavior of models and AI applications, and manage their machine learning (ML) lifecycle at scale. This reduces troubleshooting time and enables teams to focus more on innovation.

This post walks you through the core concepts of fully managed MLflow 3.0 on SageMaker and provides technical guidance on how to use the new features to help accelerate your next generative AI application development.

Getting started

You can get started with fully managed MLflow 3.0 on Amazon SageMaker to track experiments, manage models, and streamline your generative AI/ML lifecycle through the AWS Management Console, AWS Command Line Interface (AWS CLI), or API.

Prerequisites

To get started, you need:

- An AWS account with billing enabled

- An Amazon SageMaker Studio AI domain. To create a domain, refer to Guide to getting set up with Amazon SageMaker AI.

Configure your environment to use SageMaker managed MLflow Tracking Server

To perform the configuration, follow these steps:

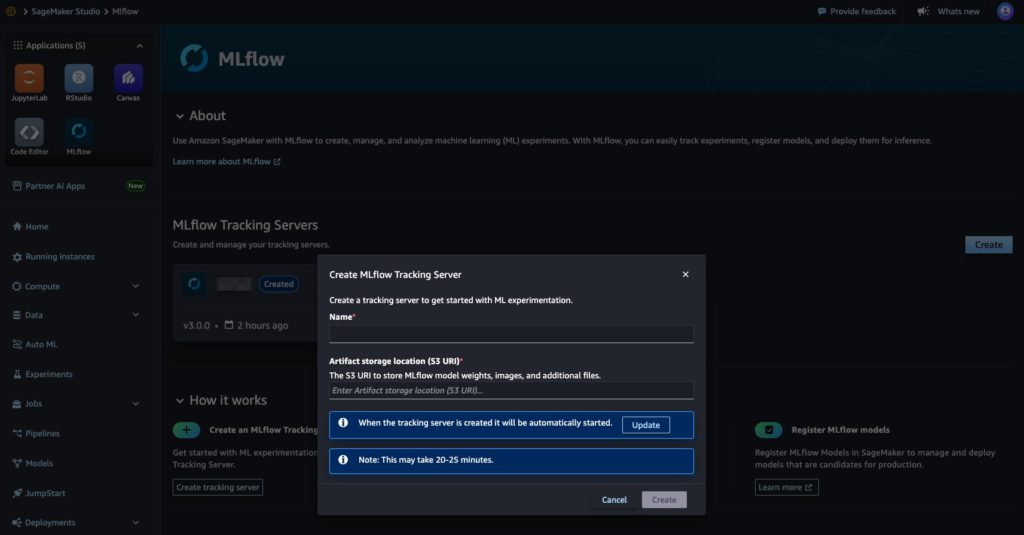

- In the SageMaker Studio UI, in the Applications pane, choose MLflow and choose Create.

- Enter a unique name for your tracking server and specify the Amazon Simple Storage Service (Amazon S3) URI where your experiment artifacts will be stored. When you’re ready, choose Create. By default, SageMaker will select version 3.0 to create the MLflow tracking server.

- Optionally, you can choose Update to adjust settings such as server size, tags, or AWS Identity and Access Management (IAM) role.

The server will now be provisioned and started automatically, typically within 25 minutes. After setup, you can launch the MLflow UI from SageMaker Studio to start tracking your ML and generative AI experiments. For more details on tracking server configurations, refer to Machine learning experiments using Amazon SageMaker AI with MLflow in the SageMaker Developer Guide.
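The console steps above can also be scripted through the API. The following is a minimal sketch, assuming the boto3 SageMaker client and its `create_mlflow_tracking_server` operation. The helper names, server name, bucket, and role ARN are placeholders introduced for illustration, and the `"3.0"` version string is an assumption to confirm against the versions offered in your Region.

```python
def build_tracking_server_request(name, artifact_s3_uri, role_arn,
                                  size="Small", mlflow_version="3.0"):
    """Assemble keyword arguments for create_mlflow_tracking_server."""
    if not artifact_s3_uri.startswith("s3://"):
        raise ValueError("artifact_s3_uri must be an s3:// URI")
    return {
        "TrackingServerName": name,
        "ArtifactStoreUri": artifact_s3_uri,
        "RoleArn": role_arn,
        "TrackingServerSize": size,       # "Small", "Medium", or "Large"
        "MlflowVersion": mlflow_version,  # assumption: confirm the exact version string
    }


def create_tracking_server(sagemaker_client, **settings):
    """Start provisioning a tracking server and return its ARN."""
    request = build_tracking_server_request(**settings)
    response = sagemaker_client.create_mlflow_tracking_server(**request)
    return response["TrackingServerArn"]


# Example usage (placeholder values, requires boto3 and AWS credentials):
# import boto3
# arn = create_tracking_server(
#     boto3.client("sagemaker"),
#     name="my-tracking-server",
#     artifact_s3_uri="s3://amzn-s3-demo-bucket/mlflow",
#     role_arn="arn:aws:iam::111122223333:role/my-mlflow-role",
# )
```

Passing the client in as an argument keeps the request-building logic easy to check without making any AWS calls.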

To begin tracking your experiments with your newly created SageMaker managed MLflow tracking server, you need to install both MLflow and the AWS SageMaker MLflow Python packages in your environment. You can use SageMaker Studio managed JupyterLab, SageMaker Studio Code Editor, a local integrated development environment (IDE), or another supported environment where your AI workloads operate to track with the SageMaker managed MLflow tracking server.

To install both Python packages using pip:

    pip install mlflow==3.0 sagemaker-mlflow==0.1.0

To connect and start logging your AI experiments, parameters, and models directly to the managed MLflow on SageMaker, set the MLflow tracking URI to the Amazon Resource Name (ARN) of your SageMaker MLflow tracking server.
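A minimal sketch of this step, assuming the `sagemaker-mlflow` plugin is installed so that MLflow accepts the tracking server ARN as its tracking URI. The helper names, the ARN check, and the experiment name are illustrative assumptions, not part of either package.

```python
import re

# Expected shape of a SageMaker MLflow tracking server ARN (illustrative check only).
_ARN_PATTERN = re.compile(
    r"^arn:aws[a-z-]*:sagemaker:[a-z0-9-]+:\d{12}:mlflow-tracking-server/[\w-]+$"
)


def is_tracking_server_arn(value):
    """Return True if value looks like a tracking server ARN."""
    return bool(_ARN_PATTERN.match(value))


def connect(tracking_server_arn, experiment_name):
    """Point MLflow at the managed tracking server and select an experiment."""
    if not is_tracking_server_arn(tracking_server_arn):
        raise ValueError(f"Not a tracking server ARN: {tracking_server_arn!r}")
    import mlflow  # requires the mlflow and sagemaker-mlflow packages

    mlflow.set_tracking_uri(tracking_server_arn)
    mlflow.set_experiment(experiment_name)


# Example usage (replace the placeholder ARN with your own):
# connect(
#     "arn:aws:sagemaker:us-east-1:111122223333:mlflow-tracking-server/my-tracking-server",
#     "customer-support-agent",
# )
```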

Now your environment is configured and ready to track your experiments with your SageMaker managed MLflow tracking server.

Implement generative AI application tracing and version tracking

Generative AI applications have multiple components, including code, configurations, and data, which can be challenging to manage without systematic versioning. A LoggedModel entity in managed MLflow 3.0 represents your AI model, agent, or generative AI application within an experiment. It provides unified tracking of model artifacts, execution traces, evaluation metrics, and metadata throughout the development lifecycle. A trace is a log of inputs, outputs, and intermediate steps from a single application execution. Traces provide insights into application performance, execution flow, and response quality, enabling debugging and evaluation. With LoggedModel, you can track and compare different versions of your application, making it easier to identify issues, deploy the best version, and maintain a clear record of what was deployed and when.

To implement version tracking and tracing with managed MLflow 3.0 on SageMaker, you take four steps. First, establish a versioned model identity using a Git commit hash. Second, set this as the active model context so all subsequent traces are automatically linked to this specific version. Third, enable automatic logging for Amazon Bedrock interactions. Finally, make an API call to Anthropic’s Claude 3.5 Sonnet, which is fully traced with inputs, outputs, and metadata automatically captured within the established model context. Managed MLflow 3.0 tracing is already integrated with various generative AI libraries and provides a one-line automatic tracing experience for all the supported libraries. For information about supported libraries, refer to Supported Integrations in the MLflow documentation.
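The four steps can be sketched as follows. This is a hedged example, assuming MLflow 3.0's `mlflow.set_active_model` and `mlflow.bedrock.autolog`, plus the Amazon Bedrock Converse API through boto3. The helper names, the `agent-` prefix, the Region, and the model ID are assumptions; use a model ID or inference profile that is enabled in your account.

```python
import subprocess


def current_git_version(default="unknown"):
    """Return the short hash of the current Git commit, or default outside a repo."""
    try:
        out = subprocess.check_output(
            ["git", "rev-parse", "--short=8", "HEAD"], stderr=subprocess.DEVNULL
        )
    except (subprocess.CalledProcessError, FileNotFoundError):
        return default
    return out.decode("ascii").strip()


def logged_model_name(commit, prefix="agent"):
    """Build a versioned LoggedModel name such as 'agent-1a2b3c4d'."""
    return f"{prefix}-{commit}"


def run_traced_request(prompt,
                       model_id="anthropic.claude-3-5-sonnet-20241022-v2:0",
                       region="us-east-1"):
    """Send one prompt to Amazon Bedrock with tracing linked to the current version."""
    import boto3
    import mlflow

    # Steps 1-2: versioned identity becomes the active model context for new traces.
    mlflow.set_active_model(name=logged_model_name(current_git_version()))
    # Step 3: enable automatic tracing before the Bedrock client is created.
    mlflow.bedrock.autolog()
    # Step 4: the Converse call is traced with its inputs, outputs, and metadata.
    bedrock = boto3.client("bedrock-runtime", region_name=region)
    response = bedrock.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return response["output"]["message"]["content"][0]["text"]
```

Calling `run_traced_request("Summarize my account activity")` after connecting to the tracking server should produce one trace associated with the versioned model.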

After logging this information, you can track these generative AI experiments and the logged model for the agent in the managed MLflow 3.0 tracking server UI, as shown in the following screenshot.

In addition to the one-line auto tracing functionality, MLflow offers a Python SDK for manually instrumenting your code and manipulating traces. Refer to the code sample notebook sagemaker_mlflow_strands.ipynb in the aws-samples GitHub repository, where we use MLflow manual instrumentation to trace Strands Agents. With tracing capabilities in fully managed MLflow 3.0, you can record the inputs, outputs, and metadata associated with each intermediate step of a request, so you can pinpoint the source of bugs and unexpected behaviors.
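As a rough illustration of manual instrumentation (this is not the code from the referenced notebook), the sketch below wraps a hypothetical balance-lookup tool in nested spans using `mlflow.start_span`. The datastore, function names, and span names are invented for the example.

```python
_BALANCES = {"acct-001": 1250.75}  # hypothetical in-memory datastore


def lookup_balance(account_id):
    """Hypothetical tool: return the balance for an account, or None if unknown."""
    return _BALANCES.get(account_id)


def format_answer(balance):
    """Turn the tool result into a user-facing reply."""
    if balance is None:
        return "I could not find that account."
    return f"Your current balance is ${balance:,.2f}."


def answer_with_tracing(question, account_id):
    """Answer a question while recording each intermediate step as a span."""
    import mlflow

    with mlflow.start_span(name="support_agent") as root:
        root.set_inputs({"question": question, "account_id": account_id})
        # The nested span appears as a child step in the trace view.
        with mlflow.start_span(name="lookup_balance", span_type="TOOL") as tool_span:
            tool_span.set_inputs({"account_id": account_id})
            balance = lookup_balance(account_id)
            tool_span.set_outputs({"balance": balance})
        answer = format_answer(balance)
        root.set_outputs({"answer": answer})
    return answer
```

For functions whose arguments and return values are already the inputs and outputs you want recorded, the `@mlflow.trace` decorator is a shorter alternative to explicit spans.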

These capabilities provide observability in your AI workload by capturing detailed information about the execution of the workload services, nodes, and tools that you can see under the Traces tab.

You can inspect each trace, as shown in the following image, by choosing the request ID in the traces tab for the desired trace.

Fully managed MLflow 3.0 on Amazon SageMaker also introduces the capability to tag traces. Tags are mutable key-value pairs you can attach to traces to add valuable metadata and context. Trace tags make it straightforward to organize, search, and filter traces based on criteria such as user session, environment, model version, or performance characteristics. You can add, update, or remove tags at any stage: during trace execution using mlflow.update_current_trace(), or after a trace is logged using the MLflow APIs or UI.

Managed MLflow 3.0 makes it seamless to search and analyze traces, helping teams quickly pinpoint issues, compare agent behaviors, and optimize performance. The tracing UI and Python API both support powerful filtering, so you can drill down into traces based on attributes such as status, tags, user, environment, or execution time as shown in the screenshot below. For example, you can instantly find all traces with errors, filter by production environment, or search for traces from a specific request. This capability is essential for debugging, cost analysis, and continuous improvement of generative AI applications.
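The tagging workflow might look like the following sketch. It assumes `mlflow.update_current_trace(tags=...)` for tagging during execution and `mlflow.set_trace_tag` for tagging a logged trace. The tag keys, helper names, and the `reviewed` tag are illustrative assumptions.

```python
def build_trace_tags(environment, session_id, model_version):
    """Return trace tags as strings, since tags are key-value text pairs."""
    return {
        "environment": str(environment),
        "session_id": str(session_id),
        "model_version": str(model_version),
    }


def answer_with_tags(question, tags):
    """Run one traced request and attach tags while the trace is still active."""
    import mlflow

    with mlflow.start_span(name="answer_question") as span:
        span.set_inputs({"question": question})
        mlflow.update_current_trace(tags=tags)
        answer = f"Echo: {question}"  # placeholder for the real application logic
        span.set_outputs({"answer": answer})
    return answer


def mark_reviewed(trace_id):
    """Add a tag to a trace that has already been logged."""
    import mlflow

    mlflow.set_trace_tag(trace_id, "reviewed", "true")
```

The trace ID passed to `mark_reviewed` can be copied from the Traces tab in the MLflow UI.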

The following screenshot displays the traces returned when searching for the tag ‘Production’.

To run the same kind of search from code, you can call mlflow.search_traces() with a filter string, for example to return all traces in production with a successful status.
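A minimal sketch of that search, assuming traces carry an `environment` tag (a naming choice made for this example) and that the filter syntax accepts `tags.<key>` and `attributes.status`; check the filter grammar for your MLflow version.

```python
def build_trace_filter(environment="production", status="OK"):
    """Compose a filter string for traces in one environment with a given status."""
    return f"tags.environment = '{environment}' AND attributes.status = '{status}'"


def search_production_traces(experiment_id, max_results=50):
    """Return matching traces from one experiment (a pandas DataFrame by default)."""
    import mlflow

    return mlflow.search_traces(
        experiment_ids=[experiment_id],
        filter_string=build_trace_filter(),
        max_results=max_results,
    )
```

Keeping the filter in its own function makes it straightforward to reuse the same criteria for other environments or for error traces, for example `build_trace_filter(status="ERROR")`.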

Generative AI use case walkthrough with MLflow tracing

Building and deploying generative AI agents such as chat-based assistants, code generators, or customer support assistants requires deep visibility into how these agents interact with large language models (LLMs) and external tools. In a typical agentic workflow, the agent loops through reasoning steps, calling LLMs and using tools or subsystems such as search APIs or Model Context Protocol (MCP) servers until it completes the user’s task. These complex, multistep interactions make debugging, optimization, and cost tracking especially challenging.

Traditional observability tools fall short in generative AI because agent decisions, tool calls, and LLM responses are dynamic and context-dependent. Managed MLflow 3.0 tracing provides comprehensive observability by capturing every LLM call, tool invocation, and decision point in your agent’s workflow. You can use this end-to-end trace data to:

- Debug agent behavior – Pinpoint where an agent’s reasoning deviates or why it produces unexpected outputs.

- Monitor tool usage – Discover how and when external tools are called and analyze their impact on quality and cost.

- Track performance and cost – Measure latency, token usage, and API costs at each step of the agentic loop.

- Audit and govern – Maintain detailed logs for compliance and analysis.

Imagine a real-world scenario using the managed MLflow 3.0 tracing UI for a sample finance customer support agent equipped with a tool to retrieve financial data from a datastore. While you’re developing a generative AI customer support agent or analyzing the agent behavior in production, you can observe how the agent responds and whether its execution calls a product database tool for more accurate recommendations. For illustration, the first trace, shown in the following screenshot, shows the agent handling a user query without invoking any tools. The trace captures the prompt, agent response, and agent decision points. The agent’s response lacks product-specific details. The trace makes it clear that no external tool was called, and you can quickly identify the behavior in the agent’s reasoning chain.

The second trace, shown in the following screenshot, captures the same agent, but this time it decides to call the product database tool. The trace logs the tool invocation, the returned product data, and how the agent incorporates this information into its final response. Here, you can observe improved answer quality, a slight increase in latency, and additional API cost with higher token usage.

By comparing these traces side by side, you can debug why the agent sometimes skips using the tool, optimize when and how tools are called, and balance quality against latency and cost. MLflow’s tracing UI makes these agentic loops transparent, actionable, and seamless to analyze at scale. This post’s sample agent and all necessary code are available in the aws-samples GitHub repository, where you can replicate and adapt them for your own applications.
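The side-by-side comparison can also be expressed in a few lines of code once you have extracted per-trace figures. The sketch below is plain Python over a hypothetical `TraceSummary` record; the field names and the numbers in the usage note are invented for illustration and are not measurements from the sample agent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceSummary:
    """Hypothetical per-trace figures extracted from the tracing UI or API."""
    label: str
    latency_ms: int
    total_tokens: int
    tool_calls: int


def compare_traces(baseline, candidate):
    """Return how much latency, token usage, and tool calls changed between two traces."""
    return {
        "latency_delta_ms": candidate.latency_ms - baseline.latency_ms,
        "token_delta": candidate.total_tokens - baseline.total_tokens,
        "extra_tool_calls": candidate.tool_calls - baseline.tool_calls,
    }
```

For example, comparing `TraceSummary("no_tool", 820, 310, 0)` with `TraceSummary("with_tool", 1340, 690, 1)` reports 520 ms of added latency, 380 additional tokens, and one extra tool call, which you can weigh against the improvement in answer quality.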

Cleanup

After it’s created, a SageMaker managed MLflow tracking server will incur costs until you delete or stop it. Billing for tracking servers is based on the duration the servers have been running, the size selected, and the amount of data logged to the tracking servers. You can stop tracking servers when they’re not in use to save costs, or you can delete them using the API or the SageMaker Studio UI. For more details on pricing, refer to Amazon SageMaker pricing.
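Stopping or deleting a server through the API can be sketched as follows, assuming the boto3 SageMaker client operations `stop_mlflow_tracking_server` and `delete_mlflow_tracking_server`. The wrapper functions and the server name are placeholders for illustration.

```python
def stop_tracking_server(sagemaker_client, name):
    """Stop a tracking server so it no longer accrues running-time charges."""
    sagemaker_client.stop_mlflow_tracking_server(TrackingServerName=name)


def delete_tracking_server(sagemaker_client, name):
    """Delete a tracking server that is no longer needed."""
    sagemaker_client.delete_mlflow_tracking_server(TrackingServerName=name)


# Example usage (requires boto3 and AWS credentials):
# import boto3
# sm = boto3.client("sagemaker")
# stop_tracking_server(sm, "my-tracking-server")
```

A stopped server can be started again later, whereas deletion is permanent, so stopping is the safer choice when you expect to return to the experiments.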

Conclusion

Fully managed MLflow 3.0 on Amazon SageMaker AI is now available. Get started with sample code in the aws-samples GitHub repository. We invite you to explore this new capability and experience the enhanced efficiency and control it brings to your ML projects. To learn more, visit Machine Learning Experiments using Amazon SageMaker with MLflow.

For more information, visit the SageMaker Developer Guide and send feedback to AWS re:Post for SageMaker or through your usual AWS Support contacts.

About the authors

Ram Vittal is a Principal ML Solutions Architect at AWS. He has over 3 decades of experience architecting and building distributed, hybrid, and cloud applications. He is passionate about building secure, scalable, reliable AI/ML and big data solutions to help enterprise customers with their cloud adoption and optimization journey to improve their business outcomes. In his spare time, he rides his motorcycle and walks with his three-year-old sheep-a-doodle!

Sandeep Raveesh is a GenAI Specialist Solutions Architect at AWS. He works with customers through their AIOps journey across model training, Retrieval-Augmented Generation (RAG), GenAI agents, and scaling GenAI use cases. He also focuses on go-to-market strategies, helping AWS build and align products to solve industry challenges in the generative AI space. You can find Sandeep on LinkedIn.

Amit Modi is the product leader for SageMaker AIOps and Governance, and Responsible AI at AWS. With over a decade of B2B experience, he builds scalable products and teams that drive innovation and deliver value to customers globally.

Rahul Easwar is a Senior Product Manager at AWS, leading managed MLflow and Partner AI Apps within the SageMaker AIOps team. With over 15 years of experience spanning startups to enterprise technology, he leverages his entrepreneurial background and MBA from Chicago Booth to build scalable ML platforms that simplify AI adoption for organizations worldwide. Connect with Rahul on LinkedIn to learn more about his work in ML platforms and enterprise AI solutions.
