Amazon’s Finance Technologies Tax team (FinTech Tax) manages mission-critical services for tax computation, deduction, remittance, and reporting across global jurisdictions. The application processes billions of transactions annually across multiple international marketplaces.

In this post, we show how the team implemented tiered tax withholding using Amazon DynamoDB transactions and conditional writes. By using these DynamoDB features, they built an extensible, resilient, and event-driven tax computation service that delivers millisecond latency at scale. We also explore the architectural decisions and implementation details that enable consistent performance while maintaining strict data accuracy.

Requirements

Amazon operates in a complex fintech tax landscape spanning multiple jurisdictions, where it must manage diverse withholding tax requirements. The company requires a robust tax processing solution to handle its massive transaction volume. The system needs to process millions of daily transactions in real time while maintaining precise records of cumulative transaction values per individual for withholding tax calculations. Key requirements include applying tiered withholding tax rates accurately and providing seamless integration with Amazon’s existing systems. The solution must maintain data consistency and high availability while supporting regulatory compliance across different withholding tax regimes.

Challenges

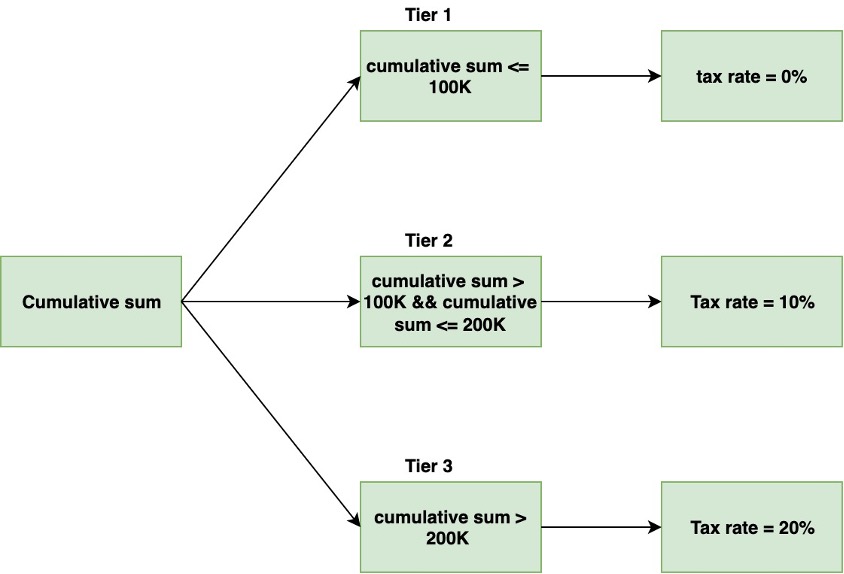

The primary challenge lies in navigating the intricate web of tax laws globally and maintaining strict compliance, particularly with the tiered rate model. Under this model, different tax withholding rates are applied based on an individual’s total transaction value crossing specific thresholds within a financial year. As an individual’s cumulative transaction value increases and crosses predefined thresholds, the tax withholding rate applied to their transactions changes accordingly. For example, a lower rate might be applied to transactions until the total value reaches 100,000 Indian Rupees (INR), after which a higher rate is applied for transactions exceeding that threshold.
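As a rough illustration of the tiered model, the sketch below looks up the tier that contains an individual's running total and applies that tier's rate to a transaction. Only the 100,000 INR threshold comes from the example above; the second threshold, all three rates, and the names `TIERS`, `tier_for`, and `withholding_for` are assumptions made for this sketch. It also assumes the rate is chosen from the running total before the current transaction is added, which the post does not specify.

```python
from decimal import Decimal

# (lower bound inclusive, upper bound exclusive or None, rate).
# The 100,000 INR threshold comes from the example above; the second
# threshold and all three rates are made up for illustration.
TIERS = [
    (Decimal("0"), Decimal("100000"), Decimal("0.01")),
    (Decimal("100000"), Decimal("500000"), Decimal("0.02")),
    (Decimal("500000"), None, Decimal("0.05")),
]


def tier_for(cumulative_value):
    """Return the (lower, upper, rate) tier containing cumulative_value."""
    for lower, upper, rate in TIERS:
        if cumulative_value >= lower and (upper is None or cumulative_value < upper):
            return lower, upper, rate
    raise ValueError(f"no tier covers {cumulative_value}")


def withholding_for(transaction_value, cumulative_value):
    """Tax withheld on one transaction, using the tier of the running total."""
    _, _, rate = tier_for(cumulative_value)
    return transaction_value * rate
```

Returning the tier bounds along with the rate is a deliberate choice here: the same bounds can later serve as the range in a conditional write.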

The figure at the start of this section illustrates a three-tier pricing model demonstrating progressive rate changes based on cumulative usage thresholds.

The challenge with the tiered rate model lies in accurately tracking each individual’s cumulative transaction value while calculating withholding in real time. Amazon must process millions of transactions per day and make sure that the correct withholding rate is applied to forward and reverse transactions (such as positive and negative accounting adjustments) as they arrive. This requires a system capable of handling high transaction volumes (approximately 150 transactions per second for an individual) while maintaining accurate records.

Overview of solution

The following diagram shows the high-level architecture of the Withholding Tax Computation service at Amazon.

The workflow consists of the following steps:

- A client sends a withholding tax computation request to Amazon API Gateway.

- API Gateway invokes the Tax Computation AWS Lambda function.

- The Tax Computation Lambda function retrieves the individual’s record from the Cumulative Transaction Store (DynamoDB). This table maintains a real-time running total of transaction values per individual, with each update building on the previous total. It enables accurate tracking of individual totals for applying tiered tax rates.

- The Lambda function fetches the applicable tax rates from the rule engine library based on the transaction details and the individual’s total. The tax amount is computed based on the retrieved tax rates and transaction data.

- The computation result is stored in the Transaction Audit Store (DynamoDB) for auditing and historical purposes.

- The individual’s running tally is updated in the Cumulative Transaction Store based on the current transaction value.

- All transient errors, including ConditionalCheckFailed and TransactionConflict exceptions that occur during DynamoDB operations, are sent to an Amazon Simple Queue Service (Amazon SQS) queue for retry.

- Non-transient errors, such as those caused by client errors (for example, 400 Validation Exception, 401 Unauthorized, 403 Forbidden) or permanent server issues, are handled through a different SQS DLQ.
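The last two steps amount to a routing decision on the error type. The following is a minimal sketch of that decision, not the team's implementation. The queue names and the `route_error` function are hypothetical, the set of codes treated as transient is an assumption apart from the two named in the workflow, and how the code string is extracted from a failed call is left out.

```python
# Hypothetical queue names; the post does not name its queues.
RETRY_QUEUE = "tax-computation-retry"
DEAD_LETTER_QUEUE = "tax-computation-dlq"

# Codes treated as transient in this sketch. The first two are named in
# the workflow above; the throttling codes are an added assumption.
TRANSIENT_CODES = {
    "ConditionalCheckFailed",
    "TransactionConflict",
    "ProvisionedThroughputExceededException",
    "ThrottlingException",
}


def route_error(error_code):
    """Pick the queue for a failed request based on its error code."""
    if error_code in TRANSIENT_CODES:
        return RETRY_QUEUE
    # Validation, authorization, and other permanent failures are not retried.
    return DEAD_LETTER_QUEUE
```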

Implementation considerations

Upon receiving a transaction, the system evaluates the individual’s cumulative transaction value against the threshold derived from the rule engine to determine the applicable tax rate. The cumulative value is then updated in the Cumulative Transaction Store, and an audit record is written.

The challenge arises when multiple threads attempt to update the database concurrently for the same individual. A typical optimistic concurrency control (OCC) strategy would be to read the cumulative value, calculate the tax rate for values in the given range, then write the transaction on the condition that the cumulative value has not changed since the read. If the value has changed, the loop restarts. Under higher traffic, this can lead to many restarts.

Our approach adjusts the typical OCC pattern to have the conditional only be that the cumulative value remains in the initially observed range. Changes to the cumulative value don’t require a restart of the loop unless the value has exceeded the threshold. This method allows higher throughput due to fewer condition failures. If the individual’s value has moved to a higher range, the write operation will be unsuccessful, necessitating a new read and write retry with the updated value.

Unlike typical OCC strategies, this approach succeeds even if the value has changed since the last read, minimizing conflicts and improving throughput. The conditional write may still occasionally fail because concurrent updates have pushed the cumulative sum across the threshold, resulting in a ConditionalCheckFailedException. This is expected and doesn’t indicate data inconsistency.
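The difference between the two conditions can be seen in a small single-process simulation. This is only an illustration of the idea: `CumulativeStore`, `ConditionFailed`, and `count_failures` are hypothetical names, the store is an in-memory stand-in for one individual's DynamoDB item, and the 100,000 upper bound reuses the threshold from the earlier example. It replays three writers that all read the same starting value before any of them writes.

```python
class ConditionFailed(Exception):
    """Stands in for DynamoDB's ConditionalCheckFailedException."""


class CumulativeStore:
    """Single-process stand-in for one individual's item in the store."""

    def __init__(self, value=0):
        self.value = value

    def add_if_unchanged(self, amount, expected):
        # Typical OCC: the write succeeds only if nobody else has written.
        if self.value != expected:
            raise ConditionFailed("value changed since read")
        self.value += amount

    def add_if_in_range(self, amount, lower, upper):
        # Range-based condition: the write succeeds while the value is
        # still inside the tier [lower, upper) that the caller observed.
        if not (lower <= self.value < upper):
            raise ConditionFailed("value left the observed tier")
        self.value += amount


def count_failures(strategy):
    """Replay three writers that all read 0 before anyone writes."""
    store = CumulativeStore()
    observed = store.value  # every writer saw the same stale value
    failures = 0
    for amount in (100, 200, 300):
        try:
            if strategy == "unchanged":
                store.add_if_unchanged(amount, expected=observed)
            else:
                store.add_if_in_range(amount, lower=0, upper=100_000)
        except ConditionFailed:
            failures += 1
    return failures, store.value
```

With the equality condition, only the first writer succeeds and the other two must re-read and retry. With the range condition, all three writes land because the total never leaves the first tier. A range-conditioned write fails only once an earlier write has moved the total past the tier boundary.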

To handle transient errors and prevent duplicate processing of the same transaction, the write is performed as a DynamoDB transaction (TransactWriteItems) that includes a client request token (CRT), which makes the increments idempotent. TransactionCanceledException errors are handled through error handling mechanisms such as exponential backoff.
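A minimal sketch of these two pieces follows, under stated assumptions. `request_token` derives a stable token from a transaction ID so that every retry of the same transaction reuses the same token. Deriving it with `uuid.uuid5` is a choice made for this sketch, not something the post describes. `call_with_backoff` is a generic retry helper. The post mentions exponential backoff only, so the random jitter, the default attempt count, and the base delay are all illustrative.

```python
import random
import time
import uuid


def request_token(transaction_id):
    """Derive a deterministic 36-character token from the transaction ID."""
    return str(uuid.uuid5(uuid.NAMESPACE_URL, transaction_id))


def call_with_backoff(operation, is_retryable, max_attempts=5,
                      base_delay=0.05, sleep=time.sleep):
    """Call operation(), retrying retryable errors with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception as exc:
            last_attempt = attempt == max_attempts - 1
            if last_attempt or not is_retryable(exc):
                raise
            # The delay cap doubles each attempt; the actual sleep is a
            # random fraction of it so retries do not line up.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
```

In use, `operation` would wrap the transactional write and `is_retryable` would recognize a canceled transaction. Passing `sleep` as a parameter keeps the helper easy to test without real delays.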

This combination of strategies enables our system to maintain data consistency while achieving high throughput and scalability. It eliminates the need for complex locking mechanisms, improves efficiency compared to traditional OCC solutions, and provides a flexible and performant solution adaptable to varying transaction volumes and concurrency levels without extensive configuration or tuning.

Cumulative Transaction Store

The Cumulative Transaction Store table is used to maintain the cumulative sum of transaction value for a particular individual. We use the following data model:

Tax Deduction Audit Store

The Tax Deduction Audit Store table is used to store the audit record of tax deduction rate for each transaction. We use the following data model:

Code for conditional writes

The following code demonstrates an atomic conditional write operation across both DynamoDB tables using dynamodb.transact_write_items(). It retrieves an existing record from the Cumulative Transaction Store and calculates updated values for cumulative_amt_consumed based on the current transaction value and existing data. Simultaneously, it prepares a new record for the Transaction Audit Store, capturing transaction details like ID, value, tax amount, and tax rate.

The transact_write_items() method then performs an update on the Cumulative Transaction Store table and a put operation on the Tax Deduction Audit table as a single transaction. If both operations succeed, changes are committed to both tables; otherwise, the entire transaction rolls back, providing data consistency.
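As a hedged sketch of what such a request could look like, the function below builds the keyword arguments for `transact_write_items()` in the low-level boto3 client format. Only `cumulative_amt_consumed` is taken from the text above. The table names, key and attribute names, and the `build_transaction` function are hypothetical. The sketch uses an `ADD` update so that the increment applies to whatever the current total is, and it assumes the individual's item already exists with a numeric `cumulative_amt_consumed`. The duplicate guard on the audit record is an addition of this sketch.

```python
# Hypothetical table names; the post does not give the real ones.
CUMULATIVE_TABLE = "CumulativeTransactionStore"
AUDIT_TABLE = "TaxDeductionAuditStore"


def build_transaction(individual_id, transaction_id, transaction_value,
                      tax_rate, tax_amount, tier_lower, tier_upper, token):
    """Build keyword arguments for a DynamoDB transact_write_items call."""
    # The write is allowed only while the total is still in the observed tier.
    condition = "cumulative_amt_consumed >= :lower"
    values = {
        ":amt": {"N": str(transaction_value)},
        ":lower": {"N": str(tier_lower)},
    }
    if tier_upper is not None:  # the top tier has no upper bound
        condition += " AND cumulative_amt_consumed < :upper"
        values[":upper"] = {"N": str(tier_upper)}

    update_cumulative = {
        "Update": {
            "TableName": CUMULATIVE_TABLE,
            "Key": {"individual_id": {"S": individual_id}},
            # ADD increments atomically; a negative amount handles reversals.
            "UpdateExpression": "ADD cumulative_amt_consumed :amt",
            "ConditionExpression": condition,
            "ExpressionAttributeValues": values,
        }
    }
    put_audit = {
        "Put": {
            "TableName": AUDIT_TABLE,
            "Item": {
                "transaction_id": {"S": transaction_id},
                "individual_id": {"S": individual_id},
                "transaction_value": {"N": str(transaction_value)},
                "tax_rate": {"N": str(tax_rate)},
                "tax_amount": {"N": str(tax_amount)},
            },
            # Extra duplicate guard (an assumption of this sketch).
            "ConditionExpression": "attribute_not_exists(transaction_id)",
        }
    }
    return {
        "TransactItems": [update_cumulative, put_audit],
        "ClientRequestToken": token,
    }
```

The result could be passed to a boto3 client as `boto3.client("dynamodb").transact_write_items(**request)`. If either condition fails, DynamoDB cancels the whole transaction and the client raises `TransactionCanceledException`, at which point the caller re-reads the total and recomputes the tier.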

Results

The performance evaluation of the system involved conducting a series of tests with a fixed runtime of 30 seconds and varying thread counts, while resetting the Cumulative Transaction Store to zero after each execution. This approach allowed for a comprehensive analysis of the system’s behavior under different load conditions.

We observed a consistent increase in transactions processed per second as we scaled from 1 to 130 threads, demonstrating the system’s ability to effectively handle increased concurrency. However, this improved throughput came with a corresponding rise in transient conflicts per second, highlighting the trade-off between performance and conflict management in highly concurrent scenarios.

Transient conflicts occur when multiple transactions simultaneously attempt to update the same items, leading to the cancellation of some transactions. This data indicates that beyond a certain point, adding more threads might not significantly improve throughput due to the increased overhead of managing conflicts.

The following graph illustrates the correlation between thread count and transaction metrics, demonstrating how throughput and conflict rates scale with increasing concurrent threads.

Conclusion

In this post, we demonstrated how the Amazon Fintech team successfully implemented a simplified and highly scalable solution for our tiered tax rate application by using the powerful conditional write feature in DynamoDB. By embracing this approach and proactively handling the occasional ConditionalCheckFailedException, our system can maintain data consistency while achieving high throughput and scalability, even in scenarios with a high volume of concurrent transactions.

This solution elegantly eliminates the need for optimistic locking, which can become a bottleneck as the number of concurrent requests increases. Instead, the Amazon Fintech system relies on the built-in concurrency control mechanisms of DynamoDB, providing data consistency and enabling efficient updates even under high load conditions.

To get started with implementing your own scalable transaction processing system, explore the DynamoDB conditional updates feature. For additional guidance, check out the DynamoDB documentation or reach out to AWS Support with any questions.

About the Authors

Jason Hunter is a California-based Principal Solutions Architect specializing in Amazon DynamoDB. He’s been working with NoSQL databases since 2003. He’s known for his contributions to Java, open source, and XML. You can find more DynamoDB posts and other posts written by Jason Hunter in the AWS Database Blog.

Balajikumar Gopalakrishnan is a Principal Engineer at Amazon Finance Technology. He has been with Amazon since 2013, solving real-world challenges through technology that directly impact the lives of Amazon customers. Outside of work, Balaji enjoys hiking, painting, and spending time with his family. He is also a movie buff!

Jay Joshi is a Software Development Engineer at Amazon Finance Technology. He has been with Amazon since 2020, where he is mainly involved in building platforms for tax computation and reporting across global jurisdictions. Outside his professional life, he enjoys spending time with his family and friends, exploring new culinary destinations, and playing badminton.

Arjun Choudhary has worked as a Software Development Engineer in Amazon’s Finance Technology division since 2019. His primary focus is developing platforms for global direct tax withholding. Outside of work, Arjun enjoys reading novels and playing cricket and volleyball.