LLMs have primarily improved accuracy by scaling pre-training data and compute. However, because available data is finite, attention has shifted toward alternative scaling dimensions, including test-time training and inference-time compute scaling. Reasoning models improve performance by emitting a thought process before the answer, initially through chain-of-thought (CoT) prompting and, more recently, through reinforcement learning (RL) post-training. Scientific domains are well suited to reasoning models because they involve “inverse problems,” where assessing the quality of a solution is straightforward but generating one remains challenging. Despite this conceptual alignment between structured scientific reasoning and model capabilities, current methods lack detailed approaches for scientific reasoning beyond multiple-choice benchmarks.

Technical Evolution of Reasoning Architectures

Reasoning models have evolved from early prompt-based methods such as CoT, zero-shot CoT, and Tree of Thought to more complex RL approaches such as Group Relative Policy Optimization (GRPO), together with inference-time scaling. However, reasoning models in chemistry have focused on knowledge-based benchmarks rather than complex reasoning tasks such as retrosynthesis or molecular design. While datasets such as GPQA-D and MMLU assess chemical knowledge, they fail to evaluate complex chemical reasoning capabilities. Current scientific reasoning efforts remain fragmented: limited attempts include OmniScience for general science, Med-R1 for medical vision-language tasks, and BioReason for genomic reasoning. No comprehensive framework exists for large-scale chemical reasoning model training.
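To make the GRPO idea concrete, here is a minimal sketch of the group-relative advantage that gives the method its name: several completions are sampled for the same prompt, and each reward is normalized against the others in its group. This follows the commonly described formulation of GRPO, not necessarily the exact variant used for ether0. The function name, the `eps` constant, and the use of the population standard deviation are assumptions made for illustration.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against the other samples for the same prompt.

    Assumption: population standard deviation and a small eps to avoid
    division by zero; real implementations may differ in both choices.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled answers to one chemistry question: two correct, two wrong.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
    # A group where every answer earns the same reward.
    print(group_relative_advantages([1.0, 1.0, 1.0, 1.0]))
```

Under this formulation, a group whose samples all earn the same reward yields zero advantage for every sample, so that prompt contributes no gradient signal.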

ether0 Architecture and Design Principles

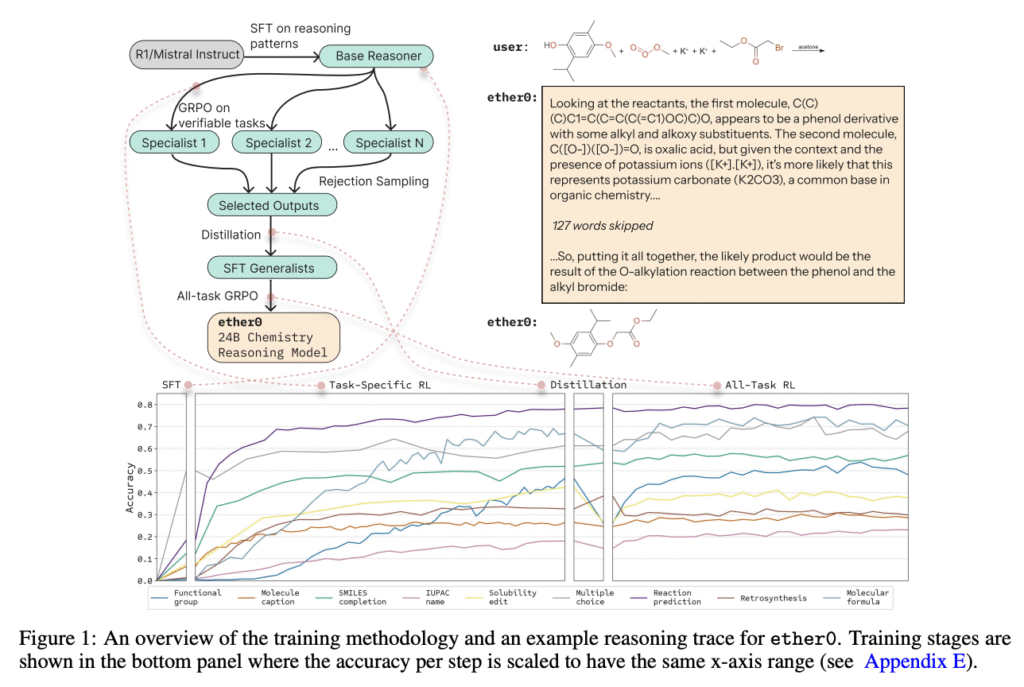

Researchers from FutureHouse have proposed ether0, a model that reasons in natural language and outputs molecular structures as SMILES strings. It demonstrates the efficacy of reasoning models on chemical tasks, outperforming frontier LLMs, human experts, and general chemistry models. The training approach adds several optimizations over vanilla RL, including distillation of reasoning behavior, a dynamic curriculum, and expert model initialization, to improve efficiency and effectiveness. The researchers also analyze data efficiency, failure modes, and reasoning behavior to better understand the utility of reasoning in solving chemistry problems.

Training Pipeline: Distillation and GRPO Integration

The model employs a multi-stage training procedure that alternates between distillation and GRPO phases. The architecture introduces four special tokens that demarcate reasoning and answer boundaries. Training begins with supervised fine-tuning (SFT) on long CoT sequences generated by DeepSeek-R1, filtered for valid SMILES format and reasoning quality. Specialist RL then optimizes task-specific policies for different problem categories using GRPO. Next, distillation merges the specialist models into a generalist through SFT on correct responses collected throughout training. The final phase applies generalist GRPO to the merged model, with continuous quality filtering to remove low-quality reasoning and undesirable molecular substructures.
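The format filtering described above can be sketched as a small parser that splits a completion into reasoning and answer and rejects malformed samples. The article does not name the four special tokens, so the token strings below are hypothetical. The SMILES check is only a crude syntactic stand-in; a real pipeline would presumably validate answers with a cheminformatics toolkit.

```python
import re
from typing import Optional, Tuple

# Hypothetical token names: the article says four special tokens exist
# but does not give their actual strings.
THINK_START, THINK_END = "<|think_start|>", "<|think_end|>"
ANSWER_START, ANSWER_END = "<|answer_start|>", "<|answer_end|>"
_TOKENS = (THINK_START, THINK_END, ANSWER_START, ANSWER_END)

_PATTERN = re.compile(
    re.escape(THINK_START) + r"(.*?)" + re.escape(THINK_END)
    + r"\s*"
    + re.escape(ANSWER_START) + r"(.*?)" + re.escape(ANSWER_END),
    re.DOTALL,
)


def split_completion(text: str) -> Optional[Tuple[str, str]]:
    """Return (reasoning, answer) for a well-formed completion, else None."""
    if any(text.count(tok) != 1 for tok in _TOKENS):
        return None  # each boundary token must appear exactly once
    match = _PATTERN.fullmatch(text.strip())
    if match is None:
        return None
    reasoning, answer = match.group(1).strip(), match.group(2).strip()
    if not reasoning or not answer:
        return None
    return reasoning, answer


def looks_like_smiles(candidate: str) -> bool:
    """Crude stand-in for a real SMILES parser: checks only that there is
    no whitespace and that parentheses and square brackets are balanced."""
    if not candidate or any(ch.isspace() for ch in candidate):
        return False
    depth, in_bracket = 0, False
    for ch in candidate:
        if ch == "[":
            if in_bracket:
                return False
            in_bracket = True
        elif ch == "]":
            if not in_bracket:
                return False
            in_bracket = False
        elif ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0 and not in_bracket


def keep_for_sft(text: str) -> bool:
    """Keep a sample only if it parses and its answer passes the crude check."""
    parsed = split_completion(text)
    return parsed is not None and looks_like_smiles(parsed[1])
```

A sample such as `THINK_START + "Ethanol has two carbons." + THINK_END + ANSWER_START + "CCO" + ANSWER_END` would be kept, while a bare `"CCO"` with no boundary tokens would be dropped. Filtering on reasoning quality, which the article also mentions, is not covered by this sketch.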

Performance Evaluation and Comparative Benchmarks

ether0 demonstrates superior performance against both general-purpose LLMs such as Claude and o1 and chemistry-specific models such as ChemDFM and TxGemma. It achieves the highest accuracy across all open-answer categories while maintaining competitive performance on multiple-choice questions. The model is also more data-efficient than traditional molecular transformer models: trained on only 60,000 reactions rather than the full USPTO datasets, ether0 reaches 70% accuracy after seeing 46,000 training examples, whereas molecular transformers achieved 64.1% on the complete datasets. Under one-shot prompting conditions, ether0 surpasses all evaluated frontier models. Safety alignment procedures filter 80% of unsafe questions without degrading performance on core chemistry tasks.

Conclusion: Implications for Future Scientific LLMs

In conclusion, the researchers introduced ether0, a 24B-parameter model trained on ten challenging molecular tasks. It significantly outperforms frontier LLMs, domain experts, and specialized models through its interleaved RL and behavior distillation pipeline. The model shows strong data efficiency and reasoning capabilities, and it excels at open-answer chemistry tasks involving molecular design, completion, modification, and synthesis. Its limitations include potential generalization challenges beyond organic chemistry, a loss of general instruction-following, and the absence of tool-calling integration. The release of model weights, benchmark data, and reward functions establishes a foundation for advancing scientific reasoning models across diverse domains.



The post ether0: A 24B LLM Trained with Reinforcement Learning RL for Advanced Chemical Reasoning Tasks appeared first on MarkTechPost.
