Artificial intelligence has undergone a significant transition from basic language models to advanced models that focus on reasoning tasks. These newer systems, known as Large Reasoning Models (LRMs), represent a class of tools designed to simulate human-like thinking by producing intermediate reasoning steps before arriving at conclusions. The focus has moved from generating accurate outputs to understanding the process that leads to these answers. This shift has raised questions about how these models manage tasks with layered complexity and whether they truly possess reasoning abilities or are simply leveraging training patterns to guess outcomes.

Redefining Evaluation: Moving Beyond Final Answer Accuracy

A recurring problem with evaluating machine reasoning is that traditional benchmarks mostly assess the final answer without examining the steps involved in arriving at it. Final answer accuracy alone does not reveal the quality of internal reasoning, and many benchmarks are contaminated with data that may have been seen during training. This creates a misleading picture of a model’s true capabilities. To explore actual reasoning, researchers require environments where problem difficulty can be precisely controlled and intermediate steps can be analyzed. Without such settings, it is hard to determine whether these models can generalize solutions or merely memorize patterns.

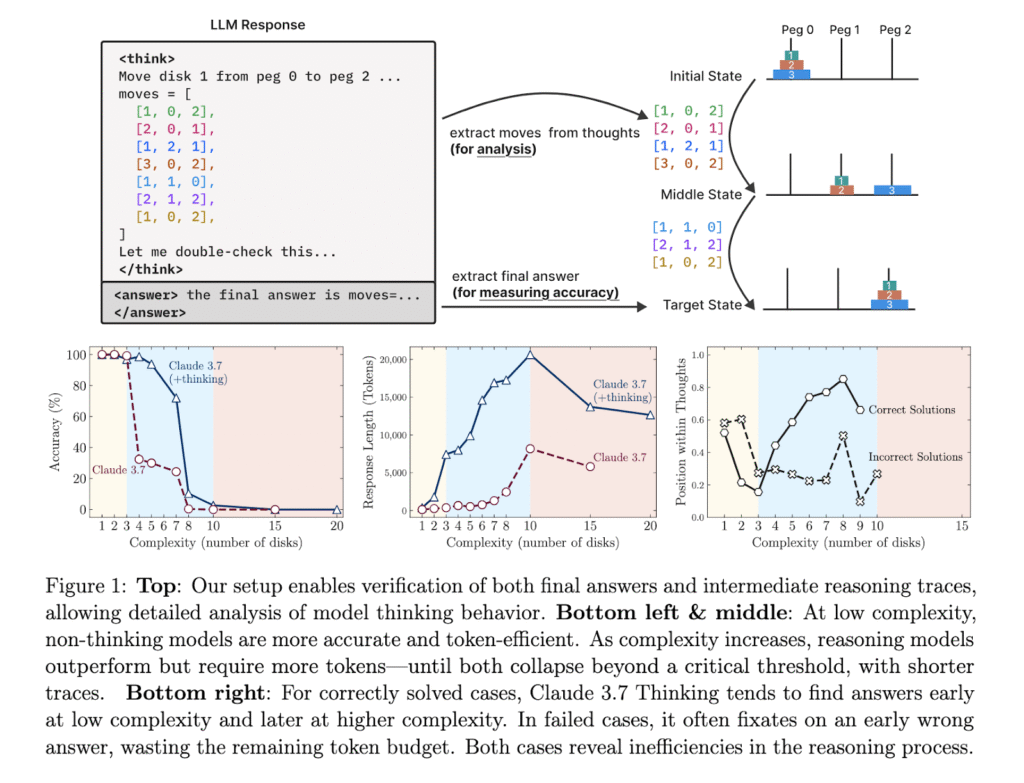

To evaluate reasoning more reliably, the research team at Apple designed a setup using four puzzle environments: Tower of Hanoi, River Crossing, Checkers Jumping, and Blocks World. These puzzles allow precise manipulation of complexity by changing elements such as the number of disks, checkers, or agents involved. Each task requires different reasoning abilities, such as constraint satisfaction and sequential planning. Importantly, these environments are free from typical data contamination, enabling thorough checks of both outcomes and the reasoning steps in between. This method ensures a detailed investigation of how models behave across varied task demands.
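To make the idea of a controllable, checkable puzzle environment concrete, here is a minimal Python sketch for Tower of Hanoi. It is an illustration only, not the researchers' actual harness: the names `solve_hanoi` and `count_valid_moves`, the 0-indexed pegs, and the `(source, target)` move format are all assumptions made for this example. Complexity is set by the single parameter `n` (number of disks), and the validator checks every intermediate move rather than only the final state.

```python
from typing import List, Tuple

# Hypothetical move format for this sketch: (source peg, target peg), pegs 0..2.
Move = Tuple[int, int]


def solve_hanoi(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Move]:
    """Return the optimal move list for n disks using the classic recursion."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, dst, aux)    # park n-1 disks on the spare peg
        + [(src, dst)]                       # move the largest disk
        + solve_hanoi(n - 1, aux, src, dst)  # bring the n-1 disks back on top
    )


def count_valid_moves(n: int, moves: List[Move]) -> Tuple[int, bool]:
    """Simulate a move list step by step.

    Returns (number of legal moves before the first illegal one, solved?).
    """
    pegs = [list(range(n, 0, -1)), [], []]  # largest disk at the bottom of peg 0
    for i, (a, b) in enumerate(moves):
        in_range = a in (0, 1, 2) and b in (0, 1, 2) and a != b
        if not in_range or not pegs[a]:
            return i, False  # bad peg index, same-peg move, or empty source peg
        if pegs[b] and pegs[b][-1] < pegs[a][-1]:
            return i, False  # a larger disk may not sit on a smaller one
        pegs[b].append(pegs[a].pop())
    solved = pegs[2] == list(range(n, 0, -1))
    return len(moves), solved
```

In a setup like this, a model's proposed move list would be passed to `count_valid_moves`, so the evaluation records how far the solution stays legal before the first error, not just whether the final answer is right.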

The study compared two pairs of models: the “thinking” models Claude 3.7 Sonnet (with thinking enabled) and DeepSeek-R1, and their standard LLM counterparts. All models were tested on the puzzles under identical token budgets to measure both accuracy and reasoning efficiency, which revealed how performance shifts across low-, medium-, and high-complexity tasks. The most revealing observation was the emergence of three performance regimes. On simple tasks, non-thinking models outperformed reasoning variants. At medium complexity, reasoning models gained an edge. At high complexity, both types collapsed completely.

Comparative Insights: Thinking vs. Non-Thinking Models Under Stress

An in-depth analysis revealed that reasoning effort, measured in thinking tokens, increased with task difficulty up to a point but then declined even though token budget remained available. For instance, in the Tower of Hanoi, Claude 3.7 Sonnet (thinking) maintained high accuracy until complexity reached a certain threshold, after which performance dropped to zero. Even when the models were given explicit solution algorithms, they failed to execute the steps beyond specific complexity levels. In one case, Claude 3.7 Sonnet could produce around 100 correct moves for the Tower of Hanoi but could not complete a River Crossing task that requires only 11 moves when $N = 3$. This inconsistency exposed serious limitations in symbolic manipulation and exact computation.
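For a sense of scale, the arithmetic behind that comparison can be sketched in a few lines of Python. The formula $2^N - 1$ for the minimum number of Tower of Hanoi moves is standard; the helper names `hanoi_min_moves` and `largest_solvable_n` are hypothetical, and reading "around 100 steps" as a budget of exactly 100 correct moves is an assumption made for illustration.

```python
def hanoi_min_moves(n: int) -> int:
    """Minimum number of moves to solve Tower of Hanoi with n disks."""
    return 2 ** n - 1


def largest_solvable_n(correct_moves: int) -> int:
    """Largest disk count whose full optimal solution fits within a budget
    of consecutive correct moves (hypothetical helper for this example)."""
    n = 0
    while hanoi_min_moves(n + 1) <= correct_moves:
        n += 1
    return n


if __name__ == "__main__":
    # Solution length roughly doubles with each added disk.
    for n in range(1, 11):
        print(n, hanoi_min_moves(n))
    # Assumed budget of 100 correct moves: covers 6 disks (63 moves)
    # but not 7 disks (127 moves).
    print(largest_solvable_n(100))
```

Under this reading, a run of about 100 correct moves is roughly nine times longer than the 11-move River Crossing solution cited above, which is what makes the failure on the shorter task notable.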

The performance breakdown also showed how LRMs handle their internal thought process. On simpler problems, models frequently engaged in “overthinking”: they generated a correct solution early in the reasoning trace but then kept exploring incorrect paths, wasting tokens. At medium complexity, correct answers appeared only later in the reasoning chain, after incorrect attempts. At high complexity, the models failed to produce correct solutions at all. Quantitative analysis confirmed that solution accuracy dropped to near zero as problem complexity increased, and that the number of reasoning tokens the models used began to decline unexpectedly.

Scaling Limits and the Collapse of Reasoning

This research presents a sobering assessment of how current Large Reasoning Models (LRMs) operate. Apple's findings make it clear that, despite some progress, today’s reasoning models are still far from achieving generalized reasoning. The work identifies how performance scales, where it collapses, and why over-reliance on benchmark accuracy fails to capture deeper reasoning behavior. Controlled puzzle environments proved to be a powerful tool for uncovering hidden weaknesses in these systems, and the results underline the need for more robust designs in the future.


The post Apple Researchers Reveal Structural Failures in Large Reasoning Models Using Puzzle-Based Evaluation appeared first on MarkTechPost.
