Introduction

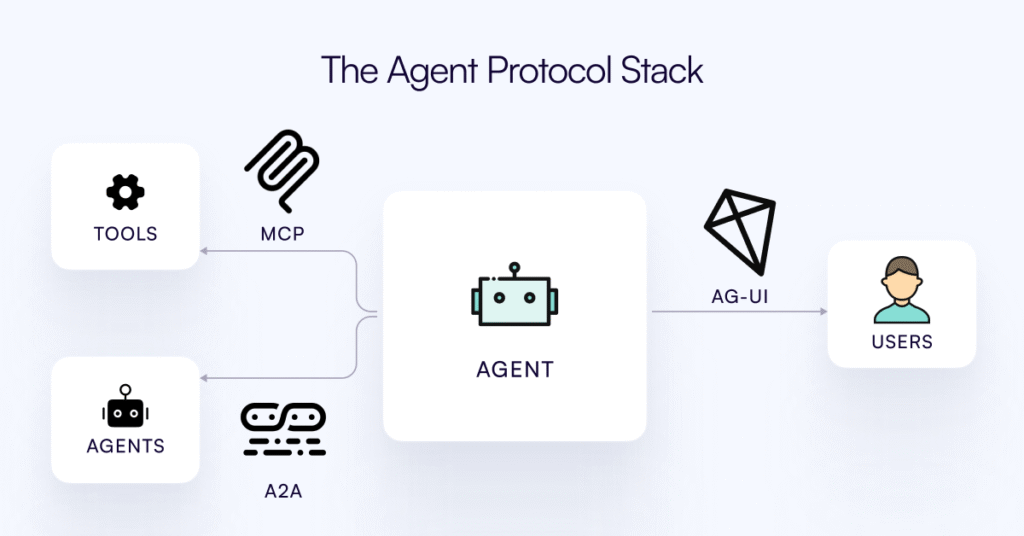

AI agents are increasingly moving from pure backend automators to visible, collaborative elements within modern applications. However, making agents genuinely interactive—capable of both responding to users and proactively guiding workflows—has long been an engineering headache. Each team ends up building custom communication channels, event handling, and state management, all for similar interaction needs.

The initial release of AG‑UI, announced in May 2025, served as a practical, open‑source proof-of-concept protocol for inline agent-user communication. It introduced a single-stream architecture—typically HTTP POST paired with Server-Sent Events (SSE)—and established a vocabulary of structured JSON events (e.g., TEXT_MESSAGE_CONTENT, TOOL_CALL_START, STATE_DELTA) that could drive interactive front-end components. The first version addressed core integration challenges—real-time streaming, tool orchestration, shared state, and standardized event handling—but users found that further formalization of event types, versioning, and framework support was needed for broader production use.

AG-UI's latest update takes a different approach. Rather than adding yet another toolkit, it offers a lightweight protocol that standardizes the conversation between agents and user interfaces. This version brings the protocol closer to production quality, improves event clarity, and expands compatibility with real-world agent frameworks and clients.

What Sets AG-UI’s Latest Update Apart

AG-UI’s latest update is an incremental but meaningful step for agent-driven applications. Unlike earlier ad-hoc attempts at interactivity, it is built around explicit, versioned events. The protocol isn’t tightly coupled to any particular stack; it’s designed to work with multiple agent backends and client types out of the box.

Key features in the latest update of AG-UI include:

- A formal set of ~16 event types, covering the full lifecycle of an agent—streamed outputs, tool invocations, state updates, user prompts, and error handling.

- Cleaner event schemas, allowing clients and agents to negotiate capabilities and synchronize state more reliably.

- More robust support for both direct (native) integration and adapter-based wrapping of legacy agents.

- Expanded documentation and SDKs that make the protocol practical for production use, not just experimentation.

Interactive Agents Require Consistency

Many AI agents today remain hidden in the backend, designed to handle requests and return results with little regard for real-time user interaction. Making agents interactive means solving several technical challenges:

- Streaming: Agents need to send incremental results or messages as soon as they’re available, not just at the end of a process.

- Shared State: Both agent and UI should stay in sync, reflecting changes as the task progresses.

- Tool Calls: Agents must be able to request external tools (such as APIs or user actions) and get results back in a structured way.

- Bidirectional Messaging: Users should be able to respond or guide the agent, not just passively observe.

- Security and Control: Tool invocation, cancellations, and error signals should be explicit and managed safely.

Without a shared protocol, every developer ends up reinventing these wheels—often imperfectly.

How the Latest Update of AG-UI Works

AG-UI’s latest update formalizes the agent-user interaction as a stream of typed events. Agents emit these events as they operate; clients subscribe to the stream, interpret the events, and send responses when needed.
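The emit, interpret, and respond cycle described above can be illustrated with a minimal in-process sketch. This is not the AG-UI SDK: the agent is a plain Python generator, and the field names (`text`, `name`, `args`, `value`) and the `get_weather` tool are hypothetical, chosen only to show the shape of the exchange.

```python
from typing import Any, Callable, Dict, Generator, List, Optional

Event = Dict[str, Any]


def demo_agent() -> Generator[Event, Optional[Event], None]:
    """Hypothetical agent: emits events and pauses for a tool result."""
    yield {"type": "message", "text": "Looking up the weather..."}
    # Ask the client to run a tool; the client's reply arrives through send().
    result = yield {"type": "function_call", "name": "get_weather",
                    "args": {"city": "Paris"}}
    yield {"type": "message", "text": f"It is {result['value']} in Paris."}


def run_client(agent: Generator[Event, Optional[Event], None],
               tools: Dict[str, Callable[..., Any]]) -> List[str]:
    """Consume the event stream, render messages, and answer tool requests."""
    transcript: List[str] = []
    try:
        event = next(agent)
        while True:
            reply: Optional[Event] = None
            if event["type"] == "message":
                transcript.append(event["text"])
            elif event["type"] == "function_call":
                value = tools[event["name"]](**event["args"])
                reply = {"type": "tool_result", "name": event["name"],
                         "value": value}
            # Hand the reply (or None) back and wait for the next event.
            event = agent.send(reply)
    except StopIteration:
        pass  # the agent finished its run
    return transcript


if __name__ == "__main__":
    print(run_client(demo_agent(), {"get_weather": lambda city: "sunny"}))
```

In a real deployment the two sides would sit on opposite ends of a network connection, but the control flow is the same: the client only reacts to typed events and replies in a structured form.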

The Event Stream

The core of the latest update of AG-UI is its event taxonomy. There are ~16 event types, including:

- message: Agent output, such as a status update or a chunk of generated text.

- function_call: Agent asks the client to run a function or tool, often requiring an external resource or user action.

- state_update: Synchronizes variables or progress information.

- input_request: Prompts the user for a value or choice.

- tool_result: Sends results from tools back to the agent.

- error and control: Signal errors, cancellations, or completion.

All events are JSON-encoded, typed, and versioned. This structure makes it straightforward to parse events, handle errors gracefully, and add new capabilities over time.
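As a rough illustration of why typed, versioned JSON events are easy to handle, the sketch below parses an event, turns malformed input into an error event, and skips unknown types instead of failing. The type names come from the list above; the envelope fields (`type`, `version`, `payload`) and the return labels are assumptions made for this example, not the protocol's actual schema.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict

# Type names from the list above; the envelope layout is an assumption.
KNOWN_TYPES = {"message", "function_call", "state_update",
               "input_request", "tool_result", "error", "control"}


@dataclass
class Event:
    type: str
    version: str = "1"
    payload: Dict[str, Any] = field(default_factory=dict)


def parse_event(raw: str) -> Event:
    """Decode one JSON event; malformed input becomes an 'error' event."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return Event("error", payload={"reason": f"invalid JSON: {exc.msg}"})
    if not isinstance(data, dict) or not isinstance(data.get("type"), str):
        return Event("error", payload={"reason": "missing 'type' field"})
    payload = data.get("payload")
    if not isinstance(payload, dict):
        payload = {}
    return Event(data["type"], str(data.get("version", "1")), payload)


def handle(event: Event, state: Dict[str, Any]) -> str:
    """Apply one event to local UI state and report what happened."""
    if event.type not in KNOWN_TYPES:
        return "ignored"  # newer event types are skipped, not fatal
    if event.type == "state_update":
        state.update(event.payload)  # shallow merge into shared state
        return "state"
    if event.type == "error":
        return "error"
    return "rendered"
```

Ignoring unknown types rather than raising is one simple way for an older client to keep working when new event types are introduced.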

Integrating Agents and Clients

There are two main patterns for integration:

- Native: Agents are built or modified to emit AG-UI events directly during execution.

- Adapter: For legacy or third-party agents, an adapter module can intercept outputs and translate them into AG-UI events.
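The adapter pattern can be sketched in a few lines. Here `legacy_agent` is a hypothetical stand-in for a third-party agent that only yields plain text chunks, and `adapt` wraps that output in event dictionaries. The event fields (`text`, `reason`, `action`) are illustrative assumptions rather than the official schema.

```python
from typing import Any, Dict, Iterable, Iterator


def legacy_agent(prompt: str) -> Iterator[str]:
    """Stand-in for an existing agent that only produces text chunks."""
    for word in f"Echo: {prompt}".split():
        yield word


def adapt(chunks: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Translate raw text chunks into typed events, without touching the agent."""
    try:
        for chunk in chunks:
            yield {"type": "message", "text": chunk}
    except Exception as exc:
        # Surface agent failures as an explicit event instead of a broken stream.
        yield {"type": "error", "reason": str(exc)}
    else:
        yield {"type": "control", "action": "done"}


if __name__ == "__main__":
    for event in adapt(legacy_agent("hello")):
        print(event)
```

The legacy agent itself stays unchanged; only the thin translation layer knows about event types.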

On the client side, applications open a persistent connection (usually via SSE or WebSocket), listen for events, and update their interface or send structured responses as needed.

The protocol is intentionally transport-agnostic: any transport that can stream events in real time, such as SSE or WebSocket, can carry it.
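For the SSE case, the framing is simple enough to sketch by hand. The example below follows the standard Server-Sent Events text format (`data:` lines terminated by a blank line, with `:` lines treated as comments) and assumes each frame carries one JSON event. The helper names `encode_sse` and `decode_sse` are hypothetical, and a production client would normally rely on an existing SSE library instead.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List


def encode_sse(event: Dict[str, Any]) -> str:
    """Serialize one event as a single SSE frame."""
    return f"data: {json.dumps(event)}\n\n"


def decode_sse(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Rebuild JSON events from a stream of SSE text lines."""
    buffer: List[str] = []
    for line in lines:
        line = line.rstrip("\r\n")
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        if line.startswith("data:"):
            value = line[5:]
            if value.startswith(" "):
                value = value[1:]  # strip the single optional space
            buffer.append(value)
        elif line == "" and buffer:
            # A blank line ends the frame; multi-line data is joined by "\n".
            yield json.loads("\n".join(buffer))
            buffer = []


if __name__ == "__main__":
    wire = encode_sse({"type": "message", "text": "hi"}) + ": keep-alive\n"
    print(list(decode_sse(wire.splitlines())))
```

Because the events are plain JSON, the same payloads could be sent unchanged over a WebSocket; only the framing layer would differ.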

Adoption and Ecosystem

Since its initial release, AG-UI has seen adoption among popular agent orchestration frameworks. The latest version's expanded event schema and improved documentation have accelerated integration efforts.

Current or in-progress integrations include:

- LangChain, CrewAI, Mastra, AG2, Agno, LlamaIndex: Each offers orchestration for agents that can now interactively surface their internal state and progress.

- AWS, A2A, ADK, AgentOps: Work is ongoing to bridge cloud, monitoring, and agent operation tools with AG-UI.

- Human Layer (Slack integration): Demonstrates how agents can become collaborative team members in messaging environments.

The protocol has gained traction with developers looking to avoid building custom socket handlers and event schemas for each project. It currently has more than 3,500 GitHub stars and is being used in a growing number of agent-driven products.

Developer Experience

The latest update of AG-UI is designed to minimize friction for both agent builders and frontend engineers.

- SDKs and Templates: The CLI tool npx create-ag-ui-app scaffolds a project with all dependencies and sample integrations included.

- Clear Schemas: Events are versioned and documented, supporting robust error handling and future extensibility.

- Practical Documentation: Real-world integration guides, example flows, and visual assets help reduce trial and error.

All resources and guides are available at AG-UI.com.

Use Cases

- Embedded Copilots: Agents that work alongside users in existing apps, providing suggestions and explanations as tasks evolve.

- Conversational UIs: Dialogue systems that maintain session state and support multi-turn interactions with tool usage.

- Workflow Automation: Agents that orchestrate sequences involving both automated actions and human-in-the-loop steps.

Conclusion

The latest update of AG-UI provides a well-defined, lightweight protocol for building interactive agent-driven applications. Its event-driven architecture abstracts away much of the complexity of agent-user synchronization, real-time communication, and state management. With explicit schemas, broad framework support, and a focus on practical integration, it enables development teams to build more reliable, interactive AI systems without repeatedly solving the same low-level problems.

Developers interested in adopting the latest update of AG-UI can find SDKs, technical documentation, and integration assets at AG-UI.com.

The CopilotKit team is also organizing a webinar.

Support open source and star the AG-UI GitHub repo.

Discord Community: https://go.copilotkit.ai/AG-UI-Discord

Thanks to the CopilotKit team for the thought leadership and resources for this article. The CopilotKit team has supported us in this content.

The post From Backend Automation to Frontend Collaboration: What’s New in AG-UI Latest Update for AI Agent-User Interaction appeared first on MarkTechPost.
